One of the most difficult challenges for any sector is trying to alert an audience without skills in ‘cyber’ to the presence of unusual activity that might suggest a hack or other security tampering.

What if we could alert a ship’s captain, an airplane pilot, car driver or machine operator that something might be up? A reason to be suspicious of their instruments or look more carefully at their controls?

Ships

A comparison might be the ECDIS (the ship’s digital chart display) losing GPS and alarming because the position fix can no longer be trusted. The captain is now more alert and cross-checks using multiple systems, including radar and visual position fixes if near land.

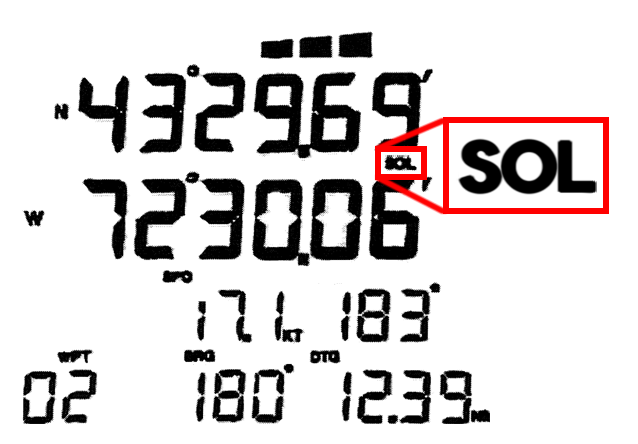

Read up on the Royal Majesty incident – the crew failed to notice that the GPS antenna had failed, so the system switched from Satellite OnLine mode (SOL) to dead reckoning (‘DR’) mode. The DR indicator and related alarm were easily missed.

Various external visual cues caused the officers to believe that the ship was on course, when in fact it was some way off course and then ran aground. A trivial issue started a chain of events that could have been prevented with alerting, cyber or not.

‘Screen fixation’ is a very real problem in many industries, as systems have been digitised quickly. It’s even more of a problem as younger generations, who have grown up with tablets, smartphones and other computers, enter industry. Experienced ships’ captains will use all sources of information, including looking out of the window, yet they often report that more junior officers implicitly trust the electronic charts over everything else.

One of the major problems I have had with raising cyber awareness in shipping is getting experienced captains to appreciate that hacking isn’t like the movies. Your ECDIS won’t pop up with a skull and crossbones. Your ship won’t steer itself automatically to the middle of the Atlantic and await a James Bond-style evil villain. Genuinely targeted hacking is usually subtle, or happens at the worst possible moment, say when navigating a constricted shipping lane.

Having that early alert that something is up can improve the operator’s response.

Planes

As a pilot, I’ve experienced the same: usable cockpit moving maps were just emerging as I qualified. It’s very easy to lose classic navigation skills, overlooking radio navaids (VOR/NDB etc) in favour of the accuracy of a GPS moving map. That’s all fine until you forget to update the airspace database, the battery dies or it malfunctions / misreports position for whatever reason.

The same kind of alerting might be useful on aircraft: we don’t expect a commercial pilot to be cyber-skilled, but we may be able to raise their attention. Pilots are taught to continually scan their instruments and correlate position, yet we still become screen-fixated and trust the digital systems. Tunnel vision and focus on a single problem have been contributory causes of many incidents.

Misdiagnosis of issues can start a cascade that leads to disaster. The loss of Air France flight 447 is a salutary lesson: iced-up pitot tubes led to unreliable airspeed indications and started a chain of events that led to a crash.

Cars

Is there also a place for similar alerting in other connected industries and devices? I question whether there is a place in cars, though as autonomy increases, perhaps there is a case in future:

An autonomous vehicle is attacked. Its steering motors are tampered with and it crashes at speed. Is there a case for alerting the driver to take control, or at least hit an emergency stop button if there is no manual control option? The opening episode of the ‘Upload’ TV show on Amazon Prime shows a remarkably similar incident.

However, given that many drivers already ignore minor alerts from their vehicle, would they simply ignore yet another flashing light? Defer it for the next trip to the repair shop?

Yet, if there is a compromise, would the attacker simply deactivate the alerts? It’s worth considering Amazon’s Echo here – the microphone ‘mute’ button is one of the few hard-wired connections. All other controls are managed in software, yet Amazon decided that the mic mute was so important for privacy that it could not be trusted to software alone. We should learn from Amazon.

Having triggers available to raise operator awareness of inconsistencies in their instruments could be very valuable. It could also be very dangerous and cause the very fixation we are trying to avoid.

There remains the biggest challenge of all though: determining how to detect anomalies and only report those that are indicative of a cyber compromise.

Any system that misreports, or errs too far on the side of caution, runs the risk of false positives. Crews and operators will quickly come to distrust or even ignore the alert, leaving it with little value.
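To see why false positives erode trust so quickly, here is a rough back-of-envelope sketch. Every number in it is invented purely for illustration (I have no real figures for how often compromises occur or how good a detector might be), and the function name is my own. It simply applies Bayes' rule to ask: when the alert goes off, how often is it a real problem?

```python
def alert_precision(prevalence, detection_rate, false_alarm_rate):
    """Fraction of alerts that correspond to a real compromise (Bayes' rule).

    prevalence:        assumed share of operating hours with a real compromise
    detection_rate:    assumed chance the alert fires when there is one
    false_alarm_rate:  assumed chance the alert fires when there is none
    """
    true_alerts = prevalence * detection_rate
    false_alerts = (1 - prevalence) * false_alarm_rate
    return true_alerts / (true_alerts + false_alerts)


# Illustrative assumptions only: 1 compromised hour in 10,000,
# a detector that catches 99% of them, and a 1% false alarm rate.
precision = alert_precision(0.0001, 0.99, 0.01)
print(f"{precision:.1%} of alerts would be genuine")
```

Under those made-up assumptions, roughly one alert in a hundred would be genuine, which is exactly the kind of ratio that teaches a crew to ignore the light.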

Some errors will be simple to highlight: a position mismatch between systems, a variance between speeds, a data feed that has stopped, or clearly anomalous traffic with known bad features. Most, however, will be very hard to detect.
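The simple cases might look something like the sketch below. It is a minimal illustration, not how any real ECDIS or avionics suite works: the `Fix` record, the `cross_check` function and all of the thresholds are hypothetical, and sensible thresholds would depend heavily on the vessel or aircraft and the sensors involved. It compares two position sources and reports, in plain language, a stale feed, a position mismatch or a speed mismatch.

```python
import math
from dataclasses import dataclass


@dataclass
class Fix:
    """A position report from one source (fields are illustrative)."""
    source: str
    lat: float        # degrees
    lon: float        # degrees
    speed_kn: float   # knots
    timestamp: float  # seconds on a clock shared by both sources


def haversine_nm(lat1, lon1, lat2, lon2):
    """Great-circle distance in nautical miles."""
    r_nm = 3440.065  # approximate mean Earth radius in nautical miles
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * r_nm * math.asin(math.sqrt(a))


def cross_check(a, b, now, max_offset_nm=0.5, max_speed_diff_kn=2.0, max_age_s=10.0):
    """Return plain-language warnings; an empty list means no disagreement found."""
    warnings = []
    # A data feed that has stopped updating
    for fix in (a, b):
        age = now - fix.timestamp
        if age > max_age_s:
            warnings.append(f"{fix.source}: no update for {age:.0f} s")
    # A position mismatch between systems
    offset = haversine_nm(a.lat, a.lon, b.lat, b.lon)
    if offset > max_offset_nm:
        warnings.append(f"{a.source} and {b.source} positions differ by {offset:.1f} nm")
    # A variance between speeds
    speed_diff = abs(a.speed_kn - b.speed_kn)
    if speed_diff > max_speed_diff_kn:
        warnings.append(f"{a.source} and {b.source} speeds differ by {speed_diff:.1f} kn")
    return warnings


gps = Fix("GPS", 41.1, -70.0, 14.0, timestamp=50.0)
radar = Fix("Radar fix", 41.0, -70.0, 14.2, timestamp=100.0)
for message in cross_check(gps, radar, now=101.0):
    print(message)
```

Note that the output says nothing about ‘cyber’ at all. It only tells the operator which instruments disagree, which is the prompt to go and look out of the window.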

What is an error that should be attributed to a cyber incident? What is just an error? What is normal? Vendors have spent years building intrusion detection systems for IT networks with varying success as the arms race with the attacker continues. To then create a template of ‘normal’ in a completely new environment such as ARINC 429 / AFDX / NMEA-0183 / CAN / serial etc is a giant leap.

Conclusion

Perhaps the first step is to start gathering data in an attempt to define ‘normal’ – by logging and analysing data for cyber incidents, we stand a chance of getting started. Right now, to my knowledge, this gathering of data is either non-existent or in its infancy in the automotive, aviation and shipping industries.
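As a very rough sketch of what ‘defining normal’ from logged data could mean, the example below learns the typical interval between messages for each message ID from a recorded log, then flags intervals that fall far outside that range. This is an assumption-laden illustration: the log format, the function names and the threshold `k` are all hypothetical, and real traffic on a bus such as CAN or NMEA-0183 is far messier than evenly spaced messages.

```python
from collections import defaultdict
from statistics import mean, pstdev


def learn_baseline(log):
    """Learn the typical gap between messages for each message ID.

    log: iterable of (timestamp_s, message_id) tuples in time order.
    Returns {message_id: (mean_interval, stdev_interval)}.
    """
    last_seen = {}
    intervals = defaultdict(list)
    for ts, msg_id in log:
        if msg_id in last_seen:
            intervals[msg_id].append(ts - last_seen[msg_id])
        last_seen[msg_id] = ts
    # Require at least two intervals before trusting the statistics
    return {m: (mean(v), pstdev(v)) for m, v in intervals.items() if len(v) >= 2}


def is_unusual(baseline, msg_id, interval, k=4.0, floor=0.001):
    """True if the ID was never logged, or the interval is more than
    k standard deviations from the learned mean. `floor` stops a
    perfectly regular log from producing a zero-width tolerance."""
    if msg_id not in baseline:
        return True
    mu, sigma = baseline[msg_id]
    return abs(interval - mu) > k * max(sigma, floor)


# Hypothetical log: message 0x100 arriving every 100 ms
log = [(0.0, 0x100), (0.1, 0x100), (0.2, 0x100), (0.3, 0x100), (0.4, 0x100)]
baseline = learn_baseline(log)

print(is_unusual(baseline, 0x100, 0.1))   # the usual spacing
print(is_unusual(baseline, 0x100, 0.01))  # arriving ten times too fast
print(is_unusual(baseline, 0x200, 0.1))   # an ID never seen in the log
```

Even this toy version makes the dependency clear: without the logged data there is no baseline, and without a baseline there is nothing to compare against.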

With all that said, the canary in the mine saved the lives of many miners, so it’s a goal worth pursuing.