TL;DR

Docker Desktop for Windows suffers from a privilege escalation vulnerability to SYSTEM. The core of the issue lies with the fact that the Docker Desktop Service, the primary Windows service for Docker, communicates as a client to child processes using named pipes.

The high-privilege Docker Desktop Service can be tricked into connecting to a named pipe that has been set up by a malicious lower-privilege process. Once the connection is made, the malicious process can impersonate the Docker Desktop Service account (SYSTEM) and execute arbitrary system commands with the highest level of privileges.

Here’s a video of the PoC in action:

The vulnerability has been assigned CVE-2020-11492, and the latest Docker Desktop Community and Enterprise releases fix the issue.

When Docker Desktop for Windows is installed, a Windows service called Docker Desktop Service is installed. This service is always running by default, waiting idly by for the Docker Desktop application to start.

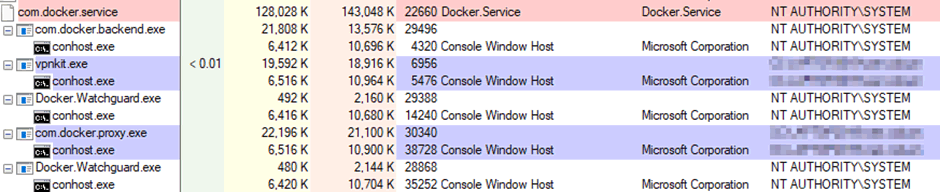

Once the Docker Desktop application is started, many child processes are created to manage various Docker behaviours, from Docker image creation to watchdog processes.

Here’s a typical Docker Desktop process tree after launch:

Pipe Dream

When applications launch child processes, it is not uncommon to use Windows named pipes as a form of inter-process communication (IPC). Like TCP/IP, named pipes let both ends send and receive application-specific data. Named pipes also work over the network, but in Docker's case the service connects to named pipes on the same machine as a form of IPC with the child processes.

Now this is where it starts to get interesting. Named pipes have a unique feature that allows the server side of the connection to impersonate the client account that is connecting. Why does this exist? Well, it's quite simple. Many services running on Windows offer functionality to users of the machine, be they local or remote. The impersonation functionality allows the service to drop its own credentials in favour of those of the connecting client. When files or other restricted operating system functionality are requested, the action is performed under the impersonated account and not the service account that the process was launched under.

First Impressions are Important

Impersonation is not something any standard user account can perform. It's a special privilege that must be assigned to accounts. The specific right is called “Impersonate a client after authentication” and is part of the User Rights Assignment item within Group Policy Editor.

Here is the list of accounts that have the impersonate privilege by default.

• Administrators

• Local Service

• Network Service

• IIS AppPool Account

• Microsoft SQL Server Account

• Service

Many of the accounts above are designed to be limited accounts with minimal privileges. For example, Network Service has very little access to a machine’s resources. The same goes for the IIS AppPool accounts, which are usually used when serving web applications from IIS. The last one is the most interesting though. This is how Group Policy Editor describes the built-in Service group.

Note: By default, services that are started by the Service Control Manager have the built-in Service group added to their access tokens. Component Object Model (COM) servers that are started by the COM infrastructure and that are configured to run under a specific account also have the Service group added to their access tokens. As a result, these services get this user right when they are started.

Yes, you have read that correctly. Anything started by the Service Control Manager will automatically get the impersonation privilege, no matter which account is used to start the service.
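To see whether a particular context (a service, an IIS worker process, a standard user shell) actually holds this right, one option is to look for SeImpersonatePrivilege, the token privilege that corresponds to “Impersonate a client after authentication”, in the output of `whoami /priv`. The following is a minimal sketch of that check. The function name and the sample output are illustrative assumptions, not taken from the PoC.

```python
import subprocess
import sys


def has_impersonate_privilege(whoami_output: str) -> bool:
    """Return True if SeImpersonatePrivilege is listed in `whoami /priv` output.

    Only presence is checked: a privilege that is listed but shown as
    Disabled is still held by the token and can be enabled by the process.
    """
    for line in whoami_output.splitlines():
        fields = line.split()
        if fields and fields[0].lower() == "seimpersonateprivilege":
            return True
    return False


# Illustrative sample, shaped like typical `whoami /priv` output.
SAMPLE = """\
PRIVILEGES INFORMATION
----------------------

Privilege Name                Description                               State
============================= ========================================= ========
SeChangeNotifyPrivilege       Bypass traverse checking                  Enabled
SeImpersonatePrivilege        Impersonate a client after authentication Enabled
SeCreateGlobalPrivilege       Create global objects                     Enabled
"""

if __name__ == "__main__":
    if sys.platform == "win32":
        # Query the real token of the current process on Windows.
        out = subprocess.run(
            ["whoami", "/priv"], capture_output=True, text=True
        ).stdout
    else:
        # Fall back to the sample so the sketch runs anywhere.
        out = SAMPLE
    print(has_impersonate_privilege(out))
```

Run from a standard user shell, the privilege would normally be absent, which matches the failed exploit attempt described in the timeline. Run from a service or an IIS App Pool worker, it would normally be present.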

Impersonate the Dockmaster

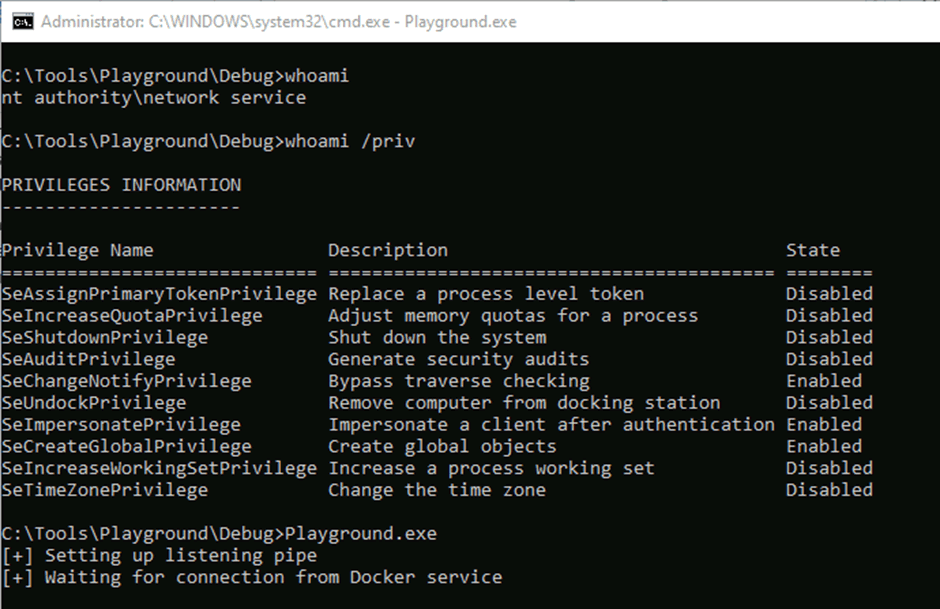

OK, back to Docker. As mentioned above, when the Docker Desktop app starts, it spawns a bunch of child processes. When these are launched, the main Docker service expects the child processes to create the named pipes for IPC purposes. The high-privilege service then connects to these named pipes as the client; it does not serve them. So, if a malicious piece of code can execute under the context of a process with impersonate privileges, it can set up a pipe called \\.\pipe\dockerLifecycleServer and wait for the service to connect.

The PoC waiting for connection:

OK, I hear some of the sceptics out there: “But you need Administrator rights to create such a service”. Well, let’s say you happen to be hosting a vulnerable IIS web application on the same machine as Docker for Windows. This could be one example of a successful attack vector. The initial attack could utilise a vulnerability in the web application to perform code execution under the limited IIS App Pool account. Once that is achieved, our special Docker named pipe can be set up to perform the privilege escalation to SYSTEM.

Stealing the Ship

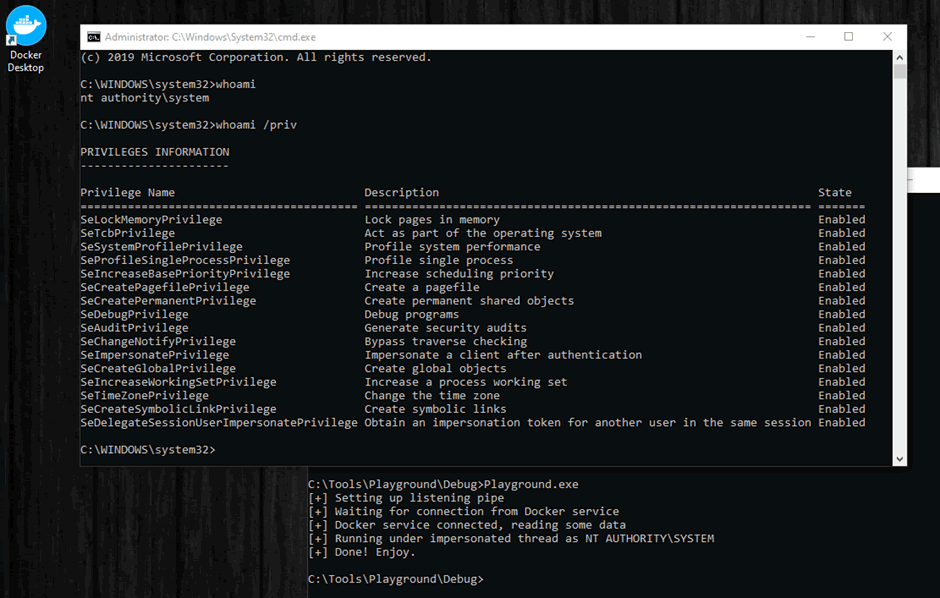

Once the pipe is listening, it’s just a matter of waiting for Docker Desktop to be started and connect to our malicious pipe. Once Docker is connected, we impersonate the connecting client, which is SYSTEM, and launch a new process using the CreateProcessWithTokenW API.

Command prompt launched as SYSTEM:

Disclosure and Fix

When we initially disclosed the issue, Docker denied that the vulnerability even existed. Their stance was that impersonation is a Windows feature and that we should speak to Microsoft.

Whilst impersonation is certainly a Windows feature, when developing SYSTEM services that use named pipes as a client, it’s the developer’s responsibility to ensure that impersonation is disabled if such a feature is not needed. Unfortunately, the default behaviour when opening a named pipe as a client is to enable impersonation, which means the behaviour is often missed and overlooked.

After a few emails back and forth, then finally submitting a working PoC, Docker did agree that it was a security vulnerability and as such have now issued a fix. When the Docker service process connects to the named pipes of spawned child processes it now uses the SecurityIdentification impersonation level. This will allow the server end of the pipe to get the identity and privileges of the client but not allow impersonation.
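For readers writing their own pipe clients, here is a minimal sketch of what capping the impersonation level can look like at the Win32 level. It is not Docker's code, and the source does not say which API Docker Desktop uses. The sketch assumes a client that opens the pipe with `CreateFileW`, where the level is passed through the `SECURITY_SQOS_PRESENT` and `SECURITY_*` bits of `dwFlagsAndAttributes`. The helper names `sqos_flags` and `open_pipe_as_client` are hypothetical.

```python
import ctypes
import sys
from enum import IntEnum

# Values from the Windows SDK headers.
GENERIC_READ = 0x80000000
GENERIC_WRITE = 0x40000000
OPEN_EXISTING = 3
SECURITY_SQOS_PRESENT = 0x00100000


class ImpersonationLevel(IntEnum):
    """SECURITY_IMPERSONATION_LEVEL values."""
    ANONYMOUS = 0
    IDENTIFICATION = 1
    IMPERSONATION = 2
    DELEGATION = 3


def sqos_flags(level: ImpersonationLevel) -> int:
    """Build the CreateFileW flag bits that request a given impersonation level.

    The SECURITY_* flags are the level shifted left by 16 bits, and they
    only take effect when SECURITY_SQOS_PRESENT is set as well.
    """
    return SECURITY_SQOS_PRESENT | (int(level) << 16)


def open_pipe_as_client(pipe_name, level=ImpersonationLevel.IDENTIFICATION):
    """Open an existing named pipe while limiting what the server can do
    with the client's token (hypothetical helper, Windows only)."""
    if sys.platform != "win32":
        raise OSError("CreateFileW named pipe clients are Windows-only")
    kernel32 = ctypes.WinDLL("kernel32", use_last_error=True)
    kernel32.CreateFileW.restype = ctypes.c_void_p
    kernel32.CreateFileW.argtypes = [
        ctypes.c_wchar_p, ctypes.c_uint32, ctypes.c_uint32, ctypes.c_void_p,
        ctypes.c_uint32, ctypes.c_uint32, ctypes.c_void_p,
    ]
    handle = kernel32.CreateFileW(
        pipe_name, GENERIC_READ | GENERIC_WRITE, 0, None,
        OPEN_EXISTING, sqos_flags(level), None,
    )
    # INVALID_HANDLE_VALUE is -1 reinterpreted as a pointer-sized integer.
    if handle is None or handle == ctypes.c_void_p(-1).value:
        raise ctypes.WinError(ctypes.get_last_error())
    return handle


if __name__ == "__main__":
    print(hex(sqos_flags(ImpersonationLevel.IDENTIFICATION)))
```

With the identification level, a server that calls the impersonation API can still inspect who connected, but a token obtained that way should not be usable to launch a process as the client.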

- 25th March 2020 – Details sent to Docker security team

- 25th March 2020 – Docker respond and do not consider it a security issue

- 26th March 2020 – Additional clarification sent to Docker to describe the risk

- 26th March 2020 – Docker respond suggesting we speak to Microsoft

- 26th March 2020 – Further clarification sent, describing Docker connecting as a named pipe client and not a server

- 26th March 2020 – Docker respond indicating that it will now be looked at by the development team

- 30th March 2020 – Requested a status update on whether Docker will be treating the report as a vulnerability

- 1st April 2020 – Docker respond, indicating that the report is still under discussion, but requested the PoC code. PoC code sent.

- 1st April 2020 – Docker attempt to run exploit using standard account without SeImpersonatePrivilege and indicate exploit failed.

- 1st April 2020 – Instructions sent on how to run the PoC with an account that has SeImpersonatePrivilege

- 1st April 2020 – Docker confirm it will be treated as a vulnerability

- 2nd April 2020 – Fix pushed to Edge release of Docker and CVE-2020-11492 assigned

- 11th May 2020 – Docker release 2.3.0.2 which includes the fix for CVE-2020-11492