We do a LOT of disclosures: on average we probably start about one a day. Between us, we spend a man-day or so per week just managing them.

It's painful and time-consuming, particularly when the vendor won’t listen. We get the occasional legal threat, which takes time and money to slap down.

How do we find vulnerabilities?

We find vulnerabilities during client tests. Clients usually ask us to manage the responsible disclosure process on their behalf.

We find stuff while carrying out independent research.

Sometimes people ask us to manage a disclosure for them.

So why do we do it, if it’s painful and costly?

Without a stick of some form, some manufacturers simply won’t bother with security. Whether that stick is bad PR, regulatory enforcement or something else, without an incentive to behave, why would they bother?

There isn’t enough research going on, so hopefully our work will inspire more people to do the same.

It’s good for pushing our own boundaries and tech skills.

Occasionally it generates business for us, but rarely on a scale that would even cover the costs of the research.

As a result of research, lawmakers and regulators sometimes come to us for guidance. It’s good to be able to help them design regulations that actually have the desired effect.

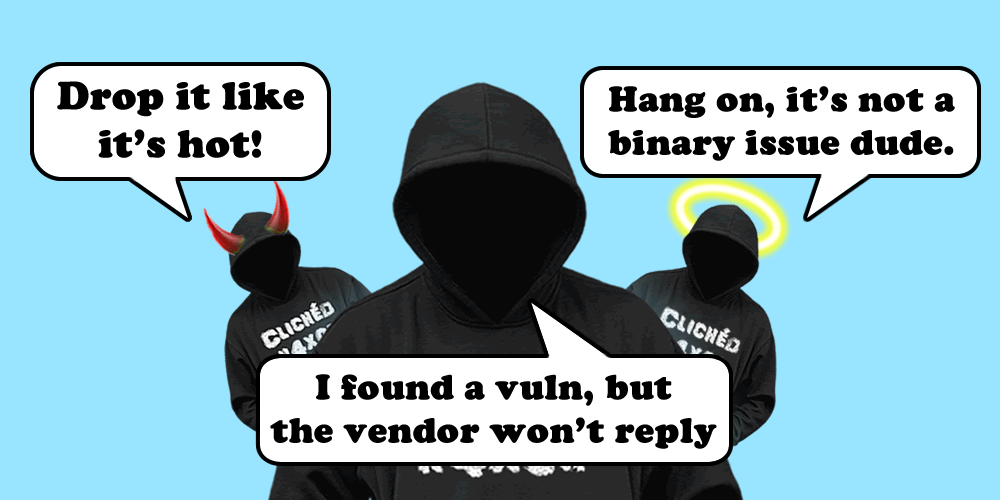

Ethical dilemmas

Along the way we’ve encountered all sorts of ethical dilemmas with disclosure, which have generated extensive discussions internally around how to manage them responsibly.

The answers aren’t clear-cut.

Vendors often make the fatal assumption that we are the first people to find the vulnerabilities, or that vulnerabilities will only ever be found by responsible researchers.

That’s simply not the case. Occasionally we stumble on indicators of compromise showing that we weren’t the first to find an issue.

All too often, the vendor is more concerned about protecting their reputation than protecting their customers’ data.

When a vendor is dealing with a disclosure, “We take security seriously” often translates to “We take our reputation more seriously than the security of our customers’ data”.

We’ve had multiple cases where a vendor could have taken a non-critical service down and protected customer data immediately, but instead left the service up and the data exposed whilst they continued to generate revenue and tried to keep us quiet.

That rarely ends well…

Regulations such as GDPR don’t help the researcher either – without evidence of a breach, there’s no reporting requirement.

Some ethically challenging examples:

Kids’ tracker watch vendor

Vendor X gathers children’s data. We reported an issue to them privately, and they fixed that single issue. Related issues had already been published in the public domain, so we pointed them towards several third parties who could help them solve their wider security problems.

A year later, we found a related issue. We reported it to them, and they fixed that single issue.

Another year later, we found yet another related issue. They clearly hadn’t listened to our earlier advice to get some decent third-party security help, and had no intention of changing their behaviour.

Question: do we drop it as a 0-day?

Which risk is worse?

Risk A: we publish the vulnerability, exposing a route to children’s data.

Or

Risk B: we don’t publish, but the data is already exposed and is found by a less ethical actor.

Variable: the vendor might take all affected systems offline, but only after we publish. Until now they have refused to do so, and we can’t know in advance what they will do on public disclosure.

Irresponsible businesses have failed as a result of breaches. Would we be better off without this vendor?

ICS telemetry vendor

We reported a vulnerability to a vendor of remote outstation telemetry equipment. It exposes industrial controls to trivial compromise.

The vendor responds that the product is out of support, and so washes its hands of any responsibility for vulnerabilities in its code.

There are ~300,000 of these on the public internet, and all of them could be remotely compromised.

Which risk is worse?

Risk A: unethical actor finds the issue first and exploits it, compromising thousands of utilities.

Or

Risk B: we drop the vulnerability publicly and operators rush to take exposed vulnerable systems offline.

Variable: the vendor has no control over the remote devices, so cannot take any action other than publishing firmware updates and alerting customers.

Cruise ship software

A cruise ship software vendor has issues that allow anyone on board with access to any network (even customer Wi-Fi) to compromise some critical systems.

The vendor claims they need 12 months to fix the issues.

Risk A: someone on board or on shore finds the issue in the meantime (it isn’t difficult!), steals customer and card billing data, or hijacks ship control systems (yes, really).

Or

Risk B: we drop the vulnerability publicly and the system is taken down, causing significant disruption to the software vendor’s customers.

Smart parking clamp

A smart parking clamp vendor is exposing S3 buckets and has insecure direct object references (IDORs) that expose the data of drivers who have been clamped. It’s also trivial to unlock any clamp.

They finally respond to our emails after a third party helps us apply some ‘leverage’. The S3 bucket issue is quickly acknowledged and fixed; the IDOR is not. Three months and multiple unacknowledged emails later, it’s still not fixed.

Should we publish?

Risk A: we don’t publish, but data around clamped vehicles and drivers continues to be exposed.

Or

Risk B: we publish the vulnerability and the vendor finally takes action, but their revenue is interrupted whilst the service is down and a fix is implemented.

Variable: this particular clamp had a lot of attention in the press previously, so there are many parties out there who have already ‘tinkered’ with it.

Medtech device

I’m including this one only as an example of some of the extreme ethical edge cases we see from time to time.

We found an API exposed on the public internet. Its methods were published too.

After researching the vendor, we realised the API belonged to a smart medtech device. Further digging revealed that it carried patient stats from which medical decisions were made automatically.

Tamper with the stats and you kill people.

Risk A: keep quiet, pray the vendor responds and no-one else finds the issue in the meantime.

Or

Risk B: we drop it publicly and someone else kills people using the API, either not realising or not believing our findings.

Yeah, there was no decision to be made there. Fortunately, the vendor replied within minutes and fixed it within an hour.

Conclusion

Ethical and responsible disclosure decisions aren’t as clear-cut as they often first appear. When you’re working through over 200 disclosures a year, some of them aren’t going to fit the ‘norm’.

We feel that the primary driver for disclosure should be to protect the consumer. This may require vendors to change behaviour and actually ‘take security seriously’ rather than pay lip service to that phrase.

It may require some vendors to take some very bitter medicine too. We’ve seen numerous vendors change, going from dealing badly with researchers and disclosure to working with them excellently.

Be a good vendor.