Introduction

This guide deals with threat modelling and early stages of development so that security issues and controls are identified before committing to manufacturing. Current attack methods, and the pitfalls we find in embedded designs, have been highlighted so that a finished product is as secure as it can be. This also makes testing and validation straightforward.

This guide is not just for technical developers, but also for project managers and business analysts involved in product creation. Security is not binary or absolute. Business decisions will need to be made as to whether extra costs are worthwhile in a secure software development life cycle.

Table of contents

1. Threat Modelling

1.1. Why threat modelling is important

1.2. Ten-Step Design Cycle

2. Frameworks

2.1. IoT Design Frameworks

2.2. Legal Frameworks

3. Cryptography

3.1. Transport Layer Security (TLS)

3.2. Public Key Infrastructure (PKI)

3.3. Signing

3.4. Secure Boot

3.5. Cloud Onboarding

3.6. Algorithm Selection

3.7. Random Number Generation

3.8. Identity

4. Hardware Interfaces

4.1. Serial UART

4.2. JTAG / SWD

4.3. SPI and I2C

4.4. SD and eMMC

4.5. eFuses And Code Readout Protection

5. User Interfaces

5.1. Wi-Fi

5.2. Ethernet

5.3. BLE

5.4. NFC / RFID

5.5. USB

5.6. General Considerations

6. Microcontroller Selection

7. Code Quality

7.1. Cloud CI/CD

7.2. Coding Best Practices

7.3. Releases

8. Cloud Ecosystem

9. Mobile Applications

10. Validation And Testing

11. Conclusions And Checklists

1. Threat Modelling


Threat modeling works to identify, communicate, and understand threats and mitigations within the context of protecting something of value.

A threat model is a structured representation of all the information that affects the security of an application. In essence, it is a view of the application and its environment through the lens of security.

Threat modeling can be applied to a wide range of things, including software, applications, systems, networks, distributed systems, Internet of Things (IoT) devices, and business processes.


OWASP https://owasp.org/www-community/Threat_Modeling

1.1. Why threat modelling is important

Generally, it is better to follow the “shift left” principle when developing for embedded devices. Shifting left means identifying potential issues as early in a development cycle as possible. This matters because embedded devices are an intersection of software and hardware, and hardware choices are difficult to change once committed.

For example, if the output of threat modelling is that a secure element (SE) is needed, or AES cryptographic algorithms are required, and the design hardware doesn’t have these features, then it can be difficult to recover to a secure product.

Threat modelling seeks to break down a product into constituent components and assets, identify potential attackers and their goals, develop attack paths, and then calculate and treat these risks. There are a number of threat modelling techniques and frameworks, and some can be more appropriate than others.

1.2. Ten-Step Design Cycle

Figure 1: Ten-Step Design Cycle

This ten-step model is derived from the OWASP threat methodology (https://owasp.org/www-community/Threat_Modeling_Process) and is intended to be a constant process of cyclical design improvement.

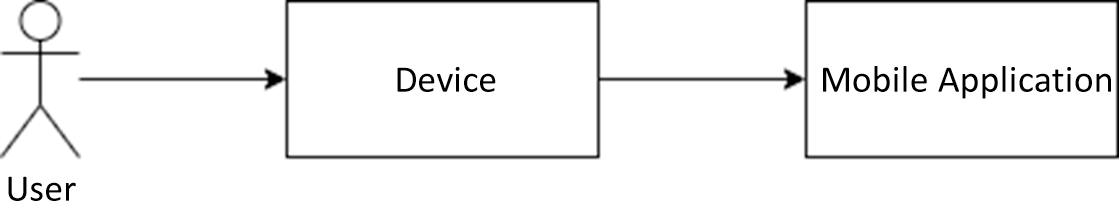

Step 1: Describe design

As early as practical in the product lifecycle a full description of the device and its intended usage should be made available. Schematics, code outlines, dependencies, and bills-of-materials should start to be presented even if they are not yet set in stone.

User stories and use cases can be helpful at this step to describe what an eventual device would look like.

Granular identification of all elements is not necessary, but Step 1 should include broad components such as the device itself, mobile applications, cloud services, etc.

Figure 2: Example high level design description

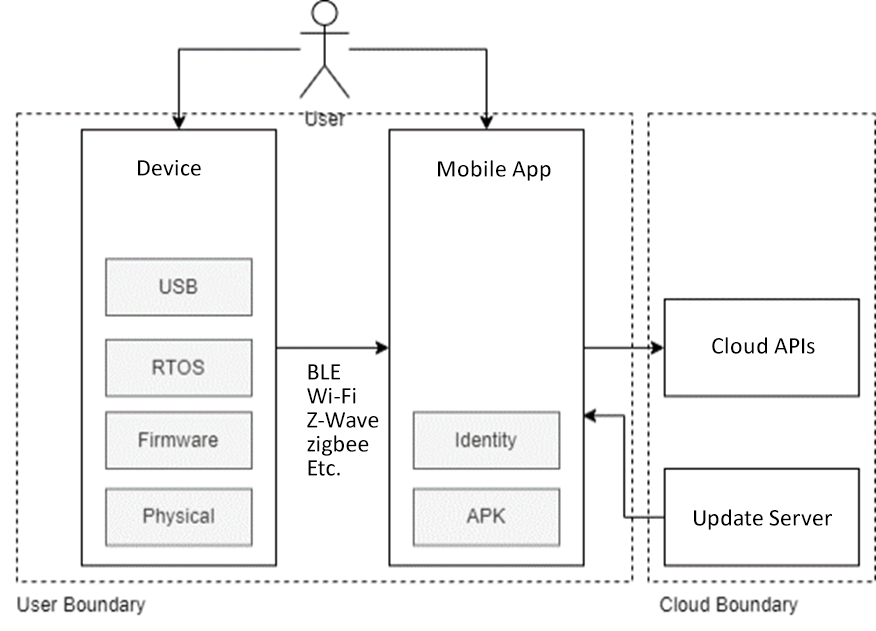

Step 2: Decompose

Step 2 starts to add more detail to the skeletal design from Step 1. This will seek to understand the device and how it interacts with all external entities. By identifying all entry and exit points, data flows, and external dependencies this can help identify areas that are potentially susceptible to an attacker.

Trust boundaries should be added around groups of the same security attributes (e.g., cloud components, or items that are within the physical control of an attacker) which can later be used to apply requirements and controls.

Figure 3: Example decomposed system

Step 3: Assets and goals

This stage looks to determine what is of value in the system, and hence what an attacker’s goals might be. There is no concrete method to follow as it will rely on contents of the decomposed design from Step 2, but typical examples might include the following:

- Intellectual property in the device firmware.

- Cryptographic keys on the device or pod.

- A user’s login identity from the mobile app.

- Steal keys or data to make counterfeit pods.

- Deploy malicious firmware.

- Target users via cloud APIs.

- Obtain admin access to cloud platforms and services.

- An insider sells access to software or keys.

- An insider inadvertently publishes customer data to a public cloud storage bucket.

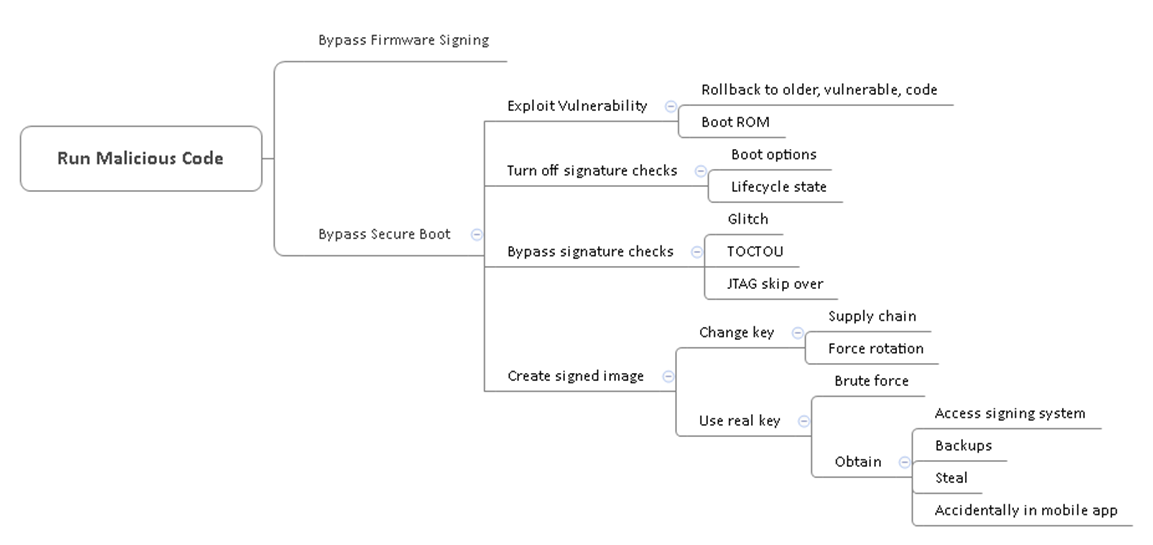

Step 4: Develop attacks

For each of the goals in Step 3, create attack trees which start with the generic threat and lead to specific vulnerabilities. Mind maps can be a good way to collaboratively develop this phase within a threat modelling workshop.
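An attack tree can also be captured in a simple data structure so that it is easy to review and extend after a workshop. The following is a minimal sketch in Python; every node name in it is a hypothetical illustration rather than a finding from a real product.

```python
# Hypothetical attack tree: every node name below is illustrative only.
attack_tree = {
    "Run malicious code on the device": {
        "Replace firmware image": {
            "Firmware update is not signed": {},
            "Signing key leaked from build server": {},
        },
        "Gain debug access": {
            "JTAG/SWD left enabled in production": {},
        },
    }
}


def attack_paths(tree, prefix=()):
    """Yield every root-to-leaf path as a tuple of node names."""
    for name, children in tree.items():
        path = prefix + (name,)
        if children:
            yield from attack_paths(children, path)
        else:
            yield path


paths = list(attack_paths(attack_tree))
for path in paths:
    print(" -> ".join(path))
```

Each printed line is one attack path, running from the generic threat at the root to a specific vulnerability at the leaf, which can then be carried forward into Steps 5 to 7.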

Figure 4: Example attack tree for running malicious code

Step 5: Derive security requirements

This is often where business policies and organisational requirements start to factor in: Is there an obligation to protect intellectual property (IP) in the firmware of devices?

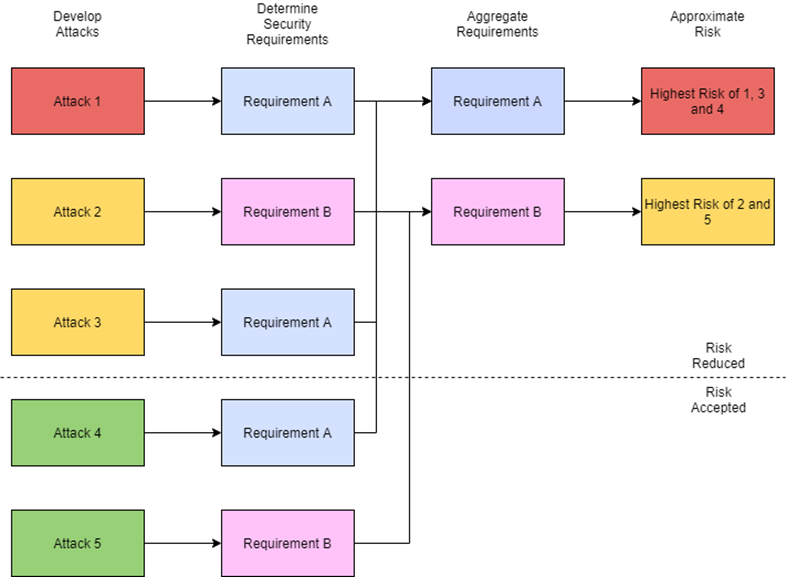

Step 6: Aggregate

Once the security requirements in Step 5 have been decided, they can then be mapped to goals and attacks from Steps 3 and 4. For example:

Goal/Attack: Third-party pods are unsafe, so only allow genuine pods to be used:

Requirement A: Protect security keys

Requirement B: Only run signed firmware

Goal/Attack: Stop counterfeits or clones, so protect firmware IP:

Requirement B: Only run signed firmware

Requirement C: Disable debug access

Goal/Attack: Follow local regulations for personal data, so secure cloud accounts:

Requirement D: Follow OWASP Top 10 in web applications

Requirement E: Follow CIS benchmarks for cloud hardening

Different attacks will now overlap in the security requirements they share, which reduces the overall list to a smaller, aggregate set of requirements. In the sample above Requirement B is shared by two attack chains.
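The aggregation can be sketched in a few lines of Python. The goals and requirements are taken from the sample above; the function and variable names are assumptions made for illustration.

```python
# Goals and requirements copied from the sample above.
goal_requirements = {
    "Only allow genuine pods": [
        "A: Protect security keys",
        "B: Only run signed firmware",
    ],
    "Protect firmware IP": [
        "B: Only run signed firmware",
        "C: Disable debug access",
    ],
    "Secure cloud accounts": [
        "D: Follow OWASP Top 10 in web applications",
        "E: Follow CIS benchmarks for cloud hardening",
    ],
}


def aggregate(mapping):
    """Invert goal -> requirements into requirement -> goals it supports."""
    result = {}
    for goal, requirements in mapping.items():
        for requirement in requirements:
            result.setdefault(requirement, []).append(goal)
    return result


aggregated = aggregate(goal_requirements)
shared = sorted(r for r, goals in aggregated.items() if len(goals) > 1)
print(len(aggregated), "unique requirements; shared:", shared)
```

Six listed requirements reduce to five unique ones, and the requirements that support several goals are natural candidates to prioritise.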

Figure 5: Security requirement aggregation

Step 7: Calculating risk

There are many different techniques for calculating potential risks, and each of these metrics has its advantages and disadvantages. What is important is that they are applied consistently within a design lifecycle that works for the business.

CVSS

CVSS is typically used to score an identified vulnerability, and as such is frequently used when vendor patches are released. There are different algorithms (3.1 is the latest stable version) and a weighting is assigned in different areas – for example does the vulnerability require an attacker to be physically present, or can they be situated anywhere on the network.

CVSS is less frequently used during design phases of embedded devices as a vulnerability must exist, it doesn’t measure risk directly, and doesn’t help with any future likelihood.

Figure 6: CVSS risk ratings

Risk Matrix

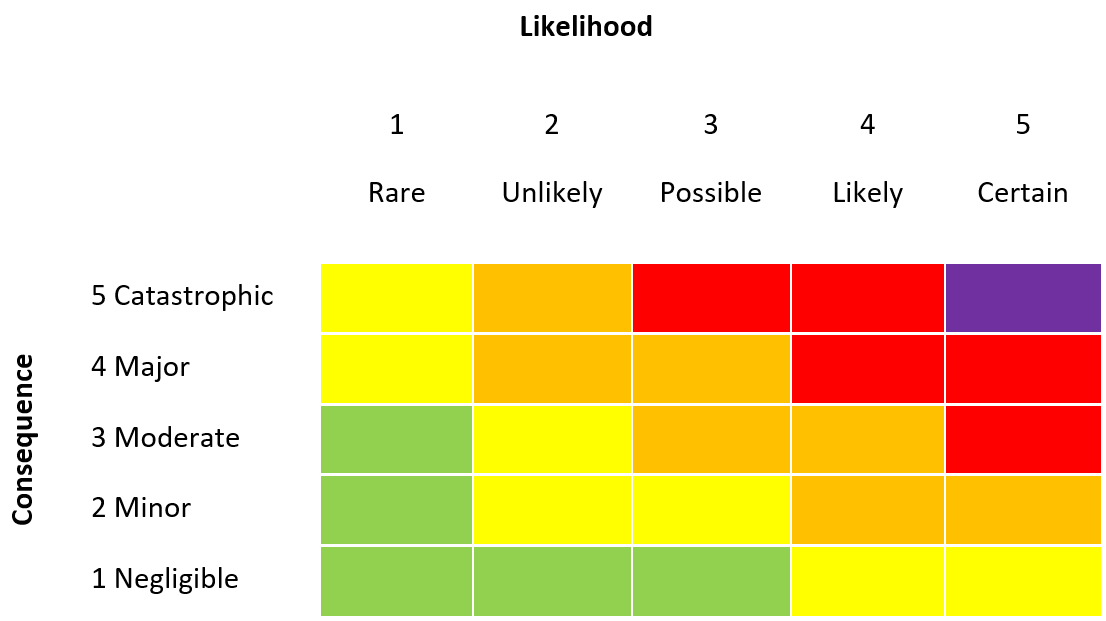

The consequence versus likelihood risk model:

Figure 7: Risk matrix

Whilst this does give a good approximation, the values can be somewhat subjective, and they are more open to interpretation (as there is no algorithm behind it). This risk model is commonly used in IoT and cloud systems and is perfectly acceptable for most organisations if there is consistent application of the consequence and likelihood metrics. It may be that actual numbers need to be applied: “catastrophic” equates to $10mn loss, “certain” means a chance of this happening in the next month etc.
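One way to keep the matrix consistent between assessors is to encode it. The following Python sketch multiplies a consequence level by a likelihood level; only “catastrophic” and “certain” come from the text above, and the remaining labels, the five-point scales, and the rating bands are assumptions that each organisation would replace with its own.

```python
# Hypothetical five-point scales; only "catastrophic" and "certain" appear in
# the text above, the remaining labels and the rating bands are assumptions.
CONSEQUENCE = ["negligible", "minor", "moderate", "major", "catastrophic"]
LIKELIHOOD = ["rare", "unlikely", "possible", "likely", "certain"]


def risk_rating(consequence, likelihood):
    """Return (score, band) for a consequence/likelihood pair."""
    score = (CONSEQUENCE.index(consequence) + 1) * (LIKELIHOOD.index(likelihood) + 1)
    if score >= 20:
        band = "critical"
    elif score >= 12:
        band = "high"
    elif score >= 6:
        band = "medium"
    else:
        band = "low"
    return score, band


print(risk_rating("catastrophic", "unlikely"))  # (10, 'medium')
print(risk_rating("major", "likely"))           # (16, 'high')
```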

STRIDE

STRIDE was created by Microsoft, although it has fallen out of favour. It is a mnemonic for classifying vulnerabilities rather than assigning risk per se, but is still helpful when thinking about attacks and risks.

- Spoofing

- Tampering

- Repudiation

- Information Disclosure

- Denial of Service

- Elevation of Privilege

DREAD

DREAD is again a mnemonic but with a calculation behind it.

- Damage

- Reproducibility

- Exploitability

- Affected Users

- Discoverability

A value between 0 and 10 is assigned to each area, then an average taken to give an overall score out of 10. Many people find the ‘discoverability’ score to be highly subjective and leave it out entirely.

As an example, an authorisation bypass is found in a mobile application API that allows an attacker to discover and download age verification documents, including passports, for all users of the platform. An attacker threatens to release the data but the vulnerability is quickly fixed. This leads to regulatory disclosures to the Information Commissioner’s Office and fines for mishandling of sensitive data:

Damage = 9

Reproducibility = 10

Exploitability = 10

Affected Users = 10. (Ignoring Discoverability.)

Total = 39

Average = 39 / 4 = 9.75
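The same calculation can be written as a short Python function, which makes it easy to score many findings consistently. The function name and dictionary keys are assumptions; the values are those from the example above, with Discoverability left out.

```python
def dread_score(ratings):
    """Average the supplied DREAD ratings, each of which must be 0 to 10."""
    for name, value in ratings.items():
        if not 0 <= value <= 10:
            raise ValueError(f"{name} must be between 0 and 10")
    return sum(ratings.values()) / len(ratings)


ratings = {
    "damage": 9,
    "reproducibility": 10,
    "exploitability": 10,
    "affected_users": 10,
}
print(dread_score(ratings))  # 9.75
```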

Step 8: Treat risk

Regardless of the risk calculation method chosen, a business decision then has to be made around the value below which risks will simply be accepted (e.g. lows and mediums), and which will need mitigations, compensating controls, or security measures deployed (highs and criticals).

It should also be kept in mind that some attack chains can include several medium-only severities yet result in compromise if all steps were to be successful. For example: a user enumeration vulnerability, a weak password policy, and a lack of brute force protection and lockout can still lead to an attacker gaining access to an account. None of these vulnerabilities is a direct risk on its own, but combined they lead to a higher overall risk level.

Step 9: Implement security

From the aggregate security requirements, and those risks that need to be treated, this stage implements the security controls to address them.

For example, if a cryptographically secure identity is required on the device, then the security controls might be:

- Include a secure element.

- Use AES encryption.

- Cloud provision using PKI.

Step 10: Update design

The design, bill of materials, code, and implementation should now be updated to reflect the chosen security controls.

As this is a constant process, any changes or additions to functionality, or publicly disclosed vulnerabilities (for example code readout protection bypasses in a chosen MCU family) should cause the cycle to be revisited.

Manufacturer feeds for vulnerabilities should be sent to the development team so they can be made aware of any issues (if RSS is available this can be added into Teams directly), along with general awareness of current trends and techniques in microcontroller security.

2. Frameworks

2.1. IoT Design Frameworks

Many global jurisdictions are now introducing legislation governing connected internet-of-things (IoT) devices, with the aim of making them secure by design.

Presently much of this legislation is lightweight but the expectation is that this will increase over time.

As with many legal obligations there can be nuances and grey areas which PTP cannot directly advise on, and this section is therefore for information and consideration only; local legal advice should be sought before developing for or selling into a jurisdiction.

UK IoT code of practice (CoP)

The DCMS (Department for Digital, Culture, Media and Sport) introduced the UK IoT CoP https://www.gov.uk/government/publications/code-of-practice-for-consumer-iot-security in 2018 and it was expected that this would be codified into law, possibly via the Online Safety Bill, but this is yet to happen.

The CoP includes the following recommendations for manufacturers:

- No default passwords.

- Have a vulnerability disclosure policy (VDP).

https://www.pentestpartners.com/security-blog/when-disclosure-goes-wrong-people/

- Have a software/firmware update mechanism.

- Securely store credentials and other sensitive data.

- Encrypt in transit.

- Minimise attack surfaces.

- Verify software integrity.

- Protect personal data (GDPR).

- Make systems resilient to outages.

- Monitor system telemetry.

ETSI EN 303 645

ETSI produced non-binding guidance for consumer IoT manufacturers in EN 303 645 https://www.etsi.org/deliver/etsi_en/303600_303699/303645/02.01.01_60/en_303645v020101p.pdf and this follows the UK IoT CoP with the addition of:

- Allow deletion of personal data.

- Make installation and maintenance easy.

- Validate input.

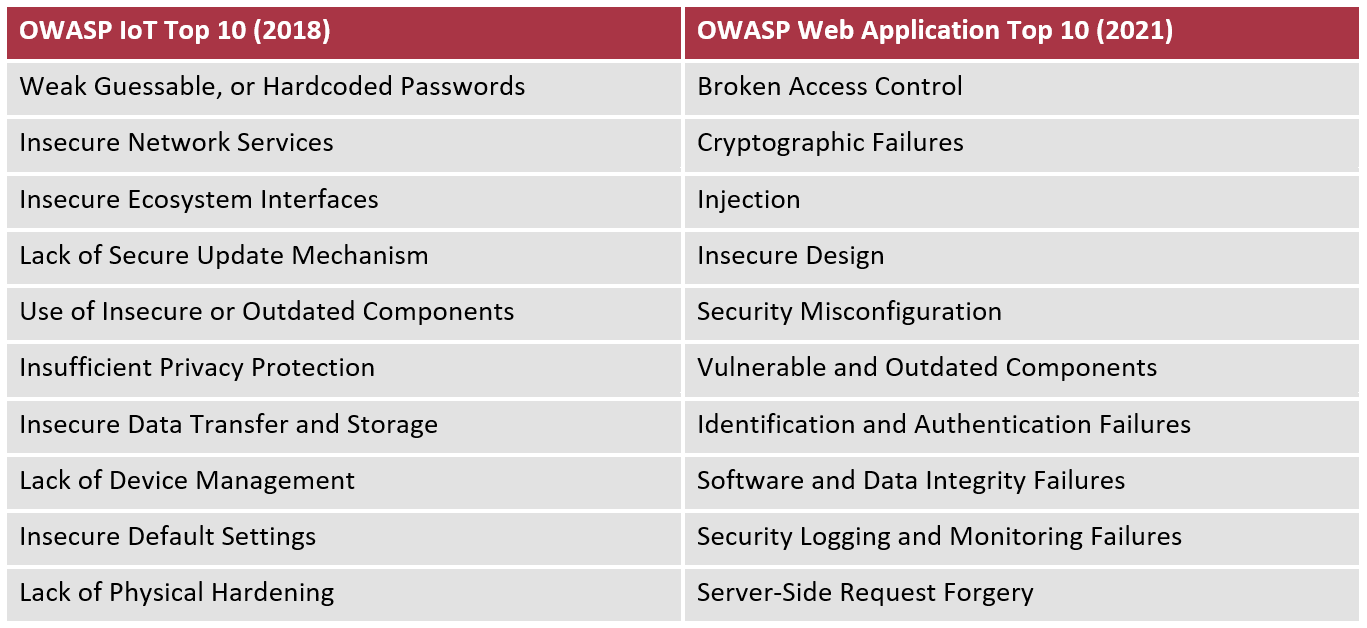

OWASP Project

OWASP https://owasp.org/ are well known for producing “Top 10” risks to various projects including web applications, APIs, and IoT, although the latter has not been updated for some time. These are the most exploited issues affecting these areas such as broken access control, and default credentials.

Table 1: Example OWASP project findings

2.2. Legal Frameworks

EU Cyber Resilience Act

The EU is introducing new regulations on products with “digital elements” https://digital-strategy.ec.europa.eu/en/library/cyber-resilience-act with the expectation that this will become law in 2024. Whilst still in a state of flux the security requirements listed in Annex I https://ec.europa.eu/newsroom/dae/redirection/document/89544 are broadly similar to UK CoP and ETSI EN 303 645.

3. Cryptography

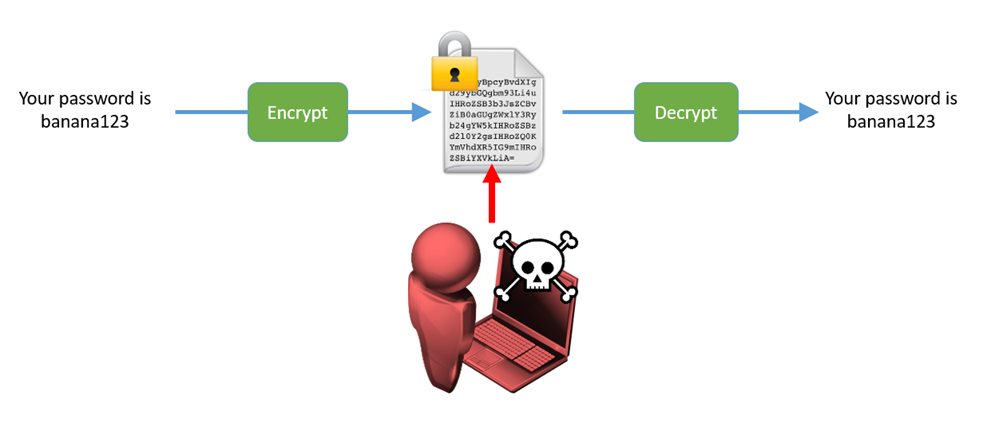

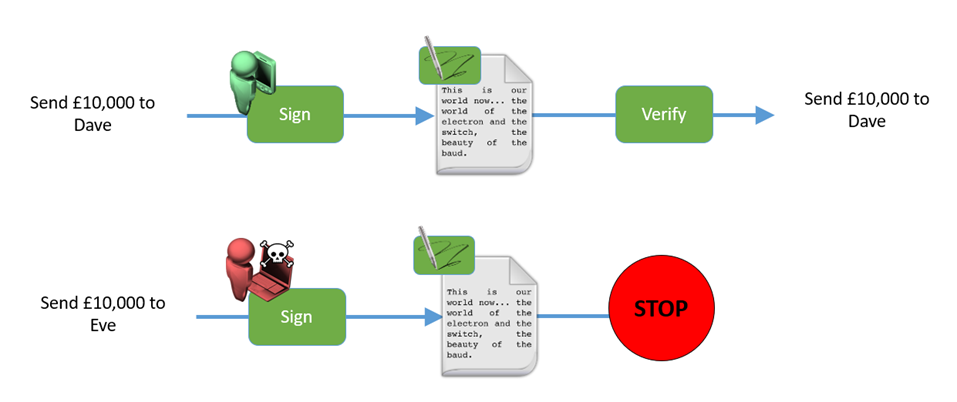

Cryptography can be used for two main purposes in embedded systems: confidentiality and authenticity.

Confidentiality stops someone from reading the content of a message:

Figure 8: Encryption and decryption. An attacker in the middle cannot read the plaintext of a message

Authenticity proves that a message comes from a genuine entity:

Figure 9: Authorising a bank transfer

Encryption (confidentiality) and signing (authenticity) are frequently conflated.

Encryption is important when:

- Sending a password.

- Stopping competitors stealing intellectual property.

- Protecting users’ data.

Signing is important when:

- Unlocking a car with a key fob.

- Verifying that firmware genuinely came from the intended source.

- Verifying that data came from a given device.

- Mutually authenticating between devices or servers.

However, in embedded systems authenticity is often more important than confidentiality.

It is also a common meme in information security to “never roll your own crypto” – meaning that there are many publicly documented algorithms (such as AES) that have been peer reviewed and are considered safe. Cryptography is an immensely complicated topic and an original mechanism is likely to have flaws in it. This is not to say that a custom mechanism can never be justified, but all possible alternatives must have been explored first.

Figure 10: Kerckhoffs’ Principle

The 19th Century Dutch cryptographer Auguste Kerckhoffs laid the foundations of modern cryptography by stating that a cryptographic system should remain secure even if everything about it, except the key, is public knowledge. The same tenet can be applied more broadly to embedded systems: eventually, by some means, it is highly likely that the firmware will be recovered for a device.

Any system should be able to withstand an attacker statically and dynamically analysing a device and its code to reverse engineer its operation. Where secret material is relied on, to encrypt or verify data, these should be individual to a device so they cannot be reused to compromise other units or services.

Additional security can be provided by secure elements or enclaves (SEs), or trusted platform modules (TPMs). These are present on most modern mobile devices and computers and store card information or BitLocker encryption keys.

3.1. Transport Layer Security (TLS)

TLS is a cornerstone of the modern web and for web applications and mobile APIs the advice is fairly straightforward: use the strongest protocols and suites possible https://wiki.mozilla.org/Security/Server_Side_TLS. This means using TLS 1.3, key exchanges that provide perfect forward secrecy (ECDHE), authenticated cipher suites (AES GCM mode, CHACHA20_POLY1305), and elliptic curve certificates (P-256).

However, in practice not every single client will support such strong ciphers and some accommodation may need to be made for older versions of Android for example. Understanding the distribution of legacy clients is important for some markets – too high a security level and the number of users is limited, too low and vulnerabilities may be present.
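As an illustration of how the protocol floor is typically set in code, the following minimal sketch uses Python’s standard `ssl` module on the client side. The function name and the `allow_tls12` parameter are assumptions for this example, and enforcing TLS 1.3 needs a Python build linked against a TLS library that supports it.

```python
import ssl


def make_client_context(allow_tls12=False):
    """Build a client-side TLS context with certificate checks enabled."""
    # The default context verifies the certificate chain and the hostname.
    context = ssl.create_default_context()
    # Refuse anything older than the chosen protocol version.
    context.minimum_version = (
        ssl.TLSVersion.TLSv1_2 if allow_tls12 else ssl.TLSVersion.TLSv1_3
    )
    return context


strict = make_client_context()
legacy = make_client_context(allow_tls12=True)
print(strict.minimum_version.name, legacy.minimum_version.name)
```

The same `minimum_version` attribute exists on server-side contexts, which is where an accommodation for legacy clients would normally be made.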

Whilst the above is true for modern operating systems, the same cannot be said for many low power embedded devices. To save space and power, many MCUs do not have support in hardware for computationally expensive algorithms like AES. For example, ARMv8-A and later processors can include AES instructions, but earlier ARM architectures do not https://en.wikipedia.org/wiki/AES_instruction_set#Hardware_acceleration_in_other_architectures.

Lower bandwidth protocols like BLE (see 5.3 BLE) may also struggle with a full TLS handshake and it is better to use the built-in bonding mechanism.

TLS itself is therefore not the solution for all encrypted communications over untrusted carriers but should be a default starting point, especially for mobile application to cloud communications.

3.2. Public Key Infrastructure (PKI)

PKI is related to TLS in some ways, in that it is used to prove the authenticity of a remote server. There is little point in allowing a web browser to connect to an attacker who can decrypt and re-encrypt on the fly, when using internet banking.

PKI uses a hierarchical model of trust: “root” certificate authorities are baked into browsers, and these roots in turn issue signing capabilities to “intermediate” certificate authorities. A device then generates a public and private keypair, and submits the public key for signing by the intermediate, which establishes this chain of trust.

There is nothing to say that a private PKI cannot be used – enterprises frequently have their own certificate authorities that attest against internal certificates. As long as there is a chain of trust, browsers will still see these as valid. Embedded devices will also need to be told what root certificates are valid, otherwise they will often blindly trust any certificate presented to them by an attacker.

Private PKIs are used in aerospace to sign software on aircraft known as loadable software aircraft parts (LSAPs). On safety critical systems it is vital that firmware comes from a known good source.

3.3. Signing

Signing is typically used in IoT in two main ways: to prove data came from a device (e.g. temperature readings from a connected thermostat), or to prove that a firmware update has come from an official source.

In the first case, techniques such as a hash-based message authentication code (HMAC) https://en.wikipedia.org/wiki/HMAC are used to generate a one-way hash of a value and a secret key. The secret key is shared by both parties, so given the value (“it is 20 °C”) the receiver can compute the same HMAC to prove that the sending party knew the same secret key.
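A minimal sketch of this exchange using Python’s standard `hmac` module is shown below. The key, the message format, and the function names are hypothetical; in a real product the key would be unique per device and held in protected storage.

```python
import hashlib
import hmac

# Hypothetical shared secret; in practice this would be unique per device.
SECRET_KEY = b"example-per-device-secret"


def sign_reading(key, message):
    """Return the HMAC-SHA256 tag for a message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_reading(key, message, tag):
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign_reading(key, message), tag)


reading = b"temperature=20C"
tag = sign_reading(SECRET_KEY, reading)
print(verify_reading(SECRET_KEY, reading, tag))             # True
print(verify_reading(SECRET_KEY, b"temperature=35C", tag))  # False
```

The comparison uses `hmac.compare_digest` rather than `==` so that the check does not leak timing information about how many bytes matched.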

Firmware signing should typically use PKI. When the firmware file is created, a private key is used to generate a signature. When the firmware is being loaded, the corresponding public key is used to verify the signature against the file. If verification succeeds, the firmware can be loaded. If it fails, then the firmware has been modified and should be rejected.

Frequent mistakes have been found in firmware verification processes in the past that do not use PKI:

- CRCs (cyclic redundancy checks) use a known algorithm to compute a value over part or all of a file. However, an attacker can simply modify the firmware and recompute the CRC alongside it. CRCs give some assurance of integrity in transmission, but little else, and should not be relied on to verify firmware.

- Other implementations PTP have seen use a password protected zip file to encrypt firmware contents. The running software on the device has a copy of this password and can then extract and apply the contents. This means that all devices share the same password and, if obtained, it means that an attacker can simply create their own passworded zip. Recovering from a disclosure like this is extremely difficult as it would need all devices’ update mechanisms to be changed near simultaneously.
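The CRC weakness in the first point can be demonstrated in a few lines of Python. This is a sketch of a hypothetical update format that appends a CRC32 to the image; the packaging layout and function names are assumptions and do not describe any specific product.

```python
import zlib


def crc_package(firmware):
    """Append a CRC32 of the firmware, as a naive update format might."""
    return firmware + zlib.crc32(firmware).to_bytes(4, "big")


def crc_check(package):
    """Device-side check: does the trailing CRC32 match the contents?"""
    firmware, crc = package[:-4], package[-4:]
    return zlib.crc32(firmware).to_bytes(4, "big") == crc


genuine = crc_package(b"genuine firmware image")
# An attacker needs no secret to build a package that passes the check.
tampered = crc_package(b"malicious firmware image")
# Accidental corruption in transit is detected, which is all a CRC is for.
corrupted = bytes([genuine[0] ^ 0x01]) + genuine[1:]

print(crc_check(genuine), crc_check(tampered), crc_check(corrupted))  # True True False
```

The check catches the accidental bit flip but accepts the attacker’s image, because nothing in the calculation depends on a key that only the manufacturer holds.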

3.4. Secure Boot

Related to secure firmware updates, secure boot verifies in a similar way that a firmware blob has been signed, at each and every boot. The signature chains back to a valid certificate authority or to a public key held on the device (as the device may be offline and unable to verify a whole chain).

Embedded Linux devices typically have multiple stages to boot, starting from a first stage boot ROM, handing off to a second stage like U-Boot, before starting the full Linux kernel. Secure boot can be applied to some or all of these stages. Some STM32 families (and other ARM SoCs) also support secure boot through ARM TrustZone (https://documentation-service.arm.com/static/5fae7507ca04df4095c1caaa?token= opens PDF).

Secure boot stops an attacker from replacing parts of the non-volatile memory with their own, to gain further insight into a running system or to bypass other security features. Each stage should validate the next.

Vulnerabilities like time-of-check-time-of-use (TOCTOU) and other bypasses in signing and secure boot have been found and it is important to check for the presence of unfixable issues during development, and in ongoing awareness.

3.5. Cloud Onboarding

When manufacturers have massive fleets of IoT devices to enrol into a cloud platform (typically cameras or smart speakers) a light-touch or zero-touch process is required https://learn.microsoft.com/en-us/azure/iot-dps/about-iot-dps. The IoT device is provisioned in the factory with a base identity certificate. When it connects to the cloud for the first time, it is provisioned, and per-device certificates are issued such that the device can now prove its own identity and tie that to a user’s account.

This process generally requires supported architectures and SoCs that have approved base certificates pre-installed.

3.6. Algorithm Selection

AES and SHA-256 (part of the SHA-2 family) are the most used cryptographic algorithms. However, AES can be run in a number of different modes for different purposes, and some are no longer considered safe to use.

ECB (electronic code book) and CBC (cipher block chaining) both have flaws which can lead to recovery of plaintext. This is famously demonstrated in the ECB encrypted “Tux” penguin https://en.wikipedia.org/wiki/Block_cipher_mode_of_operation#Electronic_Codebook_(ECB):

Larry Ewing and The GIMP, CC0, via Wikimedia Commons

Figure 11: Tux ECB from Larry Ewing

AES GCM (Galois/Counter Mode) should be the default option for symmetric-key encryption. Many lower-powered devices may need to use hardware support of the correct cipher modes to process this quickly enough, but in many other cases software implementation should be adequate.

The hashing algorithms MD5 and SHA-1 are now deprecated. MD5 collisions (where an attacker creates their own file but with the same hash) can be produced on ordinary hardware, whereas SHA-1 collisions still need significant processing power. For high value targets and nation states this is within current reach, so the advice is now to use SHA-2 (SHA-256) instead.

3.7. Random Number Generation

A cornerstone of many cryptographic algorithms is the use of a random number to add additional entropy, or as part of a challenge and response handshake. Whilst software alone cannot produce truly random numbers, cryptographically secure pseudo random number generators (CSPRNGs) and hardware random number generators are built into many operating systems and MCUs https://www.arm.com/products/silicon-ip-security/random-number-generator.

These features and libraries should be used where any cryptography is used. Insecure functions like rand() must not be used.
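The same distinction exists in most languages. As a minimal sketch in Python, the standard `secrets` module draws from the operating system’s cryptographically secure generator, whereas the `random` module, like C’s rand(), is predictable and unsuitable for security use. The function name and the 16-byte default are assumptions for this example.

```python
import secrets


def new_challenge(num_bytes=16):
    """Return an unpredictable challenge value from the OS CSPRNG."""
    return secrets.token_bytes(num_bytes)


challenge = new_challenge()
print(challenge.hex())
```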

3.8. Identity

When devices identify themselves to the cloud, this should be in a secure manner to prevent spoofing and impersonation. Many manufacturers use MAC addresses, serial numbers, or other incrementing values that are easy to guess. Common attacks involve substituting the identity to access other users’ data or to inject malicious data.

Device identity should ideally be supplied as a certificate and key which is stored in a secure location (such as a secure element or TPM) on the device. Other mechanisms are possible but need careful consideration.

4. Hardware Interfaces

The aim of a local attacker is twofold: recover static firmware from the device, and observe the device dynamically running either through a shell or via an attached debugger.

Embedded systems in the hands of a user often have debugging or programming interfaces available, even if they are not overtly labelled as such. The first goal is therefore to identify any potential headers, test points, vias, or other routes into these interfaces.

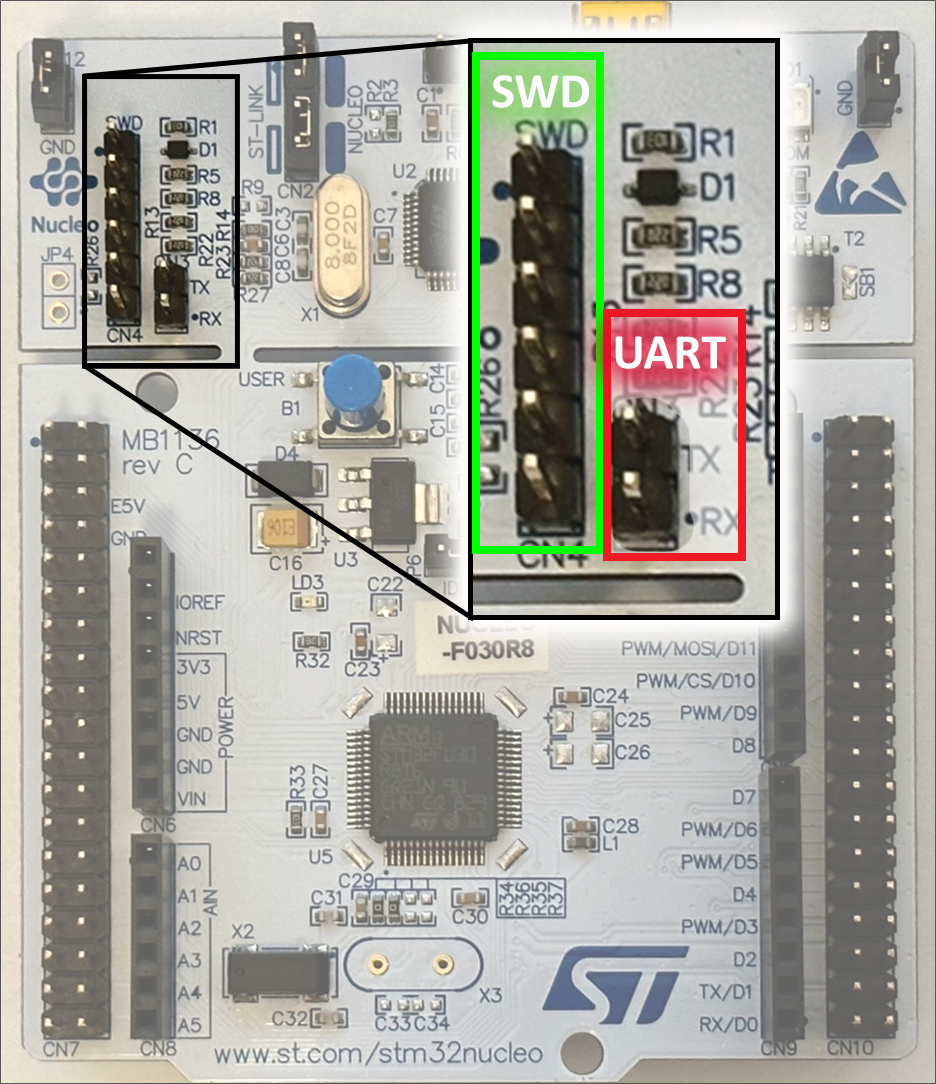

Figure 12: STM32 Nucleo development board – ST Link at top, ARM MCU at bottom

4.1. Serial UART

Serial UART is a common way of programming STM32 and Arduino devices, especially during development phases. Although Figure 12 above has a labelled header present on the board, this is generally not so on production devices. Even if test pads are not present, a datasheet will often give the pins that UART is expected on, and the traces can be followed from there.

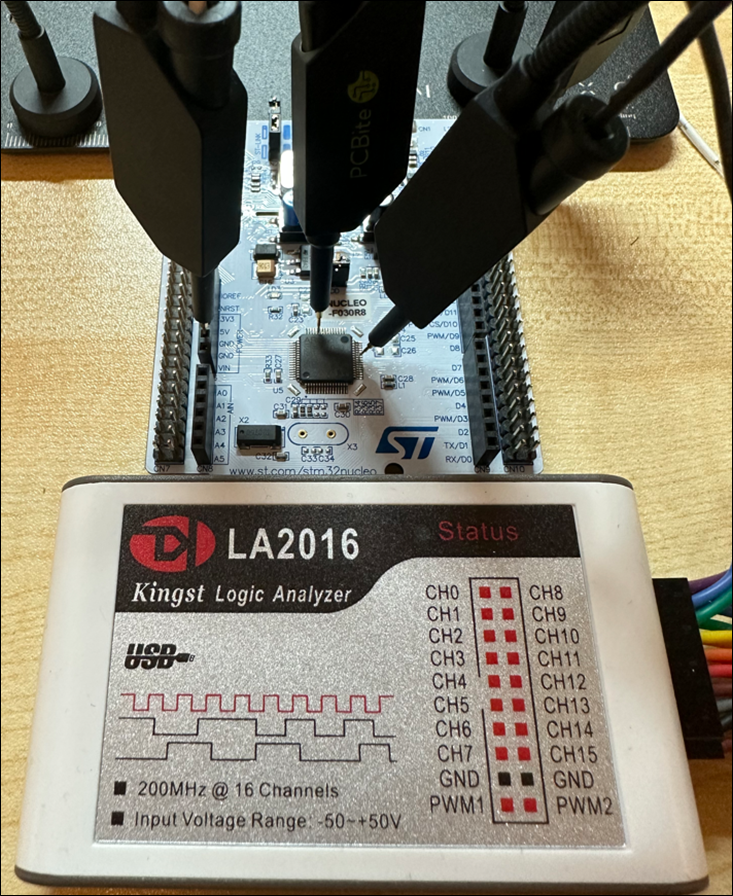

UART can be found with a logic analyser very easily. Logic analysers can be connected to multiple pins simultaneously and attached software can interpret the signals on each pin.
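To show why UART is so easy to interpret once captured, the following Python sketch decodes the common 8N1 framing (one start bit, eight data bits sent least significant bit first, one stop bit) from a list of sampled line levels. It assumes an idealised capture with a known number of samples per bit; the function names are hypothetical and real analyser software also handles baud rate detection and noise.

```python
def decode_8n1(samples, samples_per_bit):
    """Decode 8N1 UART bytes from a list of 0/1 line samples (idle high)."""
    decoded = []
    i = 0
    frame_len = 10 * samples_per_bit  # start bit + 8 data bits + stop bit
    while i + frame_len <= len(samples):
        if samples[i] == 1:  # line idle, keep looking for a start bit
            i += 1
            continue
        centre = i + samples_per_bit // 2  # sample in the middle of each bit
        bits = [samples[centre + n * samples_per_bit] for n in range(10)]
        if bits[9] == 1:  # valid stop bit
            value = sum(bit << n for n, bit in enumerate(bits[1:9]))  # LSB first
            decoded.append(value)
            i += frame_len
        else:
            i += 1  # framing error, try to resynchronise
    return bytes(decoded)


def encode_8n1(data, samples_per_bit):
    """Build an idealised sample stream for exercising the decoder."""
    samples = [1] * samples_per_bit  # idle period before the first frame
    for byte in data:
        bits = [0] + [(byte >> n) & 1 for n in range(8)] + [1]
        for bit in bits:
            samples.extend([bit] * samples_per_bit)
    samples.extend([1] * samples_per_bit)  # idle period after the last frame
    return samples


print(decode_8n1(encode_8n1(b"login:", 4), 4))  # b'login:'
```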

Figure 13: Logic analyser and probes

On ARM devices serial access may permit code readout from the internal flash, whereas on a more fully featured embedded Linux this may give an interactive shell.

4.2. JTAG / SWD

Unlike serial UART, which uses only two lines (plus ground), JTAG uses four or five pins. It is also an active protocol which needs signals to be sent in before responses are sent back. Locating JTAG on an unlabelled board can therefore be a time consuming and frustrating task.

- TCK: Test Clock

- TMS: Test Mode Select

- TDI: Test Data-In

- TDO: Test Data-out

- TRST: Test Reset (Optional)

JTAG does however give much lower-level access to the microcontroller and other peripherals on the board. Readout of firmware and memory contents can be carried out, and a debugger attached to set breakpoints, modify registers, and even to skip checks.

JTAG is therefore a powerful tool for reverse engineers to understand how a device works and to circumvent restrictions like firmware signing or to modify boot behaviour.

Serial Wire Debug (SWD) is a two-pin alternative to JTAG specifically for ARM processors. SWD shares two of the JTAG pins (SWDIO on TMS and SWCLK on TCK), allowing a user to use either JTAG or SWD without the need to break out more pins.

Although generic JTAG adapters like the Segger J-Link exist, many manufacturers have layered complexities on top of JTAG (such as sJTAG and cJTAG) and as such require specialised adapters to connect to a target. Segger’s software also has some support for a wide range of microcontrollers, as does OpenOCD, but sometimes a chip manufacturer’s own software like Xilinx’s Vivado is needed to interact.

4.3. SPI and I2C

SPI and I2C are both board-level, short range, serial-like protocols.

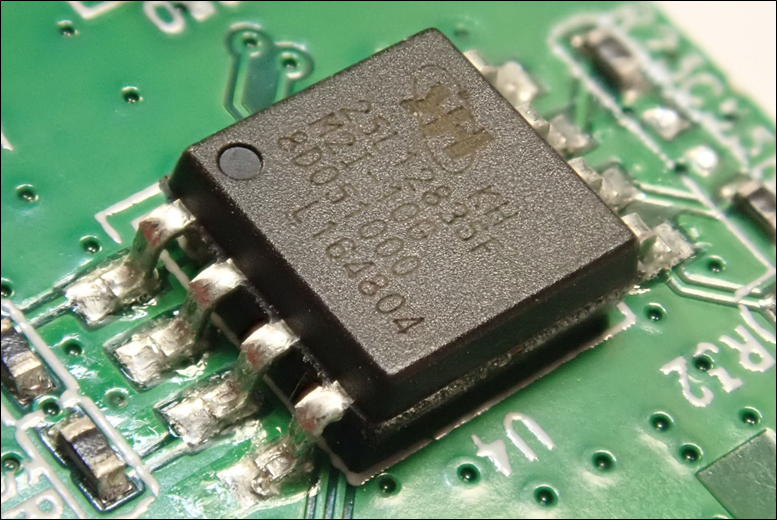

SPI is most used for connecting to external flash and is therefore attractive to an attacker seeking to recover firmware.

Figure 14: SPI flash in a SOIC package

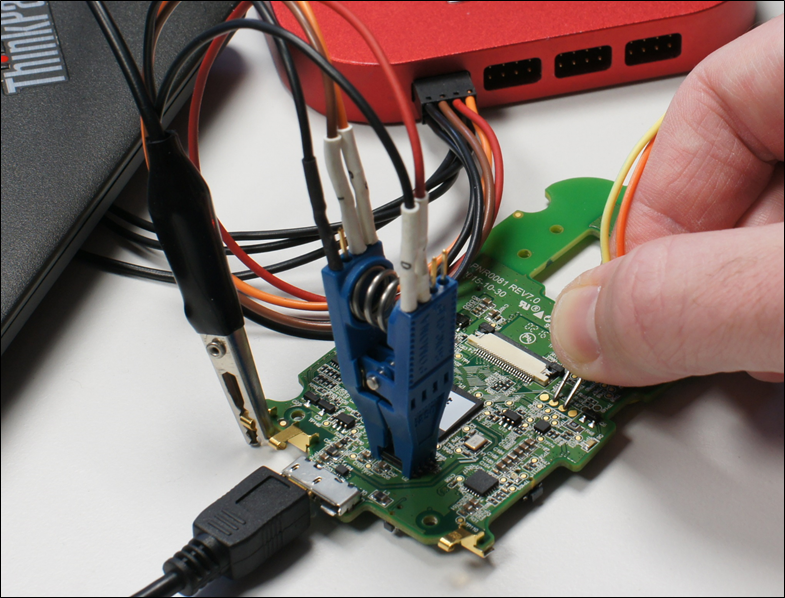

SOIC packages are easy to connect to with a clip device, and then a tool such as a Tigard or FT2232 can be used to connect and read the memory contents using flashrom:

Figure 15: SOIC clip in blue

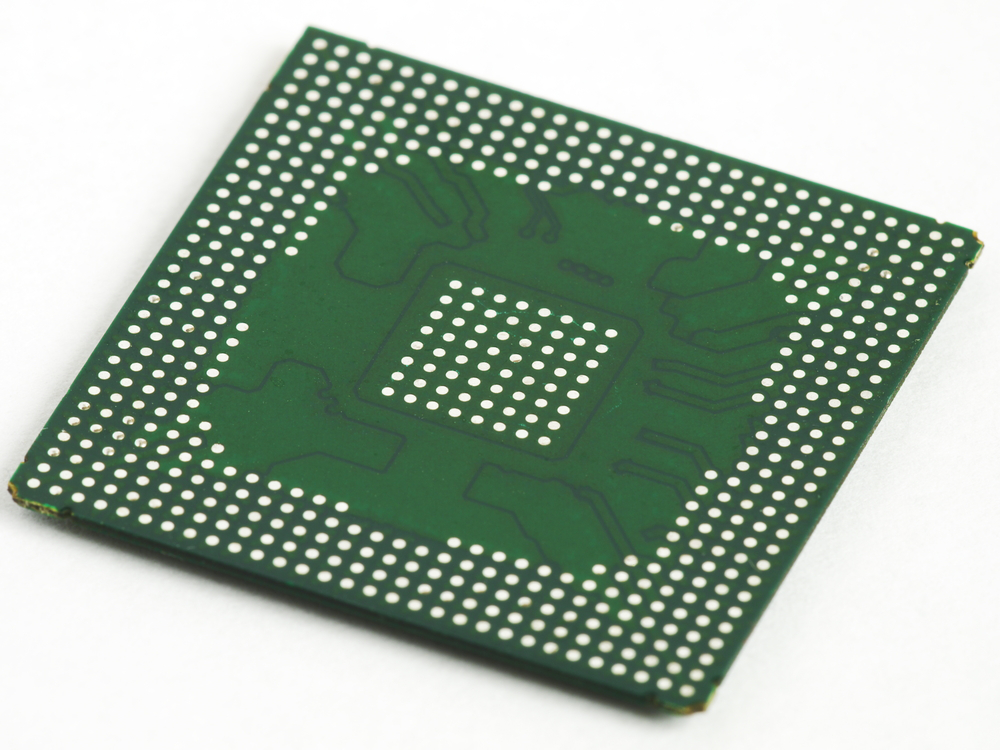

Recovering memory content from ball grid array (BGA) packages can be more challenging as there is nothing to clip onto from above the chip. However, the chip can be removed using a hot air rework tool and sent away for specialist re-balling and then read using a programmer.

This is typically a more time consuming and expensive process, and less accessible.

Figure 16: BGA solder balls underside

I2C is a slower, 2 wire, protocol more often used for connecting things like touchscreens and sensors, although some memory types do still use it. The same techniques as above can be used to intercept and read I2C lines.

4.4. SD and eMMC

SD and eMMC are related storage technologies. Both are predominantly found on embedded systems that run a Linux-based operating system, but can still be found elsewhere.

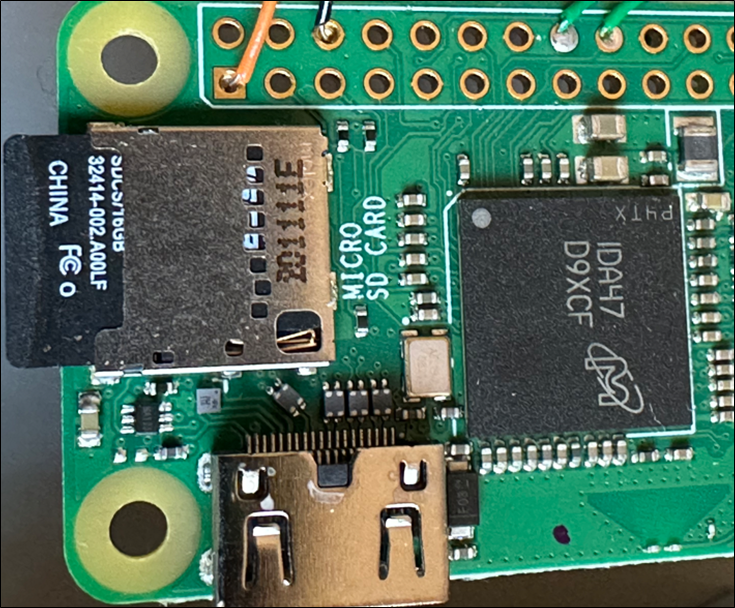

SD contains the main filesystem on Raspberry Pis for example and as it is (intentionally) removable, an attacker with physical access can simply remove the SD card to recover the full firmware, or modify files and add their own root-level user. Although Pis do support a form of secure boot, in PTP’s experience it is rare to find this in use.

Figure 17: Raspberry Pi Zero W with SD card at left

Although Raspberry Pis have a reputation of only being a hobbyist device, PTP have found them in many retail devices including electric car (EV) chargers and industrial routers.

In PTP’s opinion they are unlikely to be sufficiently hardened against an attacker with local access and should usually be avoided on production devices.

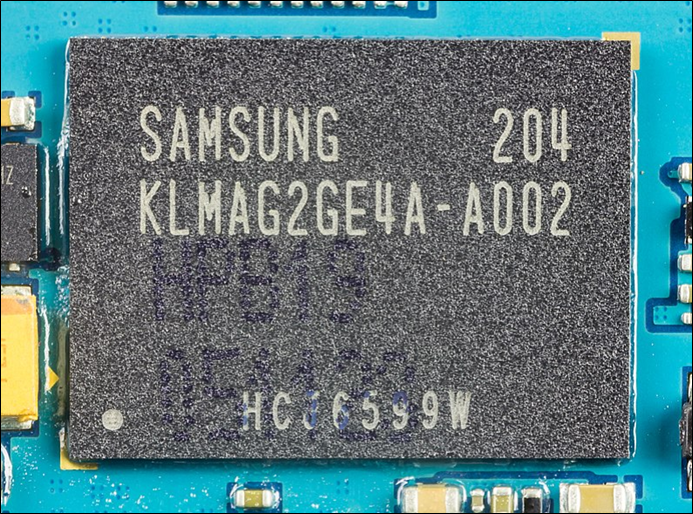

eMMC devices are very similar but packaged in a BGA and soldered to a board. They have eight data lines rather than SD’s four, so are quicker to transfer data into and out of.

Figure 18: eMMC BGA package

As they are BGA, contents recovery is more time consuming than just pulling out an SD card, but they are also rarely encrypted so can yield useful results if there is sufficient motivation. As with other areas, they should not be relied upon to protect secrets.

4.5. eFuses And Code Readout Protection

Many microcontroller families including ARM Cortex, STM32 and ESP32 include code readout protection (CRP) features.

Once programmed in a factory an eFuse https://docs.espressif.com/projects/esp-idf/en/latest/esp32c3/api-guides/jtag-debugging/configure-other-jtag.html is “blown” or certain option bytes https://stm32world.com/wiki/STM32_Readout_Protection_(RDP) set which instructs the device not to allow reading or writing of memory, or JTAG/SWD capabilities. In some devices this can be reversed but should wipe the device’s firmware.

CRP bypasses have been, and continue to be, discovered for many microcontroller families. Vendors sometimes produce different product lines with varying declared resilience to techniques such as glitching and fault injection. Basic models with little protection are likely to be cheaper than security hardened ones for example.

As previously discussed, firmware can be obtained by other means, such as attacking cloud code repositories or targeting individuals for physical theft, so CRP should not be focussed on exclusively. Any CRP bypass may grant access to some secrets, but these should only impact the device physically in front of an attacker and not any other device.

As part of microcontroller selection (see also Section 6 Microcontroller Selection) consideration should be given to:

- Whether a device offers CRP and disabling debuggers.

- If there are any existing publicly known CRP bypasses.

- Whether the vendor gives any future guidance to the resilience of CRP bypass.

- Testing to confirm documentation and assumptions.

5. User Interfaces

This section is primarily concerned with a user’s interaction with an embedded device from a smartphone or similar, rather than smartphone to cloud. Embedded devices can also communicate directly with cloud services.

5.1. Wi-Fi

Wi-Fi is sometimes found as a core feature of a particular microcontroller or as an ancillary chip (such as an nRF).

Wi-Fi can operate in one of two modes: as a client or as a host access point.

When configured as a client, the embedded device needs to store a user’s Wi-Fi SSID and passphrase securely. Where the embedded device is less portable (such as an EV charger) the risks are lower, but for completely portable devices (like smartphones), which can be more readily lost or stolen, the risks are increased if an attacker can recover these details.

Websites exist to geolocate SSID names and, given that the SSIDs of consumer ISP routers are often fairly unique, this could tie a device to a general area. This is particularly serious if the user could be a victim of stalking or harassment.

More typically a device temporarily sets itself as an access point which is then connected to by a smartphone to configure it or update firmware. The access point SSID and passphrase should ideally be unique per device so that an attacker within local range cannot also access the device and recover any sensitive information from it. This may be more difficult if the device doesn’t have a screen to display the information on.
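One way to give each device its own setup credentials is to generate them at provisioning time from a cryptographically secure random source and print them on a label. The sketch below is illustrative only: the function name `make_ap_credentials`, the `Setup` SSID prefix, and the 16-character passphrase length are assumptions rather than anything mandated by a particular product or standard.

```python
import secrets
import string

# Alphabet without easily confused characters (0/O, 1/l/I) so that a
# printed label is easy for a user to read and type.
ALPHABET = "".join(c for c in string.ascii_letters + string.digits
                   if c not in "0O1lI")


def make_ap_credentials(prefix: str = "Setup", passphrase_len: int = 16):
    """Return a (ssid, passphrase) pair generated for a single device."""
    if not 8 <= passphrase_len <= 63:
        # WPA2-Personal passphrases are 8 to 63 characters long.
        raise ValueError("passphrase length must be between 8 and 63")
    suffix = secrets.token_hex(3).upper()  # 6 random hex characters
    ssid = f"{prefix}-{suffix}"
    passphrase = "".join(secrets.choice(ALPHABET)
                         for _ in range(passphrase_len))
    return ssid, passphrase
```

The SSID suffix here is random rather than derived from a MAC address or serial number, so observing the SSID does not reveal another device identifier.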

5.2. Ethernet

Products that include Ethernet should include protections for any services exposed and listening as this is physically easier to connect to and attack than Wi-Fi or BLE. Any USB ports should not unintentionally enumerate as a virtual Ethernet device (RNDIS).

5.3. BLE

BLE (Bluetooth Low Energy) is an evolution of the Bluetooth Classic (BR/EDR) standard, although there are significant differences. BLE should now be used rather than Classic so this section only focuses on BLE 4.

Key Concepts

- Central device: listens for advertising packets and initiates scan requests; typically, the smartphone.

- Peripheral device: a BLE device like a smartwatch which sleeps most of the time and only sends advertising packets when it needs to exchange data.

- Pairing: a temporary connection to allow a short-term exchange of keys. Pairing methods include:

  - Just Works: no verification, a central can just connect to a peripheral.

  - Out Of Band: some other verification, for example by NFC.

  - Passkey: a 6-digit number is entered into both devices.

  - Numeric Comparison: uses the Just Works method but with a 6-digit value shown on both sides for the user to manually confirm is the same.

- Bonding: a long-term connection which avoids the need to re-pair each time.

- Generic Access Profile (GAP): defines how devices can connect and make themselves discoverable.

- Generic Attribute Profile (GATT): a list of services and characteristics exposed by the device.

Security Issues

Although BLE does encrypt communications using AES-CCM, making passive eavesdropping difficult, if the “Just Works” pairing method is in use (as is very common) then active interception is possible by a local attacker as there is no verification of the devices on either side.

Using stronger mechanisms like numeric comparison, or Out of Band, offers the highest security but this can be challenging to implement if the peripheral device has no screen. A unique 6-digit passkey per peripheral can be added on a sticker, or similar, but this adds manufacturing complexity and cost. If a screen is present, however, it can be used to display a randomly generated pairing PIN.

Temporary pairing also means that if a central device (such as a smartphone) disconnects (because it is out of range or switched off) the peripheral can then be connected to by an attacker. As BLE devices tend to have a shared device name, vulnerable devices can be searched for and then connected to and controlled (https://www.pentestpartners.com/security-blog/screwdriving-locating-and-exploiting-smart-adult-toys/).

Bonding offers greater protection and is often used on headphones to stop local attackers taking over what music is being played. The peripheral device is usually put into a bonding mode via a physical switch and thereafter can be used without interaction. Devices that pair each time they connect increase the risk of active or passive interception by a proximal attacker.

GATT and any exposed characteristics should also not offer any sensitive information to an unauthenticated paired connection. Some IoT devices use BLE to set a Wi-Fi passphrase from a smartphone application, but if care is not taken this can then be recovered by a local attacker. Similarly, BLE can be used to control IoT devices, and if a paired connection is available without further authentication an attacker could change the behaviour of a device.

5.4. NFC / RFID

Near field communication (NFC) and radio frequency identification (RFID) are in common use for payment cards and door access control.

Many different types of high and low frequency cards are available; in this section they are classed as passive radio devices in that they don’t use external power. Semi-passive tags are also available in that they need temporary energy from a card reader to power up a low power microcontroller inside the tag.

Hardware tools like the Proxmark3, and even software on Android smartphones can be used to interact with and clone RFID tags.

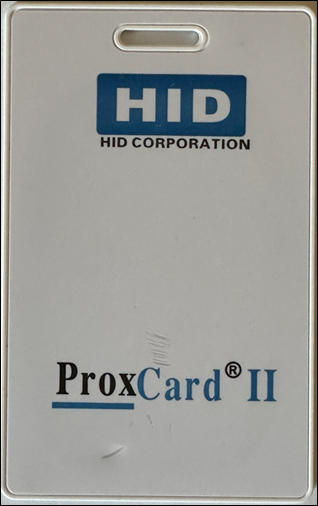

Low frequency HID ProxCard tags use a very small number of bytes on the card to store an ID value which can be trivially recovered and then copied onto a new card. These types of tag are not considered particularly secure but are still in common use in building access control.

Figure 19: HID ProxCard II

High frequency tags include the NXP-designed MIFARE range. The MIFARE Classic uses a proprietary encryption algorithm called Crypto-1 which has been broken. These cards use nested keys to protect other keys and given sufficient time can be brute forced. For cloning access control badges this is a valid attack vector, although simply stealing one is usually easier.

Later versions of the MIFARE DESFire use AES and DES/3DES cryptographic schemes which are stronger and not yet practically broken.

A MIFARE Classic 1k card can cost $0.50 to $1, whereas a MIFARE DESFire is upwards of $3 to $4. In volume, therefore, this can add significant expense to disposable pod manufacturing.

5.5. USB

USB is usually present on development boards and enumerates as a serial port; the same behaviour should not be present in production devices. USB is likely to be present for charging and, if possible, only the power lines should be connected through into the device.

If firmware update capabilities are needed then this can be carried out over USB, or USB can be used to recover a device from a “bricked” state by giving access to the bootloader, often via a keypress sequence. If this is the case, then care should be taken that the bootloader does not permit code readout or other lower-level access. Many recovery protocols have hidden commands that may not be obvious even from a datasheet which could be discovered through USB fuzzing techniques.

If devices are sufficiently low cost, then having a firmware update or recovery mechanism may not be required. If USB data lines are needed then:

- The device should be checked for enumerated VIDs and PIDs: only the bare minimum of device types should be present (e.g. no mass storage, RNDIS, or similar).

- The bootloader and recovery process (DFU) that uses USB should be well defined and verified.

- Development features (like serial UART) should not be accessible in production.

- It should not be possible to fully reprogram the device and misuse a USB port.
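The enumeration check in the list above can be automated once the interface descriptors have been read from a production sample with a USB inspection tool. The following is a minimal sketch that works on already-extracted (class, subclass) pairs; the allowlist, which here permits only a DFU interface, and the helper names are hypothetical and would need to match the actual product design.

```python
# A few USB-IF base class codes, for readable reporting.
USB_CLASS_NAMES = {
    0x02: "Communications (CDC)",
    0x03: "HID",
    0x08: "Mass Storage",
    0x0A: "CDC-Data",
    0xFE: "Application Specific",
    0xFF: "Vendor Specific",
}

# Hypothetical production policy: only a DFU interface
# (class 0xFE, subclass 0x01) is expected to be present.
ALLOWED_INTERFACES = {(0xFE, 0x01)}


def unexpected_interfaces(interfaces, allowed=ALLOWED_INTERFACES):
    """Return the (class, subclass) pairs that are not on the allowlist."""
    return [pair for pair in interfaces if pair not in allowed]


def describe(pair):
    """Format a (class, subclass) pair for a test report."""
    cls, sub = pair
    name = USB_CLASS_NAMES.get(cls, "Unknown class")
    return f"class 0x{cls:02X} subclass 0x{sub:02X}: {name}"
```

For example, a device that also exposed a mass storage interface would be reported by `unexpected_interfaces([(0xFE, 0x01), (0x08, 0x06)])`, which could fail a production acceptance test.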

5.6. General Considerations

Any radio communication with an embedded device should be considered a potentially open and untrusted carrier that an attacker within local range may be able to intercept.

Defence in depth should be applied such that both the carrier (BLE, Wi-Fi) and the encapsulated traffic are encrypted.

6. Microcontroller Selection

At this stage a relatively well-formed requirements list should have been created to help drive microcontroller (MCU) selection which would include items such as:

- Security features

  - Code readout protection

  - Debugger locks

  - Secure elements

  - Resilience to future and current attacks (glitching)

- Cryptographic algorithms

  - AES

  - SHA-256

  - Secure random number generation

- Peripherals

  - BLE

  - Wi-Fi

  - Inputs (buttons and GPIO)

  - Displays

Additional requirements are likely to include power consumption (battery anxiety is a key driver in adoption and satisfaction), supply chain availability, and cost per unit.

Security is not binary or absolute; trade-offs will need to be made between complexity, usability, cost, reputational harm, safety, and intellectual property. Once a base hardware and software bill of materials has been drawn up, iterations of threat modelling can be used to refine a design depending on reprioritised risks.

Future requirements may also include zero or light touch connection to cloud IoT hub services, but this is out of scope at present.

Many cheaper options are available from less well-known vendors. Often these are pin-for-pin clones of other MCUs, and indeed fake STM32s are frequently found in the supply chain. Distinguishing legitimate ST MCUs from clones and outright fakes can be very difficult, although some tooling exists (https://mecrisp-stellaris-folkdoc.sourceforge.io/bluepill-diagnostics-v1.6.html).

GigaDevice are well known for supplying the GD32 equivalent of the STM32, which has some clock and storage speed advantages. The use of SRAM to cache some of the flash storage by the GD32 can lead to small boot-up delays and increased power consumption over the STM32’s pure flash. GigaDevice SPI flash chips are now very frequently found in many types of device.

The cheaper MCUs do typically work, although with a higher dead-on-arrival rate, and the supply availability can be intermittent. One-time-programmable (versus flash) devices may be cheaper still and bring some accidental security benefits.

The greater security concern with cheaper brands is often the toolchain and associated software. These are less likely to be signed, tested, supported, and developed to a high standard, and may well have fewer people who use them. Whilst containerising toolchains can help mitigate against internal compromise, there can still be a risk of compilers introducing untrusted or poor-quality code into final firmware.

In the longer run, poor quality toolchains and lack of support can often offset BOM cost savings, but a full business case analysis should be undertaken.

Programmable SoCs (PSoCs), a combination of MCU and a programmable logic device, can also be used to help accommodate last-minute changes in design. One example is the Infineon ARM Cortex series (https://www.infineon.com/cms/en/product/microcontroller/32-bit-psoc-arm-cortex-microcontroller/). These are specialist devices, however.

7. Code Quality

Code should be written to a good standard, but issues elsewhere, like Serial UART and JTAG availability, can still lead to compromise, as can more serious issues in a cloud-supported mobile application.

7.1. Cloud CI/CD

It is likely that whatever DevOps environment is being used (Azure, AWS, Google Cloud) it will have been created to facilitate multiple developers and eventual integration with third-party hardware teams.

Attackers wishing to steal intellectual property (IP), and frustrated by device countermeasures that prevent firmware recovery, are likely to switch their attention to the cloud code repositories and attack either the business itself or third-party employees (or both) to gain access.

Securing the DevOps environment through conditional access and multi-factor authentication is therefore important. Your staff will also likely have centrally managed devices from which to work, but this is unlikely to be so for third-parties. Technical and policy controls should be considered to manage the risk of downloading code and IP onto uncontrolled devices.

Hardware-backed signing of commits is often used in Git to verify the committer (https://developers.yubico.com/SSH/Securing_git_with_SSH_and_FIDO2.html). With a robust DevOps setup, enforcing access controls would likely give the same level of assurance. However, if less trusted third parties are given access and cannot use a corporately managed device, a device such as a YubiKey could be used for such contributors.

Pipelines and Secrets

We often find build pipelines used in DevOps environments with multiple stages and tests in place, which is all good practice. Variable groups and secure files inside the DevOps environment library mean that passwords for remote storage and signing keys cannot be accessed directly.

However, regardless of good practice and design, Azure Key Vault or AWS KMS should be considered for future use to ensure sensitive key material is securely stored at rest.

Care should also be taken when writing stage jobs that the logs do not reflect any secrets into them that could be observed by an attacker. Automatic scanning of repositories for secrets, along with periodic manual review, should also take place.
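As a rough illustration of keeping secrets out of job logs, the sketch below masks anything that looks like a credential before a line is written. The patterns and the `redact` and `contains_secret` helper names are assumptions chosen for the example; dedicated secret scanners use far larger rule sets and entropy checks.

```python
import re

# Illustrative patterns only.
ASSIGNMENT_RE = re.compile(
    r"(?i)\b(password|passwd|secret|token|api[_-]?key)\b(\s*[=:]\s*)(\S+)"
)
PRIVATE_KEY_RE = re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----")


def redact(line: str) -> str:
    """Mask the value part of anything that looks like a secret."""
    line = ASSIGNMENT_RE.sub(lambda m: m.group(1) + m.group(2) + "***", line)
    return PRIVATE_KEY_RE.sub("***PRIVATE KEY REDACTED***", line)


def contains_secret(line: str) -> bool:
    """Return True if the line matches any of the secret patterns."""
    return bool(ASSIGNMENT_RE.search(line) or PRIVATE_KEY_RE.search(line))
```

The same `contains_secret` check could be run over repository contents as a simple pre-commit or pipeline gate, with periodic manual review covering what the patterns miss.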

Roles, access, and permissions should also be managed to ensure third parties only see the repositories they need to. A common concern is that third parties would be able to see or change sensitive information in pipeline YAML files. Multiple options for restricting this (https://learn.microsoft.com/en-us/azure/devops/pipelines/security/resources?view=azure-devops#protected-resources) include:

- Placing the YAML files in a separate repository or sub folder and using a lightweight project.yml template to include other YAML https://learn.microsoft.com/en-us/azure/devops/pipelines/process/templates?view=azure-devops#using-other-repositories

- Setting permissions to allow only a pipeline to access sensitive files.

- Requiring approvals before a pipeline can be run https://learn.microsoft.com/en-us/azure/devops/pipelines/process/templates?view=azure-devops#using-other-repositories

- Requiring approvals for specific deployment or pull requests https://learn.microsoft.com/en-us/azure/devops/pipelines/process/templates?view=azure-devops#using-other-repositories

Docker and Kubernetes

Where Docker and Kubernetes images are in use they can hold secrets. Image scanning should be performed not only for embedded sensitive material, but for any vulnerabilities present in libraries or other dependencies especially for those included from external public resources.

https://docs.docker.com/engine/scan/

https://snyk.io/learn/docker-security-scanning/

Breakout, pivoting, and lateral movement, particularly in containers running in the cloud, can lead to wider compromise.

SAST and DAST

Static application security testing (SAST) can be included in CI/CD pipeline stages along with other unit and functional testing. Multiple vendors offer different options which would typically flag unsafe uses of programming languages, such as the known insecure functions sprintf and strcpy in C, but in depth!
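To give a feel for the simplest kind of check a pipeline stage might run, the sketch below flags calls to a short list of unbounded C string functions. It is a crude pattern match, not a real SAST tool (which would parse the code and track data flow), and the function list and the `find_unsafe_calls` name are assumptions made for this example.

```python
import re

# Functions flagged in this sketch; the list is illustrative, not a standard.
UNSAFE_CALLS = ("strcpy", "strcat", "sprintf", "gets")
CALL_RE = re.compile(r"\b(" + "|".join(UNSAFE_CALLS) + r")\s*\(")


def find_unsafe_calls(source: str):
    """Return (line_number, function_name) for each flagged call in C source."""
    findings = []
    for number, line in enumerate(source.splitlines(), start=1):
        # Ignore trailing line comments. This naive split would also cut
        # string literals containing "//", which a real tool would handle.
        code = line.split("//", 1)[0]
        for match in CALL_RE.finditer(code):
            findings.append((number, match.group(1)))
    return findings
```

Because the pattern uses word boundaries, bounded alternatives such as snprintf are not reported. A pipeline stage could fail the build when the returned list is not empty.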

7.2. Coding Best Practices

Where real-time operating systems (RTOS) are in use rather than embedded Linux distributions, some areas are worth explicitly mentioning.

Memory management

C relies on the developer to allocate memory and manage pointers, rather than this being handled automatically as in many more abstracted languages. In an RTOS, memory should be allocated at compile time to avoid memory leaks.

Underflows and overflows, especially from “tainted” sources (external sources of untrusted data), would normally lead to a denial-of-service condition. Strings are infrequently used in RTOS so attacks such as cross-site scripting are not directly applicable to the embedded devices themselves, only to mobile and web applications. Input validation should still be performed, however.

Type casting should be handled carefully.

With RTOS, exploit mitigations such as ASLR, DEP, and NX are unlikely to be available to use.

Race conditions

RTOS do support multithreading and multitasking and although RTOS should be more time sliced than resource-shared, some “soft” RTOS can still have some jitter in process allocation. When two threads try to access shared data simultaneously this can lead to a race condition.

Atomic operations can be used in FreeRTOS (https://github.com/FreeRTOS/FreeRTOS-Kernel/blob/main/include/atomic.h) and should be preferred when usable for a particular function.
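The underlying problem is a read-modify-write on shared data that another thread can interrupt. The sketch below shows the pattern using Python threads as a stand-in for RTOS tasks, with a lock playing the role that an atomic operation or mutex would play on a real device; the `Counter` class and `run` helper are hypothetical names for illustration only.

```python
import threading


class Counter:
    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment_unsafe(self):
        # Read and write are separate steps: another thread can run in
        # between, and one of the two updates is then lost.
        current = self.value
        self.value = current + 1

    def increment_safe(self):
        # The lock makes the read-modify-write appear atomic to other threads.
        with self._lock:
            self.value += 1


def run(worker, threads: int = 4, iterations: int = 2000) -> None:
    """Call worker() the given number of times from each of several threads."""
    def loop():
        for _ in range(iterations):
            worker()

    pool = [threading.Thread(target=loop) for _ in range(threads)]
    for t in pool:
        t.start()
    for t in pool:
        t.join()
```

With `increment_safe` the final count always equals threads multiplied by iterations; with `increment_unsafe` it may come up short, depending on how the threads happen to be scheduled.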

TOCTOU

Very similar to a race condition, time-of-check-time-of-use (TOCTOU) vulnerabilities occur when data is modified after being verified but before being used. An example would be a secure boot verification step that computes a check against content in external storage but then allows an attacker to replace the contents of the storage before it is executed.
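The secure boot example can be sketched as follows. This is a simplified model under stated assumptions: `ExternalFlash` is a hypothetical stand-in for storage that an attacker can rewrite between reads, and a SHA-256 digest comparison stands in for full signature verification.

```python
import hashlib
import hmac


class ExternalFlash:
    """Simulated storage: the first read returns the genuine image,
    every later read returns the attacker's replacement."""

    def __init__(self, image: bytes, swapped: bytes):
        self._reads = [image, swapped]

    def read(self) -> bytes:
        return self._reads.pop(0) if len(self._reads) > 1 else self._reads[0]


def boot_vulnerable(flash, expected_digest: bytes) -> bytes:
    # Check: hash whatever is in storage right now.
    digest = hashlib.sha256(flash.read()).digest()
    if not hmac.compare_digest(digest, expected_digest):
        raise ValueError("verification failed")
    # Use: storage is read a second time, so the contents may have changed.
    return flash.read()


def boot_safe(flash, expected_digest: bytes) -> bytes:
    image = flash.read()  # single read into memory the attacker cannot reach
    digest = hashlib.sha256(image).digest()
    if not hmac.compare_digest(digest, expected_digest):
        raise ValueError("verification failed")
    return image  # execute exactly the bytes that were verified
```

In this model `boot_vulnerable` ends up returning the swapped image even though verification passed, while `boot_safe` returns the verified copy.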

Side channel attacks

There are a multitude of side channel attack types; each relies on additional derived information that can help with the (typically) cryptanalysis of a system. For example, looking at the microscopic variations in power consumption of an MCU during a cryptographic algorithm computation can leak small amounts of data.

These cribs can help reduce the keyspace needed to brute force, or even recover plaintext itself.

Side channel attacks are hard to carry out and frequently costly, so the effort spent on mitigations should be weighed against the time and money an attacker would need and the value of what is being kept secret. A device like a hardware security module or cash machine would warrant the significant development effort.
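Timing is one side channel that can often be addressed cheaply in software. The sketch below contrasts a comparison that returns at the first mismatching byte (so its run time leaks how much of a secret value was guessed correctly) with a constant-time comparison from the Python standard library. The function names are hypothetical, and on an MCU the equivalent would be a constant-time compare routine from the cryptographic library in use.

```python
import hmac


def check_tag_leaky(expected: bytes, supplied: bytes) -> bool:
    """Returns as soon as a byte differs, so run time depends on the secret."""
    if len(expected) != len(supplied):
        return False
    for a, b in zip(expected, supplied):
        if a != b:
            return False
    return True


def check_tag_constant_time(expected: bytes, supplied: bytes) -> bool:
    """Run time does not depend on where the first mismatch occurs."""
    return hmac.compare_digest(expected, supplied)
```

Both functions return the same answers; only the amount of information leaked through timing differs.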

Privilege levels

In embedded Linux systems, like consumer broadband routers, it is still very common to see all processes run as root. Where a vulnerability is found in network-accessible services, especially management web applications, this can then lead to total compromise of the whole device.

An RTOS has a less comprehensive user privilege management concept but still has privileged and user modes. Tasks in user mode are blocked from accessing or executing certain functions, but it should still be assumed that any user-supplied input could realistically result in significant access.

7.3. Releases

Code and firmware signing is an important step in releasing code, but control should be maintained over a production release. Arbitrary signing capabilities should not be available to all, and development signing certificates should be separate from production. Ideally an offline step is used with a securely stored signing key, although this may not always be practically possible.

Signing keys should never be released to factories. Verification that unsigned or other-signed code cannot run should still be performed, in case a factory modifies the secure boot chain.

8. Cloud Ecosystem

Connected embedded systems (IoT) generally communicate with some form of cloud services, either directly or through mobile applications.

These cloud services – typically APIs – offer an attacker a larger surface than a single physical device that requires local attacks. Cloud services frequently contain sensitive data, like logins or age verification, that has value to an attacker. This value can be realised either through resale, or by hacktivists seeking to further a specific agenda.

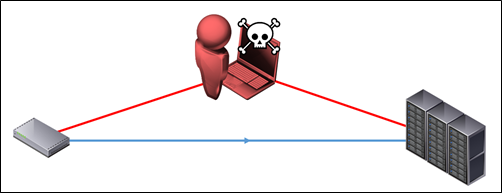

A simplistic IoT threat model generally includes only a home user’s internet connection, cloud services, and an opportunistic attacker somewhere in the middle:

Figure 20: Naïve attack view

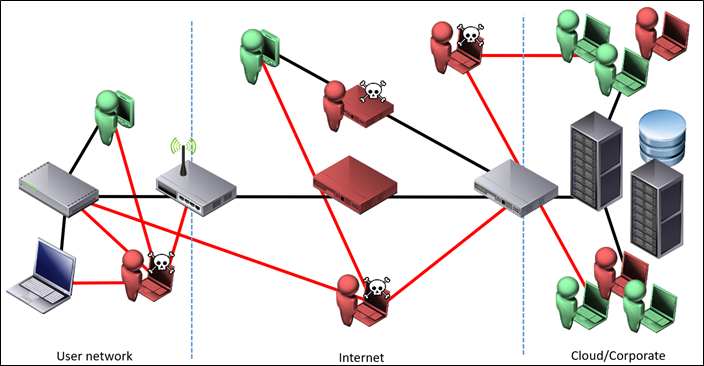

Whereas more realistically it is a complex attack surface at multiple stages:

Figure 21: Real world attack view

Attacks can come not only from threats on a home user’s network and intermediate stages on the internet, but also from malicious insiders among both corporate staff and third parties with access.

A major consideration should be any administrative or backend views of any cloud services. Typically, there is a dashboard for analytics or customer services, or at the very least users with significant access to the cloud account who can export data via backups or into a storage bucket. Any administrative access must be well secured.

Many cloud platforms offer insecure-by-default services like storage buckets and Elasticsearch, and there is often a significant temptation to export live data into these services “temporarily”. Due to the volumes of sensitive data found in these services, tools exist (https://buckets.grayhatwarfare.com/) to enumerate and search them at scale, so despite the often obscure URLs needed to access them they will be found in short order.

Comprehensive controls are needed to separate development from production and to ensure no single person could expose services and data directly to the Internet. Logging should also be in place from the first day to allow incident response to analyse any potential breaches.

As discussed in 3.5 Cloud Onboarding, managing large fleets of cloud-connected devices at scale can also be a challenge. PTP have seen several vendors with some form of remote access into IoT hubs and routers. This can be a traditional VPN, reverse SSH tunnel, or private APN on a mobile network. The risks of this are twofold: firstly, that someone has remote access into a customer’s networks, and secondly, that internal company infrastructure could now be exposed to a remote attacker who can leverage the remote connections. Combining the two could even allow lateral movement between customers.

Always-on devices should be securely identified and tied to a customer account, with bidirectional data queued. MQTT is frequently used for such asynchronous messaging in IoT but also carries its own security risks if not implemented properly.

9. Mobile Applications

Companion mobile applications are a common feature of consumer IoT and are used to retrieve data, change settings, and update firmware.

Design decisions will need to be made as to whether cloud-connectivity is required onwards from the mobile application. Storing data can be helpful for users but further analytics should be carried out mindfully.

Any mobile applications should use the best security features available on current Android and iOS devices including hardware-backed secure enclaves and keystores, biometrics, and modern programming classes and libraries. As with TLS, different geographical locations are likely to have different penetrations of Android versions so it may not be possible to mandate, for example, Android 12 and later.

Mobile device storage like local logging, SQLite databases, and writes to external storage can lead to risks of disclosure of sensitive information and authentication tokens, especially if a jailbroken/rooted device is present.

Other normal recommendations apply around authentication and authorisation in APIs, especially regarding insecure direct object references. This is where a low entropy identifier is used (e.g. userid = 123) but no further checks are made on what a user can access, so a user can access other users’ data (e.g. userid = 456).
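A minimal sketch of the difference is shown below, assuming a hypothetical in-memory `RECORDS` store and a session user ID that the server has already authenticated. The handler names are illustrative and not tied to any particular web framework.

```python
class Forbidden(Exception):
    """Raised when a user requests data they are not authorised to see."""


# Hypothetical data store keyed by user ID.
RECORDS = {
    123: {"email": "alice@example.com"},
    456: {"email": "bob@example.com"},
}


def get_record_vulnerable(session_user_id: int, requested_user_id: int) -> dict:
    # Trusts the identifier supplied in the request: any logged-in user
    # can read any record simply by changing the number.
    return RECORDS[requested_user_id]


def get_record_checked(session_user_id: int, requested_user_id: int) -> dict:
    # Authorisation check: the authenticated session must own the record.
    if session_user_id != requested_user_id:
        raise Forbidden("user may only access their own record")
    return RECORDS[requested_user_id]
```

Using unguessable identifiers reduces casual enumeration, but the server-side ownership check is what actually closes the issue.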

Where customer (or other) passwords are handled server-side these should follow best practice hashing mechanisms such as bcrypt, or leverage a ready-made solution like AWS Cognito. Local token storage should use hardware security features as above.
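The sketch below shows the general shape of salted, deliberately slow password hashing. Note the substitution: bcrypt is not in the Python standard library, so scrypt (another memory-hard password hashing function, available via `hashlib` when Python is built against OpenSSL) is used in its place. The cost parameters and function names are assumptions for illustration and would need tuning for the server hardware in use.

```python
import hashlib
import hmac
import secrets

# Illustrative cost parameters, not a recommendation for any given system.
SCRYPT_PARAMS = {"n": 2**14, "r": 8, "p": 1, "dklen": 32}


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived_key) for storage; the password is never stored."""
    salt = secrets.token_bytes(16)  # unique random salt per password
    key = hashlib.scrypt(password.encode("utf-8"), salt=salt, **SCRYPT_PARAMS)
    return salt, key


def verify_password(password: str, salt: bytes, stored_key: bytes) -> bool:
    """Recompute the key from the supplied password and compare in constant time."""
    key = hashlib.scrypt(password.encode("utf-8"), salt=salt, **SCRYPT_PARAMS)
    return hmac.compare_digest(key, stored_key)
```

Because each password has its own salt, two users with the same password end up with different stored values.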

Certificate pinning, root detection, and code obfuscation countermeasures can help secure mobile to cloud communications and frustrate attempts at reverse engineering, but all can be bypassed eventually and should not be considered as a primary line of defence.

10. Validation And Testing

Using a structured approach to threat modelling should allow major architectural issues to be resolved before manufacturing and any other significant investments. Security should be considered throughout the design lifecycle, but there still comes a point at which assumptions need to be validated.

Unit and functional testing of code using SAST and DAST goes some way to assisting but ultimately a complete product test of an IoT device, companion mobile applications, APIs, web applications, and cloud accounts will be required. Full testing of these elements may only be needed on day one, or when fundamental changes are made (like an entirely new product line).

Fuzzing can be a rich source of vulnerabilities in embedded devices. A “normal” interaction with an interface or device (especially network services, BLE, and USB) is recorded and a tool is used to send the same data with changes over many thousands of iterations, with the hope that at some point a crash or change in function occurs. At this point the test case and memory contents can be examined to see why this occurred. Fuzzing tools like radamsa (https://gitlab.com/akihe/radamsa) exist to help automate the process but this can be more challenging when in-memory fuzzing of an embedded device is required. Transferring some testing into an emulator rather than bare metal can help.
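The record-and-mutate approach described above can be sketched in a few lines. This is a toy mutation fuzzer under stated assumptions: `parse_packet` is a hypothetical parser with a deliberate bug, running in-process rather than on a device, and an uncaught exception stands in for a crash.

```python
import random


def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Return a copy of seed with one random mutation applied."""
    data = bytearray(seed)
    choice = rng.randrange(3)
    if choice == 0 and data:        # flip one bit
        pos = rng.randrange(len(data))
        data[pos] ^= 1 << rng.randrange(8)
    elif choice == 1:               # insert a random byte
        data.insert(rng.randrange(len(data) + 1), rng.randrange(256))
    elif data:                      # delete one byte
        del data[rng.randrange(len(data))]
    return bytes(data)


def fuzz(target, seed: bytes, iterations: int = 10_000, rng_seed: int = 0):
    """Feed mutated inputs to target and collect the inputs that raise."""
    rng = random.Random(rng_seed)
    crashes = []
    for _ in range(iterations):
        case = mutate(seed, rng)
        try:
            target(case)
        except Exception as exc:    # treated as a "crash" in this sketch
            crashes.append((case, repr(exc)))
    return crashes


def parse_packet(packet: bytes) -> int:
    """Toy parser with a bug: it trusts the length byte in the header."""
    length = packet[0]
    return packet[1 + length]       # trailing checksum byte; fails if length lies
```

Running `fuzz(parse_packet, bytes([3, 0x41, 0x42, 0x43, 0x99]))` replays a recorded well-formed packet with mutations and returns the inputs that broke the parser, which can then be examined individually.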

Ideally multiple fuzzing tools should be run continuously to provide better coverage, rather than for a fixed maximum time.

Splitting testing into manageable chunks can make things easier, but it can also be restrictive and mask vulnerabilities if a whole ecosystem is not considered.

Point in time testing can be useful, particularly when a new product is available, but ongoing sampling is also vital to ensure third party manufacturers are still using the same BOM and that the firmware is legitimate. Some IoT vendors pick products from retailers at random intervals to confirm what is being sold matches what the factory should be producing.

External assurance should be sought where there is a knowledge, regulatory, or policy need for independent verification.

11. Conclusions And Checklists

Secure software development for embedded systems requires a continuous cycle of threat modelling and improvement. Security is not easily integrated solely at the end of a project and should be considered throughout.

Overriding Principles

- Embed security throughout development.

- Threat model and apply consistent qualitative and quantitative risk ratings.

- Protect customer data.

- Include firmware integrity and signing checks.

- Where applicable, ensure hardware-backed security identities are used (and not just serial numbers or MAC addresses).

- Protect, but treat local interfaces like Wi-Fi and BLE as open carriers and apply additional security to contents.

- The compromise of one local device should not give access to all devices – the “break once run anywhere” (BORE) attack. Any cryptographic material should be unique per device.

- Use the strongest available, public, cryptographic algorithms.

- Keep up to date with vendor-published vulnerabilities and state of exploitation techniques.

- Test an entire device ecosystem and not just unit test single elements.

Check Lists When Using Third Party Hardware

- Verify software and hardware BOMs are as designed.

- Verify all debug access (UART, JTAG, SWD) is closed.

- Verify secure boot (all boot phases are signed, unsigned firmware doesn’t run).

- Verify secrets are appropriately protected.

- Validate samples at random from retail and production line.