We’ve been doing automotive pen testing for several years now. Along the way we’ve had some fascinating experiences, working with some insightful and forward-thinking OEMs. But we’ve also worked with OEMs and suppliers that consider pen testing to be a box-checking exercise and, frankly, have buried their heads in the sand when confronted with concerning security flaws. Others have limited the scope so much that useful discovery is impossible.

Security testing aims to find vulnerabilities

A vulnerability is a weakness that could be exploited to perform unauthorised actions on a computer system. The risk a vulnerability poses may be reduced, to a point, if certain conditions must be met to exploit it, such as:

- Physical access – access to one or more devices is required to exploit the vulnerability.

- RF range – the attacker must be within radio frequency range.

- Authentication – a set of valid credentials is required e.g. a Wi-Fi password for a hotspot.

- User interaction – a user of the system must carry out an action e.g. click on a link.

Once vulnerabilities are found, a decision needs to be made on how to handle them. One might:

- Fix the vulnerability – preventing it from being exploited. Perhaps by upgrading to a later version of software that does not include the vulnerability.

- Mitigate the risk – put barriers in place to make the vulnerability harder to exploit or to reduce the impact if it is exploited. For example, a CAN gateway.

- Accept the risk – decide to leave the vulnerability in place, acknowledging that it exists.
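To make the mitigation option more concrete, a CAN gateway typically forwards only the message IDs that a destination bus actually needs. The sketch below models that idea in Python under stated assumptions: the bus names, the routing table and the IDs are all hypothetical, and real gateways implement this in ECU firmware, often with further checks on payloads and rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CanFrame:
    arbitration_id: int  # 11-bit standard CAN identifier
    data: bytes          # up to 8 bytes for classic CAN


# Hypothetical routing table: which IDs may cross from one bus to another.
# Real gateway configurations are OEM-specific; these values are illustrative only.
ROUTING_WHITELIST = {
    ("infotainment", "powertrain"): frozenset(),                # nothing may pass
    ("powertrain", "infotainment"): frozenset({0x0F1, 0x1A0}),  # e.g. status for display
}


def forward(frame: CanFrame, src: str, dst: str) -> bool:
    """Return True if the gateway should forward the frame from src to dst.

    Default-deny: any bus pair or ID not explicitly listed is dropped.
    """
    allowed = ROUTING_WHITELIST.get((src, dst), frozenset())
    return frame.arbitration_id in allowed


# A frame the IVI tries to inject onto the powertrain bus is dropped.
print(forward(CanFrame(0x0F1, b"\x00"), "infotainment", "powertrain"))
```

The default-deny design is what makes this a mitigation rather than a fix: a compromised IVI is still compromised, but it cannot push arbitrary frames onto the powertrain bus, which limits the impact.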

Accepting risk is often seen as an undesirable option in automotive. Perhaps this stems from a safety culture, where safety-related issues might prevent a product from reaching the market. Security is not binary though; there is no such concept as a secure product, just degrees of risk.

As a result, testing is sometimes artificially constrained to prevent issues from being discovered. Limiting a security test means fewer issues are found and fewer risks have to be accepted or mitigated, reducing time to market.

“Out of sight, out of mind” describes this attitude. It is harmful and high-risk, and is often encountered in less forward-thinking automotive organisations.

Head in the sand. How automotive pen testing is sometimes constrained

Examples of constraining testing to limit findings might include:

- A hardcoded password, common to all devices and stored as a hash, is discovered, but the password itself is not provided to the consultant during testing. Given time and resources, it would be possible to obtain the plaintext password, either by brute force or by another method (such as a breach of development systems). Providing the password to permit access would allow a greater depth of testing and avoid wasting time.

- Code readout protection (CRP) preventing access to firmware. Given time and resources, firmware can be recovered from most devices and then reverse engineered. New CRP bypass methods are discovered over time, which can completely change the security profile of the system. Firmware analysis can uncover issues such as backdoor access, hardcoded keys, or debug functionality. Giving direct access to firmware allows testing to progress faster.

- Source code access. Whilst compiled firmware can be disassembled to determine its function and find vulnerabilities, this can take a long time, especially on large systems (e.g. IVIs) or ones with little information available (e.g. some CAN gateways). Source code access allows the consultant to quickly assess the significance of a finding, raising or lowering its importance.

- Limiting scope. For example, taking backend services out-of-scope so that they cannot be tested. This may be due to legal or supplier issues, but an attacker is not limited in which actions they can perform. OEMs would surely want to know about a backend platform security flaw that allowed remote compromise of entire fleets of vehicles. Yes, we’ve found exactly these issues on customer tests.
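To illustrate why withholding a hashed hardcoded password only delays testing, here is a minimal sketch of a dictionary attack. It assumes the device stores an unsalted SHA-256 digest of the password, which is a hypothetical choice; real devices may use other schemes, and slow salted hashes raise the cost of guessing but do not protect a weak password indefinitely. The password and wordlist are made up for the example.

```python
import hashlib
from typing import Iterable, Optional


def crack_sha256(target_hex: str, candidates: Iterable[str]) -> Optional[str]:
    """Return the first candidate whose SHA-256 digest matches target_hex, else None."""
    target = target_hex.lower()
    for word in candidates:
        if hashlib.sha256(word.encode("utf-8")).hexdigest() == target:
            return word
    return None


# Hypothetical hash recovered from the firmware of every unit.
recovered_hash = hashlib.sha256(b"service1234").hexdigest()
wordlist = ["admin", "password", "service", "service1234", "root"]
print(crack_sha256(recovered_hash, wordlist))  # prints service1234
```

Because the same password is used on every device, one successful guess unlocks the whole fleet, which is exactly the kind of finding a constrained test can fail to report.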

The real world of testing

There are several realities about security testing that should be accepted:

- Testing is always limited in time.

- Deeply hidden vulnerabilities may not be uncovered. There are hundreds of examples of issues in widely deployed software and hardware that have existed for years without being found. Heartbleed is a well-known example: a flaw in the widely used OpenSSL library that went undiscovered for around two years.

Because security testing cannot guarantee that all vulnerabilities will be found, defence-in-depth methods should be used. This means that multiple security mechanisms are put in place to protect assets. There are many clear examples of this being used in systems:

- Password hashing – passwords are stored as salted hashes, so that even if the data is leaked, the plaintext passwords cannot easily be recovered.

- Firewalling (or gateways) – a firewall can stop machines on an internal network from being reached from the Internet, so that a vulnerable service on one of them cannot be exploited directly.

- Key diversification – devices use different keys so that even if one device is compromised, its key cannot be used to compromise other devices.
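Key diversification is commonly done by deriving each device's key from a master key and a unique device identifier. The sketch below assumes HMAC-SHA256 as the derivation function; the label, key length and device IDs are hypothetical, and production systems would more likely use a standardised KDF (such as HKDF or NIST SP 800-108) with the master key held in a backend HSM, never on the device.

```python
import hashlib
import hmac


def diversify_key(master_key: bytes, device_id: str, length: int = 16) -> bytes:
    """Derive a per-device key as HMAC-SHA256(master_key, label || device_id).

    The 32-byte HMAC output is truncated to `length` bytes (16 suits AES-128).
    """
    if not 1 <= length <= 32:
        raise ValueError("length must be between 1 and 32 bytes")
    # Hypothetical label, used to separate this derivation from any others.
    msg = b"device-key-v1|" + device_id.encode("utf-8")
    return hmac.new(master_key, msg, hashlib.sha256).digest()[:length]


MASTER_KEY = bytes(range(32))  # placeholder only; a real master key stays in the backend

key_a = diversify_key(MASTER_KEY, "VIN-EXAMPLE-0001")
key_b = diversify_key(MASTER_KEY, "VIN-EXAMPLE-0002")
print(key_a.hex() != key_b.hex())
```

Extracting `key_a` from one vehicle does not let an attacker compute `key_b`, because doing so would require the master key, which never leaves the backend.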

Just because a total system compromise is not found during a security test does not mean that one will not be found in the future. 10 vulnerabilities could be identified during a test, but it may take the 11th to permit total compromise.

Multiple layers of defences can help ensure that the 11th vulnerability is not critical to the security and safety of your vehicles.

High risk attack classes

In terms of risk to automotive systems, there are several classes of attack that stand out as high impact:

- Break-once Run Everywhere (BORE) attacks – for example, obtaining a hardcoded password common to all IVIs. The password could then be shared widely and used by many people to carry out further attacks.

- Centralised attacks – this might include telematics platforms, software and firmware updates etc. These could allow multiple (or even all) connected entities to be compromised.

It is vital that testing does not exclude these classes of attack by artificially removing backend systems from scope.

The eventual risk that a vulnerability produces depends on several aspects:

- How hard is the vulnerability to discover?

- How hard is the vulnerability to exploit?

- What is the impact of it being exploited?
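One way to combine these three questions is to rate each finding and rank the results. The scheme below is a deliberately simple, hypothetical example (1 to 5 ratings, likelihood limited by the harder of discovery and exploitation, risk as likelihood × impact). It is not a standard method; frameworks such as ISO/SAE 21434 define their own feasibility and impact ratings. The findings are made up.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    name: str
    discovery_ease: int  # 1 (very hard to discover) .. 5 (trivial)
    exploit_ease: int    # 1 (very hard to exploit) .. 5 (trivial)
    impact: int          # 1 (negligible) .. 5 (fleet-wide or safety-critical)

    def __post_init__(self) -> None:
        for field_name in ("discovery_ease", "exploit_ease", "impact"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be 1..5, got {value}")

    def likelihood(self) -> int:
        # Hypothetical: an attack is only as likely as its hardest step allows.
        return min(self.discovery_ease, self.exploit_ease)

    def risk(self) -> int:
        # Hypothetical score on a 1..25 scale.
        return self.likelihood() * self.impact


findings = [
    Finding("Debug UART left enabled", discovery_ease=4, exploit_ease=4, impact=2),
    Finding("Hardcoded password shared across all IVIs", discovery_ease=2, exploit_ease=5, impact=5),
]
for finding in sorted(findings, key=Finding.risk, reverse=True):
    print(finding.risk(), finding.name)
```

The output is a ranking to inform a decision to fix, mitigate or accept each finding, not a verdict that the system is secure or insecure.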

As a result, it is rarely possible to say that a system or device is secure or not; all that can be said is that the risk is acceptable. Security cannot be seen as “on or off”.

Making difficult choices. What do you want to discover during testing?

Coverage vs Depth

Coverage relates to the aspects of a system that are examined. For example, an IVI comprises physical interfaces, Wi-Fi, Bluetooth, CAN, USB, firmware update, the operating system, etc.

Depth relates to the amount of time/effort spent looking at each area.

A test should aim to get both good coverage and depth, with a balance struck between the two.

Black/grey/white box

This describes the level of access a tester is given to the tested system.

- Black box – the tester is given the same level of access a member of the public would have.

- White box – the tester is given full access, potentially including source code, documentation, schematics, keys, passwords, debug access etc.

- Grey box – somewhere between black and white box.

Black box testing provides poor value. A large portion of the test may be consumed gaining access to the system being tested and performing reverse engineering, leaving little time to find other security issues. A genuine adversary would not stop at this stage, as they are not time-constrained in the same way as a consultant.

White box testing can be challenging to arrange. For example, a TCU may be produced by one vendor, use the cellular infrastructure of a given provider, connect to the telematics infrastructure of another provider, and hold data concerning other manufacturers.

For this reason, grey box testing is the preferred approach.

Even within a grey box test, a period of black box testing can be carried out to check that security mechanisms, such as code readout protection, are effective.

Checking boxes rather than finding vulnerabilities:

Risk-based vs compliance-based security testing

Whilst conventional IoT security testing is scoped and described before testing commences, it is most frequently carried out in an open-ended way. Testing follows the paths that are most likely to result in serious impact, and it is the consultant’s experience that ensures the most likely routes to compromise are followed. Checklists may be used, though the most interesting and concerning findings are often found during ‘free-form’ testing. If new entities (infrastructure, applications, or companies) are discovered, they can be brought into scope by agreement so as not to constrain testing.

In contrast to this, some industries are highly focused on compliance-based testing. This is where standards, checklists and simple “yes/no” or “go/no-go” tests are the driver. This conflicts with several aspects of security testing:

- Checklists/schedules are often drawn up ahead of testing starting, which does not allow for open-ended testing.

- Security is rarely on or off – it is a sliding scale. A tester identifies a vulnerability; the business must determine the risk and act on this. Checklists do not allow subtle distinctions to be made.

Compliance-based testing is particularly common in industries where safety is a primary driver, or where regulation is strong. This includes automotive, maritime, and chemical industries.

There are several examples of this type of testing going wrong:

- Inappropriate tests – for example, tests for Bluetooth Low Energy on a system that only supports Bluetooth Classic.

- Impossible to meet schedules – a test schedule is drawn up based on obtaining root access to a device, which is not given. As a result, an entire test would be taken up trying to obtain root access and not performing the actual security tests.

- Constraining tests – checklists are often based on previous testing of older generations of a device, or similar classes of device. This results in tests for vulnerabilities found in other devices being applied, even when they are not appropriate. For example, performing a review of a Linux system on a Windows CE based IVI.

And yes, we’ve been presented with highly constrained test scopes that include all of the above and plenty more!

It is recommended that open-ended testing is used on all systems. Good communication with the customer ensures that coverage is acceptable and that testing is heading in an appropriate direction.

Time-boxed vs goal-oriented

Nearly all testing is time-boxed: a given period of time is provided for testing.

The period of time must be appropriate for the system being tested. It is better for a test to be too long than too short.

For complex tests, consider reserving an additional period of testing. This can be used as a top-up if low coverage has been achieved in some areas.

Goal-oriented testing will follow a list of goals, with the testing not being complete until the goals are achieved. This works well with checklist-based testing.

System vs component

This denotes what is under test.

If it refers to the scope, it can be helpful. For example, if a TCU is being tested, constraining the scope to the component will prevent time being wasted on other aspects of the car.

If “system or component” refers to the provided equipment, then it tends to be unhelpful. Testing a component totally standalone (without any other devices to power or stimulate it) will generally result in slow progress and very low coverage and depth, as time is spent trying to reverse engineer it.

In general, tests should never be carried out with a single, standalone component. This can result in all time being occupied by simply making the device work as intended.

Testing a component as part of a system is sensible and productive.

Practicalities of testing

Destructive vs non-destructive

It should be accepted that devices are likely to be damaged during testing and almost certainly cannot be used safely in a vehicle.

Insisting on non-destructive testing severely limits what can be tested and slows down the testing that remains possible.

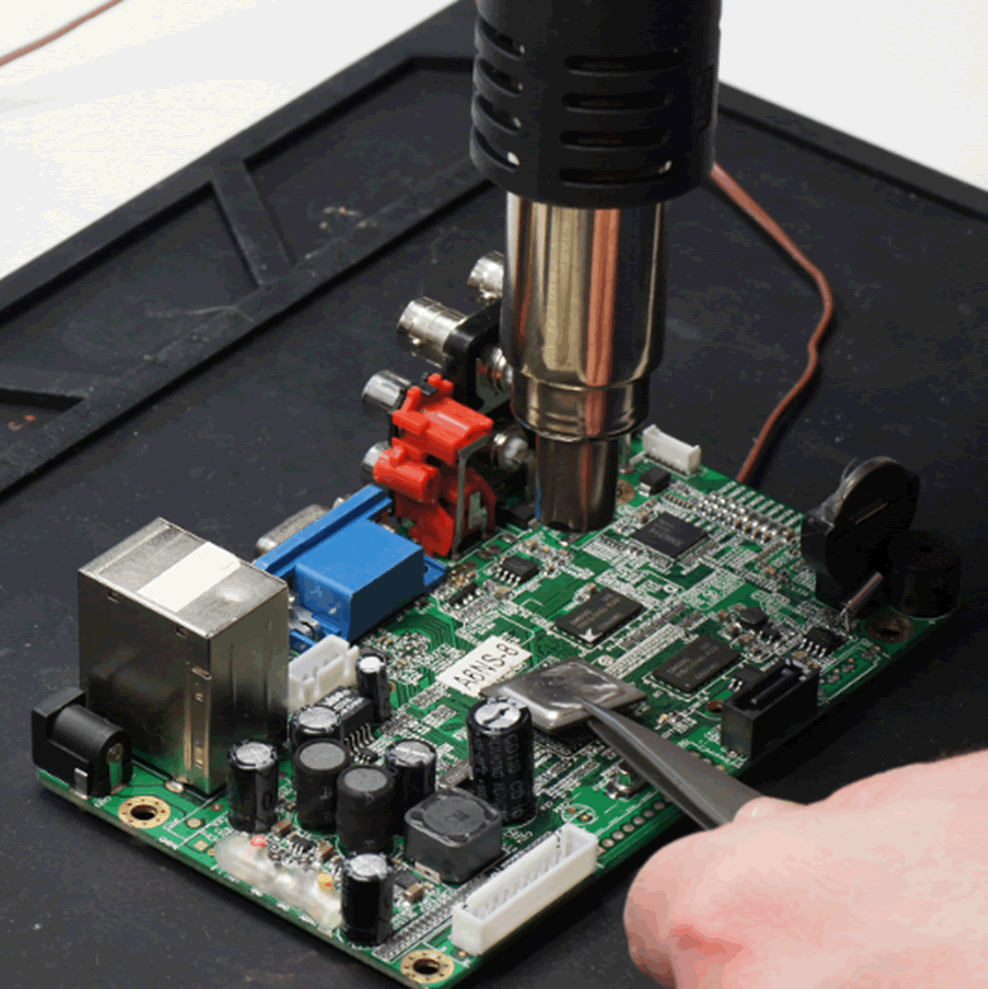

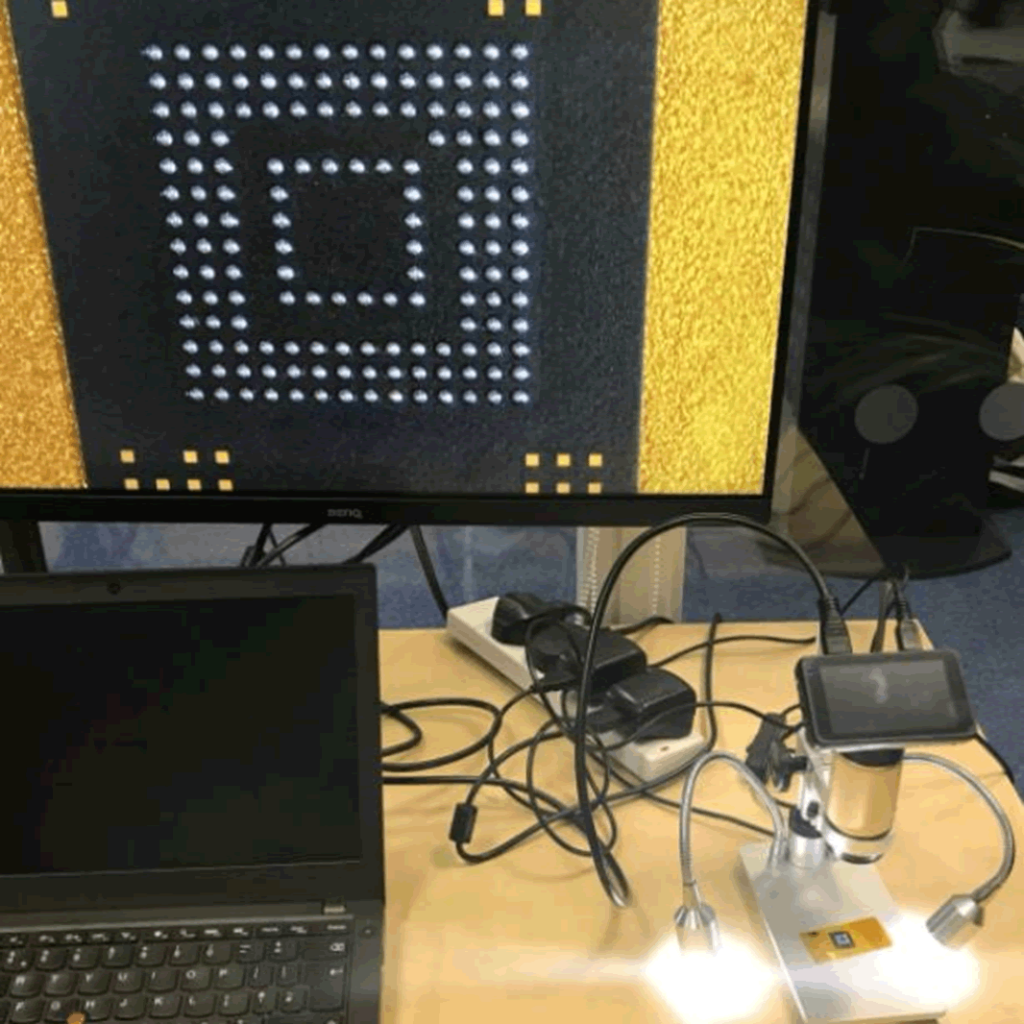

For example, if firmware recovery requires chip-off (removing the memory chip from the board), the chance of successfully reballing and refitting the chip, and of the device working afterwards, is limited. If the test must be non-destructive, chip-off may not be possible at all, so the chance of a meaningful security review being carried out on the firmware is also limited.

Authorisation

All entities and components in the system require authorisation to perform security testing.

This can become complex when dealing with large systems, where an ECU may be made by a vendor, use a SIM card from another provider, and interface with a backend managed by someone else.

It is the customer’s responsibility to determine which parties must authorise testing; this should happen weeks in advance of a test.

Incorrect or missing authorisation can severely hamper a test.

Scheduling

Most tests will follow several phases:

- Setup – connecting the equipment and getting it working.

- Basic observation – determining, at a high level, how the system works. This guides the next stage.

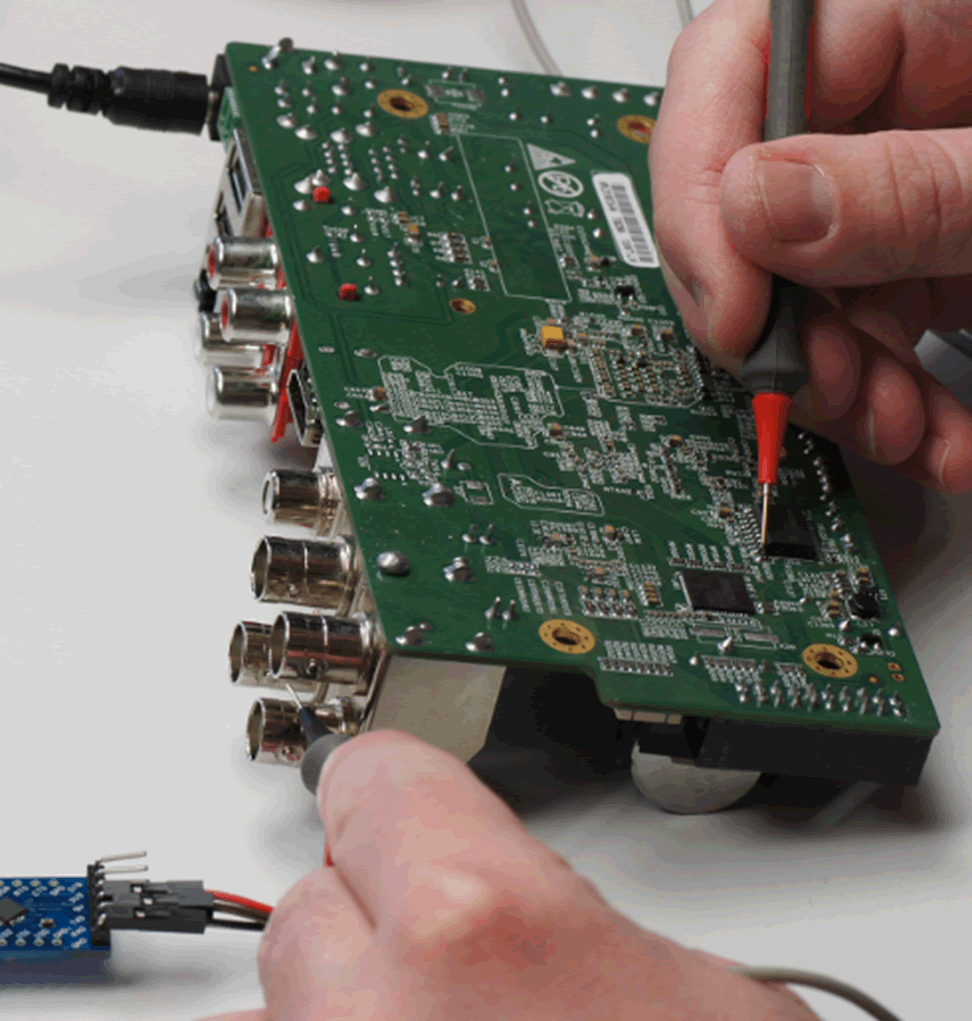

- Instrumentation – connecting various tools to the system (logic analysers, CAN monitors, oscilloscopes, serial adapters, Wi-Fi access points).

- Detailed observation – using the instrumentation to observe, in-depth, how the system operates.

- Attack planning – using the observation to determine which attacks are appropriate.

- Attack – carrying out the attacks.

- Reporting – documenting the attacks.

In general, there will be several days required for the setup, basic observation, and instrumentation of a system before work can begin finding deeper vulnerabilities.

Reporting can take a variable amount of time, typically depending on the type of testing.

Lab space

The ideal scenario is one where your tester has proper testing facilities of their own. At Pen Test Partners we have a lab with space for two cars at the same time.

Note: Yes, we know the pictures here aren’t all of car components, but we can’t show a lot of our work due to client confidentiality.