WannaCry shouldn’t have been as much of an issue as it was. Perhaps people had forgotten what was learned over a decade ago about managing worm outbreaks.

Here’s how I learned to prepare a network to cope with malware infections better. I’ve written it as a case study, to show why I implemented the various controls and how they helped.

Blaster; August 2003

First mistake: asking individual departments to patch their own machines. This was widely ignored, whether because people didn’t understand the urgency of the situation or because of politics. I should at least have verified the patch level, even if I didn’t have permission to push updates out.

Only about a quarter of the desktops had been patched by the time of the outbreak; all the servers we managed were patched.

The first signs of infection by Blaster were early on Tuesday 12th August. We observed them as portscans across our network from two separate hosts: one internal, and the other a home machine connected via dial-up networking. Fortunately, I could track these on a recently installed Snort IDS, plugged into a SPAN port on our core router.
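Snort did this detection for us, but the underlying idea is simple enough to sketch: a scanning host contacts many distinct destinations on the same port. The example below is a minimal illustration, not Snort’s logic. It assumes connection records have already been exported as hypothetical `(source_ip, destination_ip, destination_port)` tuples; `find_scanners` and the default threshold of 100 targets are illustrative choices.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

# Hypothetical record format: (source_ip, destination_ip, destination_port).
Flow = Tuple[str, str, int]


def find_scanners(flows: Iterable[Flow], min_targets: int = 100) -> Dict[str, int]:
    """Return {source_ip: fan_out} for sources that contacted at least
    `min_targets` distinct destination IPs on any single port."""
    targets: Dict[Tuple[str, int], Set[str]] = defaultdict(set)
    for src, dst, port in flows:
        targets[(src, port)].add(dst)  # count each destination only once

    scanners: Dict[str, int] = {}
    for (src, _port), dsts in targets.items():
        if len(dsts) >= min_targets:
            # Keep the largest fan-out seen for this source across ports.
            scanners[src] = max(scanners.get(src, 0), len(dsts))
    return scanners
```

For example, a Blaster-infected host sweeping 135/tcp across 200 addresses would be reported with a fan-out of 200, while a workstation repeatedly talking to one file server would not, however many packets it sends.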

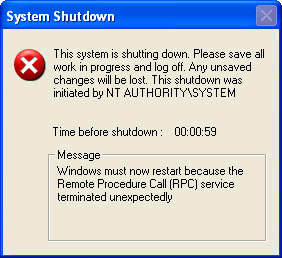

The worm’s portscan traffic increased from near zero at 7:40am to around 20,000 packets per minute by 8:40am. By mid-morning the network was sluggish, and blocking the worm’s transmission vector (TFTP on port 69/udp) was a treatment almost as bad as the original disease. The extra filtering on the routers was done in relatively slow software rather than in fast hardware, which led to very poor network performance; however, it did slow the spread of the worm.

As the network was basically unusable, and the vulnerable computers kept rebooting due to the worm’s constant attempts at infection, computers were patched by hand for the most part. The IDS was used to track the infected hosts and, later on, to initiate automated clean-up scripts.

It was obvious that we needed to make major improvements in patching and automated response to prevent this from recurring on such a large scale.

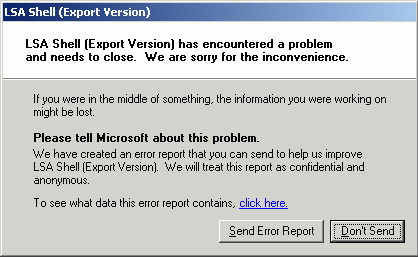

The worm was able to enter the network due to poor access control between the external and internal networks: remote access and personal laptops were both possible vectors for the worm to be carried behind the security perimeter. The lack of a patch management system for desktops meant that there was a very large vulnerable population for the worm to target. Stricter filtering between inside and outside also went on the list, to at least buy us some time before another worm got in.

Welchia; late 2003

A custom-written patching solution had been deployed by the time Welchia was released, although it took a few weeks to roll a patch out to 90% of the desktop computers. However, there were still enough vulnerable machines to crash the network, for two reasons:

- The patch solution was not 100% effective due to many misconfigured machines. Some administrators had deliberately removed the Domain Admins group from the local Administrators group on their machines. Other machines had insufficient disk space to copy patches, or did not have the standard administrative shares set up.

- Welchia had a more aggressive scanning routine, so even a smaller infected population could exhaust the spare memory on the core router.
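The misconfigurations in the first point can be caught before a push rather than discovered by a failed one. The sketch below is hypothetical throughout: `HostState`, its fields and `split_fleet` are illustrative names, and gathering the facts from each Windows machine is assumed to have happened elsewhere. It only shows the triage step of separating hosts that can be patched remotely from those that need a visit.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass
class HostState:
    """Hypothetical per-host facts collected before pushing a patch."""
    name: str
    domain_admins_is_local_admin: bool
    admin_share_present: bool  # the standard administrative shares exist
    free_disk_mb: int


def preflight(host: HostState, patch_size_mb: int) -> List[str]:
    """List the reasons a remote push would fail; an empty list means ready."""
    problems = []
    if not host.domain_admins_is_local_admin:
        problems.append("Domain Admins removed from local Administrators")
    if not host.admin_share_present:
        problems.append("administrative shares missing")
    if host.free_disk_mb < patch_size_mb:
        problems.append("insufficient disk space to copy patch")
    return problems


def split_fleet(hosts: Iterable[HostState], patch_size_mb: int) -> Tuple[List[str], Dict[str, List[str]]]:
    """Partition hosts into those ready to patch and those needing manual work."""
    ready: List[str] = []
    manual: Dict[str, List[str]] = {}
    for h in hosts:
        issues = preflight(h, patch_size_mb)
        if issues:
            manual[h.name] = issues
        else:
            ready.append(h.name)
    return ready, manual
```

The `manual` dictionary doubles as a work list, with the reason attached, for whoever has to visit those machines.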

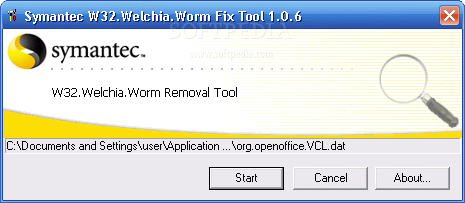

This time the automatic response scripts were quickly adapted from the ones used during Blaster. These killed off the worm’s process, deleted the executable and removed the registry keys used to start it. They were invoked once a particular host had exceeded a threshold for ICMP activity, since ICMP was the scanning method Welchia used.
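The trigger logic can be sketched as a per-host sliding window. This is an illustration under assumptions, not the original scripts: `IcmpThreshold`, the limit and window values, and the `on_trigger` callback (standing in for launching the clean-up script) are all hypothetical. Each host fires at most once, so a host that keeps scanning while it is being cleaned does not launch repeated clean-ups.

```python
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, Set


class IcmpThreshold:
    """Call `on_trigger(host)` once when a host sends more than `limit`
    ICMP echo requests within any `window`-second interval."""

    def __init__(self, limit: int, window: float, on_trigger: Callable[[str], None]):
        self.limit = limit
        self.window = window
        self.on_trigger = on_trigger  # hypothetical hook, e.g. start clean-up
        self.events: Dict[str, Deque[float]] = defaultdict(deque)
        self.triggered: Set[str] = set()

    def observe(self, host: str, timestamp: float) -> None:
        if host in self.triggered:
            return  # already handed over to the response script
        q = self.events[host]
        q.append(timestamp)
        # Discard events that have slid out of the time window.
        while q and timestamp - q[0] > self.window:
            q.popleft()
        if len(q) > self.limit:
            self.triggered.add(host)
            del self.events[host]
            self.on_trigger(host)
```

A pinging worm crosses a threshold such as 50 echo requests in 10 seconds almost immediately, while a host sending one ping a second never does.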

The escalated response, for hosts that could not be cleaned automatically, was to disable the Ethernet port on the nearest upstream switch. An automated script maintained a MAC-address-to-switchport mapping for this purpose, and we had a good mapping of IP address to asset number, and hence to the people responsible for each host.

By disabling the switchport we forced a call to the help-desk where we could intercept and remediate the issue.
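The lookup chain behind that response can be sketched as follows: alerting IP to MAC address, MAC address to switchport, and IP to asset record and owner. The three dictionaries stand in for whatever the real scripts maintained; their names and the `locate_infected_host` function are hypothetical. Actually shutting the port, for instance by setting the IF-MIB `ifAdminStatus` object to `down(2)` over SNMP or through the switch CLI, is deliberately left out.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Asset:
    asset_number: str
    owner: str


@dataclass
class SwitchPort:
    switch: str
    port: str


def locate_infected_host(
    ip: str,
    arp_table: Dict[str, str],           # IP -> MAC, e.g. from the router's ARP cache
    mac_to_port: Dict[str, SwitchPort],  # lowercase MAC -> nearest upstream switchport
    ip_to_asset: Dict[str, Asset],       # IP -> asset record
) -> Optional[dict]:
    """Resolve an alerting IP to the switchport to disable and the person
    to contact. Returns None if the MAC or the port cannot be found."""
    mac = arp_table.get(ip)
    if mac is None:
        return None
    mac = mac.lower()  # normalise so lookups match however the MAC was recorded
    port = mac_to_port.get(mac)
    if port is None:
        return None
    asset = ip_to_asset.get(ip)
    return {
        "ip": ip,
        "mac": mac,
        "switch": port.switch,
        "port": port.port,
        "owner": asset.owner if asset else "unknown",
        "asset_number": asset.asset_number if asset else "unknown",
    }
```

Returning the owner alongside the port means the help desk already knows who is about to ring when the port goes down.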

The remote access services had been moved behind an internal firewall, to prevent Blaster from being re-introduced by a careless user. However, this time the worm was brought inside the perimeter on someone’s laptop. This hole was much harder to plug, as many laptops were personal equipment and many hundreds of them were allowed to connect to the internal network. Because of this there were sporadic outbreaks of Welchia until it deactivated on January 1st 2004, though none of these were anything like as disruptive as the first Blaster infection.

The patch management system was by no means ideal, but it had drastically reduced the vulnerable population. This in turn allowed the network to remain functioning most of the time, which made network-based countermeasures possible instead of entirely manual intervention for infected computers. The preferred solution would have been to install a management system such as Altiris, HfNetChk or UpdateExpert, and to disconnect from the network any machines that did not meet basic security standards. This proved problematic, partly because of financial constraints and partly because no policy decision was forthcoming on whether central IT support was allowed to do such a thing.

Sasser; 2004

Fortunately, I learned of the existence of Sasser by reading the diary on the Internet Storm Center website one Sunday night. This gave us roughly 12 hours to prepare for the probable Monday morning appearance of the worm on our internal network. Most machines had again been patched by this stage.

By now, the IDS had been patched so that it could attempt to disrupt TCP connections by sending spoofed RST packets. A rule was added to apply this to FTP transfers, since Sasser used FTP to copy the worm’s code between hosts. The automated tracking and cleaning scripts were written from descriptions provided by ISC, Symantec and other vendors. Thanks to the early warning of this worm, disruption was minimal.
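The patched IDS did this internally, and the sketch below is not that code. It only illustrates what a spoofed reset involves: a 20-byte TCP header with the RST flag set, addressed as if it came from one end of the observed FTP session, carrying a sequence number taken from that session (a receiver only honours a reset whose sequence number falls in its window), and a checksum computed over the IPv4 pseudo-header as described in RFC 1071. `build_tcp_rst` is a hypothetical helper; wrapping the segment in an IP header and sending it on a raw socket, which needs administrative privileges, is not shown.

```python
import socket
import struct

TCP_PROTO = 6  # IP protocol number for TCP
RST = 0x04     # TCP flag bit for reset


def internet_checksum(data: bytes) -> int:
    """RFC 1071 checksum: one's complement of the one's-complement sum of 16-bit words."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length data with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]
    while total >> 16:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_tcp_rst(src_ip: str, dst_ip: str, src_port: int, dst_port: int, seq: int) -> bytes:
    """Return a TCP header with only RST set; `seq` comes from the observed session."""
    offset_flags = (5 << 12) | RST  # header length of 5 x 32-bit words, RST flag
    # Fields: src port, dst port, seq, ack, offset/flags, window, checksum (0 for now), urgent.
    header = struct.pack("!HHIIHHHH", src_port, dst_port, seq, 0, offset_flags, 0, 0, 0)
    # IPv4 pseudo-header: source, destination, zero, protocol, TCP length.
    pseudo = struct.pack("!4s4sBBH", socket.inet_aton(src_ip), socket.inet_aton(dst_ip),
                         0, TCP_PROTO, len(header))
    checksum = internet_checksum(pseudo + header)
    # The checksum field occupies bytes 16-17 of the TCP header.
    return header[:16] + struct.pack("!H", checksum) + header[18:]
```

In practice an injector usually resets both directions of the connection, since the IDS sees the traffic on a SPAN port and is racing the real packets.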

The worm did indeed get in first thing Monday morning, but because the remediation measures worked from the start, the network remained stable, and so the remediation measures continued to work. Had the infection been somewhat larger, and the network collapsed as a result, we would have been back to running around patching hosts manually.

There is nothing like being notified of infected machines within a few minutes of them trying to spread an infection. And there is nothing quite like being able to drop a machine off your network within minutes of the IDS mailing you about it.

So overall, we regained some degree of control over the network by:

- Knowing what our assets were, and the IP address, owner and patch level of each

- Reducing the vulnerable population of machines by quick and effective patching

- Automated clean-up of infected machines where possible

- Disconnecting machines that couldn’t be patched and were showing signs of infection

- Gaining some degree of visibility and control over the network by introducing an IDS/IPS solution

- Segmenting the network where possible; in this case, separating dial-up and VPN users from the rest of the desktop population
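The first two points work together: an inventory that records patch level per host makes the vulnerable population something you can measure and chase, owner by owner. A minimal sketch, assuming a hypothetical `Host` record whose `installed` set holds bulletin identifiers (MS03-026 was the bulletin for the RPC DCOM flaw that Blaster exploited):

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Set


@dataclass
class Host:
    """Hypothetical inventory record."""
    ip: str
    owner: str
    installed: Set[str] = field(default_factory=set)  # bulletin IDs applied


def vulnerable_by_owner(inventory: Iterable[Host], required: str) -> Dict[str, List[str]]:
    """Group the IPs of hosts missing `required` by the owner responsible."""
    out: Dict[str, List[str]] = defaultdict(list)
    for h in inventory:
        if required not in h.installed:
            out[h.owner].append(h.ip)
    return {owner: sorted(ips) for owner, ips in out.items()}


def patched_fraction(inventory: List[Host], required: str) -> float:
    """Share of the inventory that has `required` installed."""
    if not inventory:
        return 1.0
    patched = sum(1 for h in inventory if required in h.installed)
    return patched / len(inventory)
```

A per-owner list gives each department something concrete to act on, which is more persuasive than a general request to patch.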

To do this, at least a few of the sysadmins needed some time that wasn’t dedicated to firefighting or assigned to other projects, so that they could start building the tools used to respond to future attacks. Too often I visit places where everyone is flat out dealing with day-to-day operations, which leaves no time for forward IT planning.

In the case of ransomware, you will also benefit from giving people write access only to the folders and file shares that they really need, which is part of the principle of least privilege. We had a very good backup system: at any point there was a weekly full backup of the servers on tape, plus daily differentials.
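With weekly fulls and daily differentials, each differential holds everything changed since the last full, so restoring to a given day needs at most two tapes: the latest full on or before that day, plus the latest differential after that full and on or before that day. A small sketch of that selection, with `restore_set` a hypothetical helper and the backup dates supplied by whatever catalogue you keep:

```python
from datetime import date
from typing import List, Optional, Tuple


def restore_set(fulls: List[date], diffs: List[date], target: date) -> Optional[Tuple[date, Optional[date]]]:
    """Return (full, differential) needed to restore to `target`.

    The differential is None if no differential has been taken since
    that full; the result is None if no full exists on or before `target`."""
    base = max((d for d in fulls if d <= target), default=None)
    if base is None:
        return None
    # Differentials are cumulative since the last full, so only the newest one matters.
    later = [d for d in diffs if base < d <= target]
    return base, max(later, default=None)
```

This is the trade-off of differentials over incrementals: each night’s backup grows through the week, but a restore never needs more than two sets.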

If you’ve got a good boss who can deal with the internal communications while you do the necessary technical stuff, that will really help as well.

Doesn’t that sound familiar? It’s almost the same advice being given this weekend around WannaCry.

Fourteen years later, some companies have forgotten the lessons learned in 2003/4 with these worms, and again with Conficker.