… and keep a domain password auditing service online.

Making money on GPUs, the hard way…

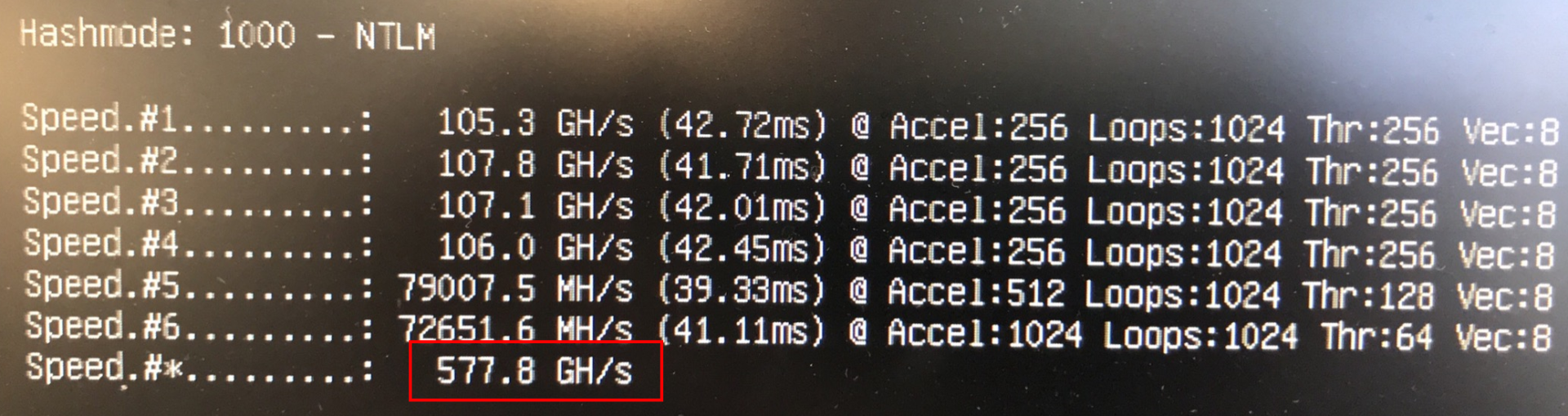

At PTP we had a fairly decent GPU password cracking box called Titan. It used 4×1080 GPUs and had an NTLM hash rate of around 180GH/s. Several years ago I realised that the box was sitting idle much of the time, and that we should offer customers a service that used this idle time to audit the passwords in their Windows AD domains. Thus Papa, the PTP Advanced Password Auditor, was born.

The problems

Over time the demands on Titan became unsustainable – customers started using ThreeRandomWord passwords which we needed to crack, the PTP consultancy team grew rapidly, and Papa was using much more of Titan’s resources. We didn’t just need one new server: we needed a dedicated Papa server, a more powerful general-purpose server, and a backup! There were just three problems:

- It was a year into the pandemic, and we faced lockdown constraints

- There was a worldwide chip shortage

- Cryptocurrency was at an all-time high, so miners were buying up the GPUs before they made it onto shelves

nVidia deals us a blower

GPUs come with different kinds of cooling options. You can have air cooling or water cooling. Water isn’t practical for 2U servers, so we’re left with fan cooling. That can be performed in two ways – with open-air fans which blow air directly onto the GPU, and blowers where the air flows across the card and out the back.

Blowers are the only answer in short height servers where components are tightly packed. The air needs to flow through the case front to back without being moved in different directions as you would have with an open-air cooler.

The RTX 3080 was our card of choice: it offered excellent cracking performance for the money and fitted our thermal constraints. Each card was twice as fast as our old 1080 cards, and we wanted twice as many cards! When the card was released, manufacturers produced it in a blower version (now called Turbo for the 3xxx series).

Unfortunately, a short time after the initial production runs, they took the decision to cancel that format, and blowers were only available on their higher end cards. Those cards didn’t offer any extra cracking performance, but they did have a lot more RAM. Whilst they may be the best choice for machine learning tasks in a datacentre, they were completely unnecessary for us. This made 3080 blowers impossible to purchase from official retailers. Did I say there were just three problems…?

Shopping

We shopped around, and some suppliers were claiming to have stock of GPUs, but when pushed for 12 GPUs they couldn’t fulfil the order. They did manage to supply us with two very nice Gigabyte Epyc G292-Z20 servers though, and I’m very grateful for their help. These boxes are 2U high yet take eight full size GPUs! Perfect for high density GPU compute. So, we had the servers, but no GPUs…

Paying the scalpers

After months of waiting on several suppliers to fulfil orders, we gave in, headed to everyone’s favourite auction site, and tried to snipe some RTX blowers. The 3080s were too new, too rare, and super expensive, so we settled on some 2xxx series cards, picked up a few 3xxx series cards where we could, and started building servers. By September 2021 we had found a supplier of 3080s and ordered four more to complete our last server. Our purchase history over those six months ended up looking like this:

Papa servers:

- 4x 2060

- 1x 2080

- 1x 3070

- 2x 3080

General-purpose server:

- 2x 2080

- 4x 3080

In total we went from having around 180GH/s of compute performance to just shy of 1TH/s over three machines… and we still have the 1080s, although they are starting to show their age.
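As a rough sanity check on those numbers, here is a back-of-the-envelope sketch in Python. It uses only figures quoted in this post: Titan's 180GH/s across four 1080s, the claim that a 3080 is about twice as fast as a 1080, and the card counts from the purchase list. The per-card rates it produces are estimates derived from those statements, not benchmarks, and the function and variable names are made up for illustration. The 2060, 2080 and 3070 rates are deliberately left out because the post doesn't give them.

```python
# Figures quoted in the post (NTLM hash rates, in GH/s).
TITAN_TOTAL_GHS = 180        # 4x GTX 1080 in Titan
TITAN_CARD_COUNT = 4
SPEEDUP_3080_VS_1080 = 2     # "twice as fast as our old 1080 cards"

# Card counts from the purchase list above.
papa_servers = {"2060": 4, "2080": 1, "3070": 1, "3080": 2}
general_purpose = {"2080": 2, "3080": 4}


def estimate_3080_contribution(*servers):
    """Return (per-1080 rate, estimated per-3080 rate, total 3080 rate) in GH/s."""
    per_1080 = TITAN_TOTAL_GHS / TITAN_CARD_COUNT
    per_3080 = per_1080 * SPEEDUP_3080_VS_1080  # assumption: exactly 2x
    count_3080 = sum(server.get("3080", 0) for server in servers)
    return per_1080, per_3080, count_3080 * per_3080


per_1080, per_3080, total_3080 = estimate_3080_contribution(
    papa_servers, general_purpose
)
print(f"Estimated per-1080 rate: {per_1080:.0f} GH/s")
print(f"Estimated per-3080 rate: {per_3080:.0f} GH/s")
print(f"Estimated total from six 3080s: {total_3080:.0f} GH/s")
```

On these assumptions the six 3080s alone account for roughly 540GH/s, with the 2xxx series cards and the 3070 making up the rest of the total.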

Here’s what it sounds like in full flow:

Build process

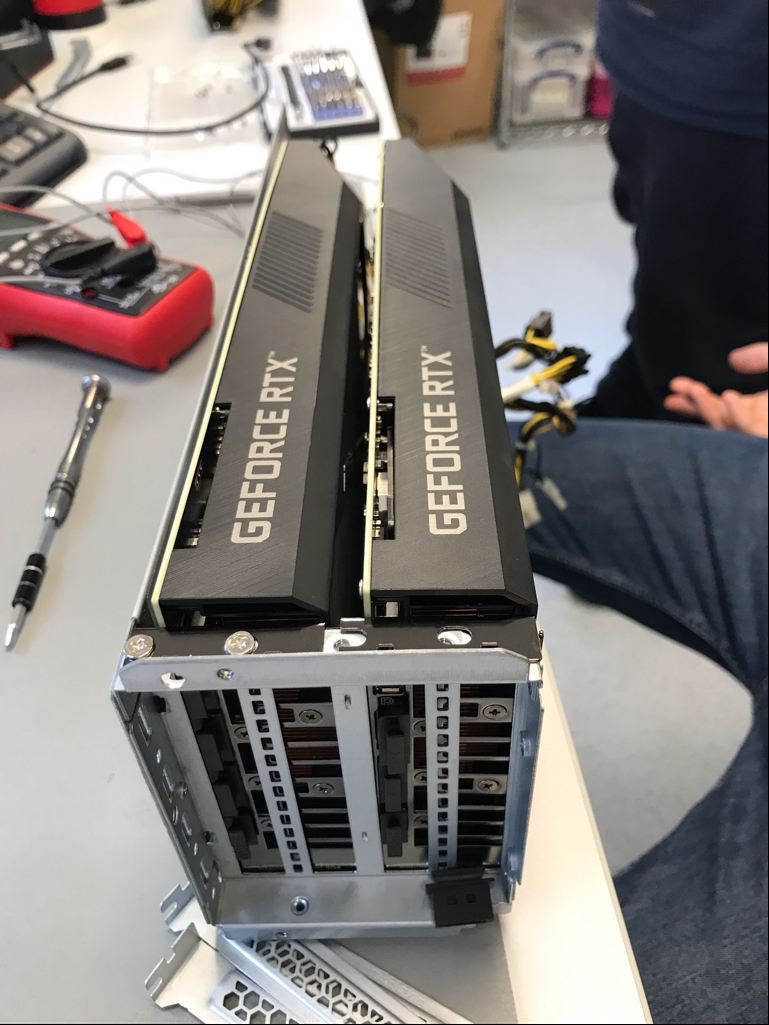

Four of our 3080 turbos:

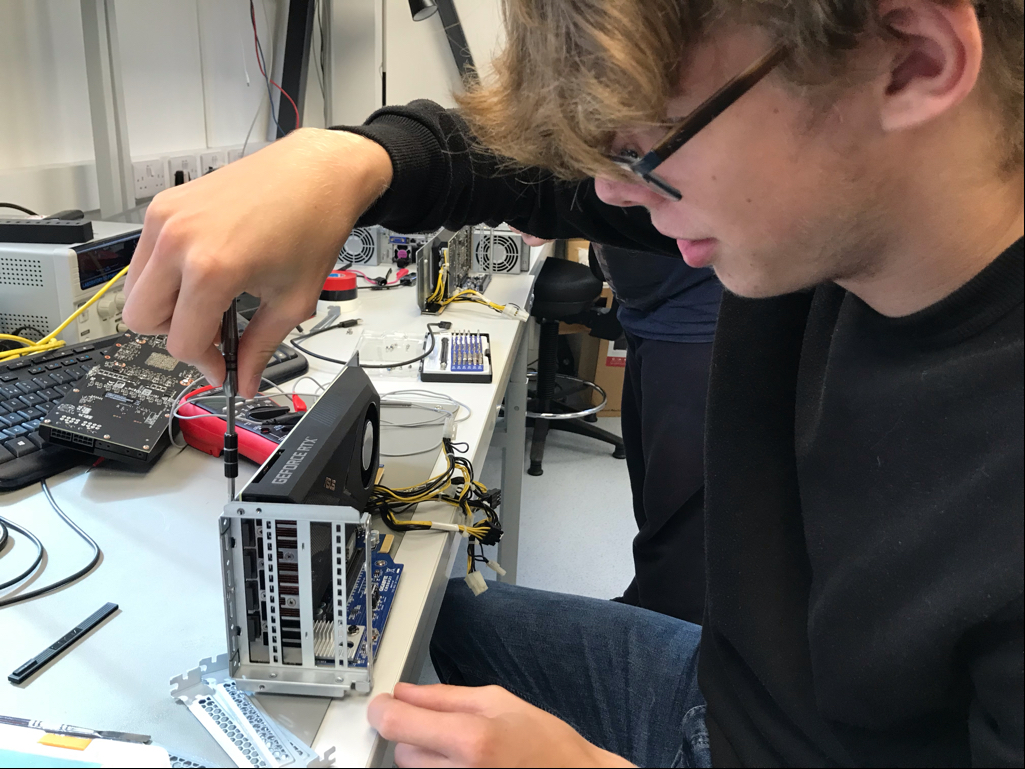

Our Tom installing cards in each server quarter section:

Completed server quarter:

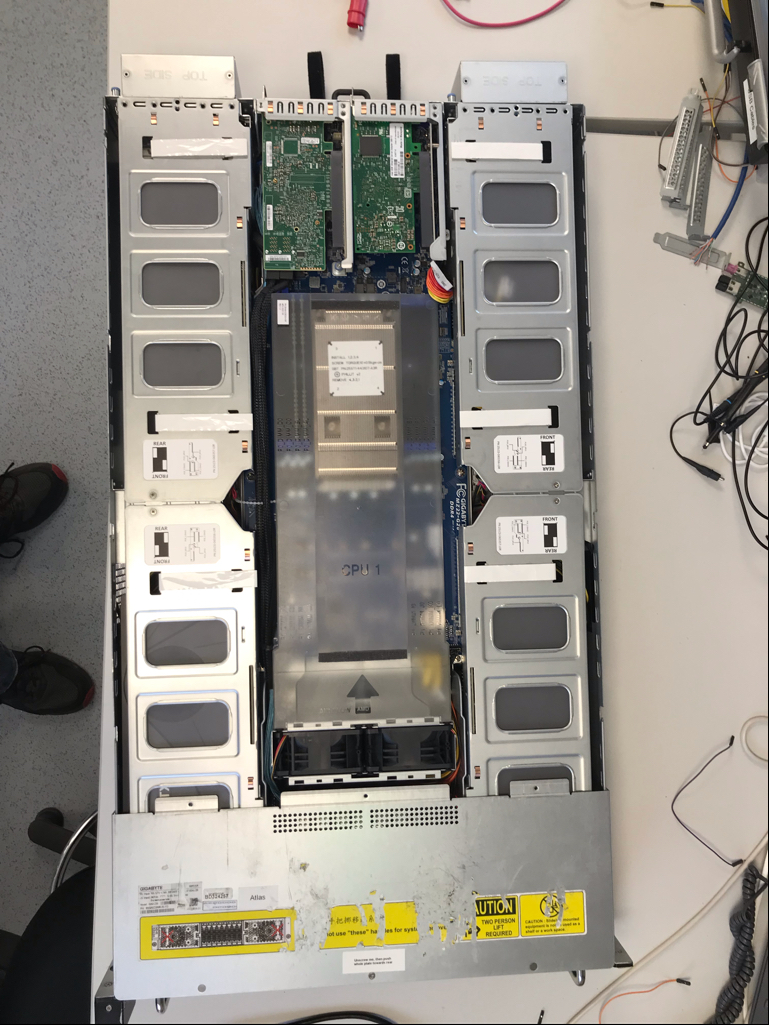

Overhead view showing the location of the 8 GPUs:

Conclusion

Was it worth all the effort? Yes:

It’s been difficult getting GPUs, and the situation with nVidia has made the future of password cracking quite muddy. With the uptick in AI and machine learning, I understand their desire to push higher end GPUs with lots of RAM in the turbo form factor, but it leaves niche tasks such as password cracking in limbo.

We don’t need to run the ethash mining algorithm, but we do want to run our cards in a 2U server with a corresponding airflow design, at a price that doesn’t cost the earth.

For future builds, ironically, we may need to look at mining rig designs to run non-turbo cards. This will take up a huge amount of rack space, but might be worth it to avoid the expensive high-end GPUs.

Should we have just mined crypto? …it’s a thought 🙂

If you’re interested in seeing Papa in action, contact us.